Nano Banana Pro API on WaveSpeed: How to Call It + Pricing Notes

Ever stared at the Nano Banana Pro API on WaveSpeed docs and thought “What on earth am I supposed to do next?” You’re not alone. I’m Dora, I personally tested dozens of APIs, and I’ve had my fair share of undocumented endpoints and surprise billing emails. In this guide, I walk you step-by-step through how to call Nano Banana Pro API cleanly and avoid pricing pitfalls that can blindside your project budget.

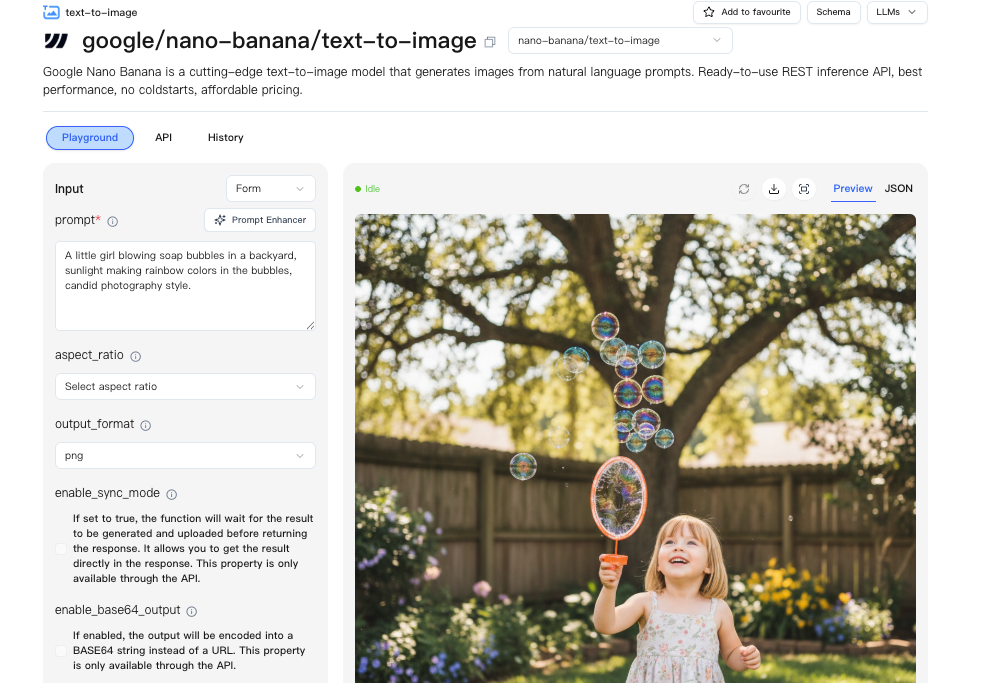

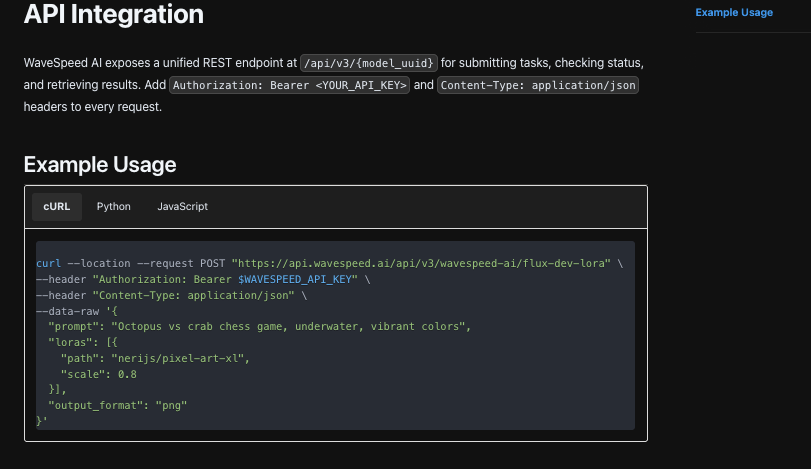

Endpoint / flow

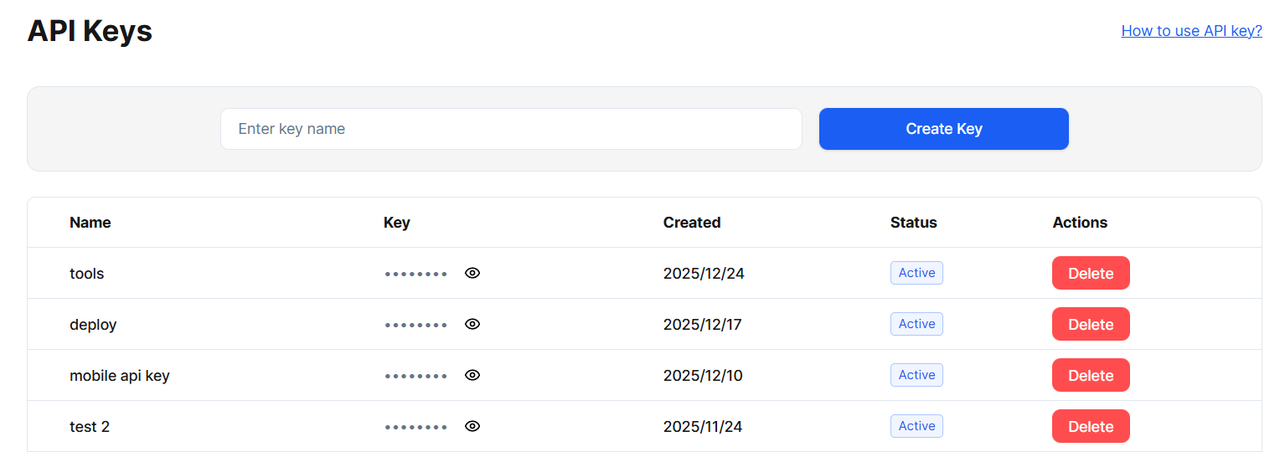

I didn’t switch my whole stack. I wrapped Nano Banana Pro behind a small adapter service, so I could toggle between providers without ripping out code. WaveSpeed’s dashboard made that easier than I expected. One endpoint, consistent auth, and a simple quota view that didn’t make me hunt.

My flow went like this:

- A small pre-processor cleaned inputs (lowercasing jargon, removing extra whitespace, unifying timezone stamps).

- I sent requests to the Nano Banana Pro endpoint with a stable system instruction and a short set of examples.

- I cached stable prompts and common responses. Nothing fancy, just a local TTL cache and WaveSpeed’s own response caching for identical payloads.

- I stored traces: prompt hash, parameters, latency, token counts, and error codes when they showed up.

What helped most was the predictability. The endpoint didn’t try to do clever routing on my behalf. If I asked for Nano Banana Pro, I got it. During my runs, median latency hovered around a steady range, and the variance didn’t spike during US work hours as much as I expected. Not perfect, but calmer than my baseline.

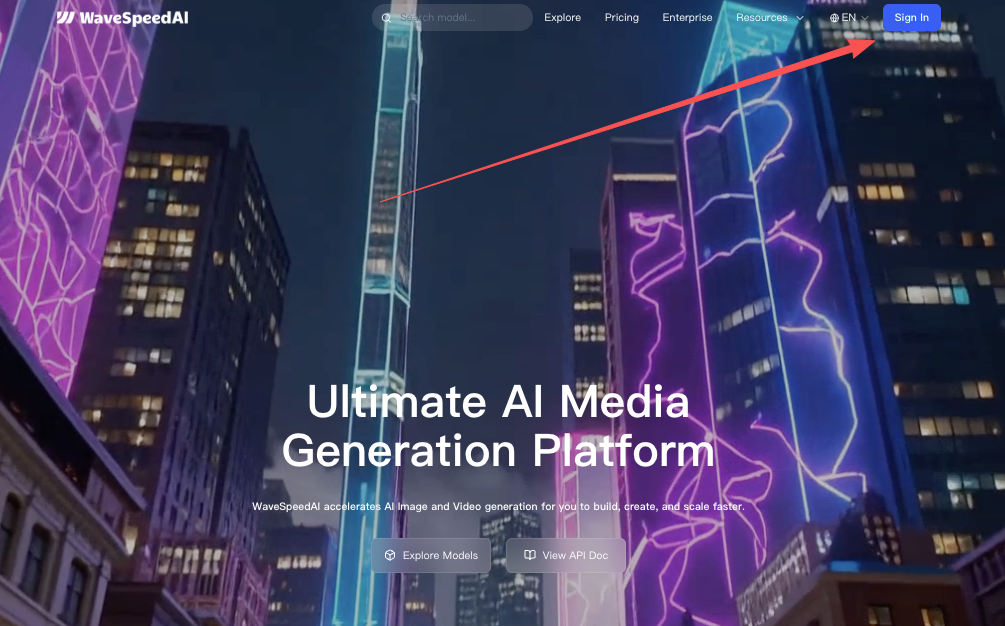

If you care more about stable routing and transparent usage than chasing the cheapest line item, try our Wavespeed. We focus on predictable endpoints, clean auth, and usage visibility that doesn’t require guesswork.

If you care more about stable routing and transparent usage than chasing the cheapest line item, try our Wavespeed. We focus on predictable endpoints, clean auth, and usage visibility that doesn’t require guesswork.

One small wrinkle: the streaming option worked, but in my use it didn’t reduce perceived latency enough to matter. For short texts, streaming felt like extra ceremony. For longer summaries, it was pleasant but not necessary. I left it off for everything except manual review sessions.

Key params

I try not to tweak knobs unless there’s a reason. A handful actually mattered here.

- Model selection: Nano Banana Pro stayed consistent across my test period (as of January 2026). No surprise swaps. This steadiness is the main reason I kept going.

- Temperature: For tagging and classification, I parked it near zero. That cut down inconsistency. For summarization with a bit of synthesis, 0.3–0.4 gave me smoother phrasing without drifting off brief.

- Max tokens: I set tight ceilings for short tasks to avoid ballooned outputs. For long summaries, I gave generous limits and relied on a hard character count in post.

- System instruction: A short, plain instruction beat long policy blocks. I used one sentence to set the role, plus a tiny rubric for “don’t infer, show evidence when unsure.” The more I added, the more it hedged.

- Top-p vs. temperature: I kept top-p fixed at 1.0 while nudging temperature. Mixing both made differences harder to trace.

What caught me off guard was how sensitive the model was to example placement. Two concrete examples right after the instruction worked better than five mixed throughout. When I moved examples to the very end, quality dipped on edge cases. The API didn’t enforce format, but consistency paid off: same field names, same order, same punctuation.

Quality knobs

Beyond temperature and token caps, a few moves changed the feel of outputs:

- Short primers beat long policies. One-line intent + two examples produced fewer over-explanations than a page of guidance.

- Evidence prompts helped. Asking “quote the phrase that triggered this tag” reduced imaginative tagging by a lot. It also made QA calmer because I could spot hallucinations quickly.

- Soft constraints > hard constraints. Saying “aim for 3–5 bullets” worked better than “exactly 4 bullets.” The model respected boundaries without getting jumpy.

- Deterministic framing: I added a smidge of structure at the end, “Return: label, confidence (0–1), evidence (text).” It kept outputs tidy without feeling like a schema prison.

Quality dipped in two cases: messy OCR inputs and domain slang. The fix wasn’t more clever prompting. It was just a tiny pre-step: trim junk characters, unify hyphens, and list unknown terms at the top as “terms seen.” Once I did that, the model stopped guessing weird labels. This didn’t save me time on day one, but by the fourth run I noticed I wasn’t rereading as much. Less mental effort counts.

Pricing considerations

I didn’t chase the lowest line item. I wanted predictable spend for predictable output.

I didn’t chase the lowest line item. I wanted predictable spend for predictable output.

Across my tests, Nano Banana Pro landed in the mid-range for cost per thousand tokens on WaveSpeed. The quiet benefit was more consistent token usage. Because the model didn’t ramble with the right prompt shape, I saw fewer surprise spikes. My average output length for summaries stabilized after I added the soft bullet constraint.

Two small habits reduced costs without hurting quality:

- Prompt caching for recurring instructions and examples (WaveSpeed did part of this: my adapter did the rest so identical requests short-circuit).

- Early exits for no-op cases. If the input is too short or obviously irrelevant, skip the call and return a default. This sounds obvious, but I tend to forget it until I see the bill.

If you’re dealing with spiky workloads, the pay-as-you-go model made sense for me. If your usage is steady and heavy, you might look at committed credits, but only after a month of real numbers. I wouldn’t pre-commit based on a hunch.

Batch tips

I ran two weekly batches during the trial. A few patterns helped:

- Small, stable batch size. I settled on chunks of 50 items. Concurrency was modest (10–12). Throughput was fine, and error handling stayed sane.

- Retry budget with backoff. One fast retry for transient issues, then a longer backoff, then park the item. No infinite loops.

- Idempotency tokens. Same input, same hash, same request key. If a retry landed, I didn’t pay twice or double-log.

- Pre-validation. I rejected inputs missing required fields before sending anything to the API. Boring, but it saved time.

The one friction was rate limit transparency. WaveSpeed’s dashboard showed usage clearly, but per-minute ceilings felt a bit opaque during peak. I solved it by adding a moving average guard in my adapter and treating 429s as signals, not errors. After that, the batches ran without drama.

Error handling

I kept error handling simple and observable, following REST API error handling best practices.

- Timeouts: I set a conservative client timeout. If a request ran long, I marked it for a slower retry lane. Long requests often completed on retry: the key was not clogging the fast lane.

- 4xx vs 5xx: 4xx got parked for manual review unless it was a rate limit. 5xx got a short retry burst. This avoided burning cycles on bad inputs.

- Guardrails in outputs: I asked the model to always include a confidence score. When the score dipped under 0.6, I sent the item to a human review queue. Simple triage, fewer regrets.

- Logging: I logged the raw prompt and response only for flagged cases, not everything. Privacy stayed cleaner, and my logs were smaller.

There were a few genuine model misses, confident but wrong labels on sarcasm. I didn’t try to prompt my way out of that. I added a sarcasm check as a separate lightweight pass and only then applied the main tagger. Two steps, less mess.

Example payload logic(non-code explanation)

Here’s the shape of what I sent, in plain language.

- System role: one sentence on the job. For example, “You are a careful classifier that tags marketing copy with a small set of labels and points to the words that drove the decision.”

- Context: a tiny glossary for any odd terms, plus two crisp examples, one clean, one tricky.

- Instruction: what to return and in what order (label, confidence, evidence), and the tone constraint (brief, no hedging language).

- Input: the raw text, untouched except for whitespace cleanup.

- Limits: a requested maximum length for the evidence and a ceiling on the number of labels.

On the adapter side, I generated a stable hash from the system role + examples + instruction. If that hash matched a previous request with the same input, I checked the cache. If not, I called WaveSpeed’s Nano Banana Pro endpoint with temperature and token caps set for the workload. I parsed the output by keys, not by position, so small phrasing changes didn’t break anything.

If the response lacked any required key, I didn’t ask the model to fix itself in place. I re-issued the prompt with a short reminder: “Return the three keys only.” One retry max. After that, it went to the review queue. This kept the system from looping itself into nonsense.

If the response lacked any required key, I didn’t ask the model to fix itself in place. I re-issued the prompt with a short reminder: “Return the three keys only.” One retry max. After that, it went to the review queue. This kept the system from looping itself into nonsense.