DeepSeek V4 vs Claude Opus 4.5 for Coding: Benchmark Comparison

Hey guys! Dora here. Last Sunday morning, I was hopping between my editor and a chat window to patch a flaky test, and the model kept inventing an import that didn’t exist. Not a big deal, just one of those paper cuts that slows your hands. I wanted to see if switching models would lighten the load, not just on wall-clock time, but on the mental effort it takes to trust what lands in my repo.

So I spent the last week (Jan 27–Feb 1, 2026) running a simple, repeatable loop: same tasks, same repo snapshots, alternating DeepSeek V4 and Claude Opus 4.5. This isn’t a lab study. It’s the kind of checking I’d do before wiring a model into CI. If you’re also weighing DeepSeek V4 vs Claude Opus 4.5 for coding, these are the notes I’d want to read before making the switch.

Current Benchmark Leaders

SWE-bench Verified Rankings

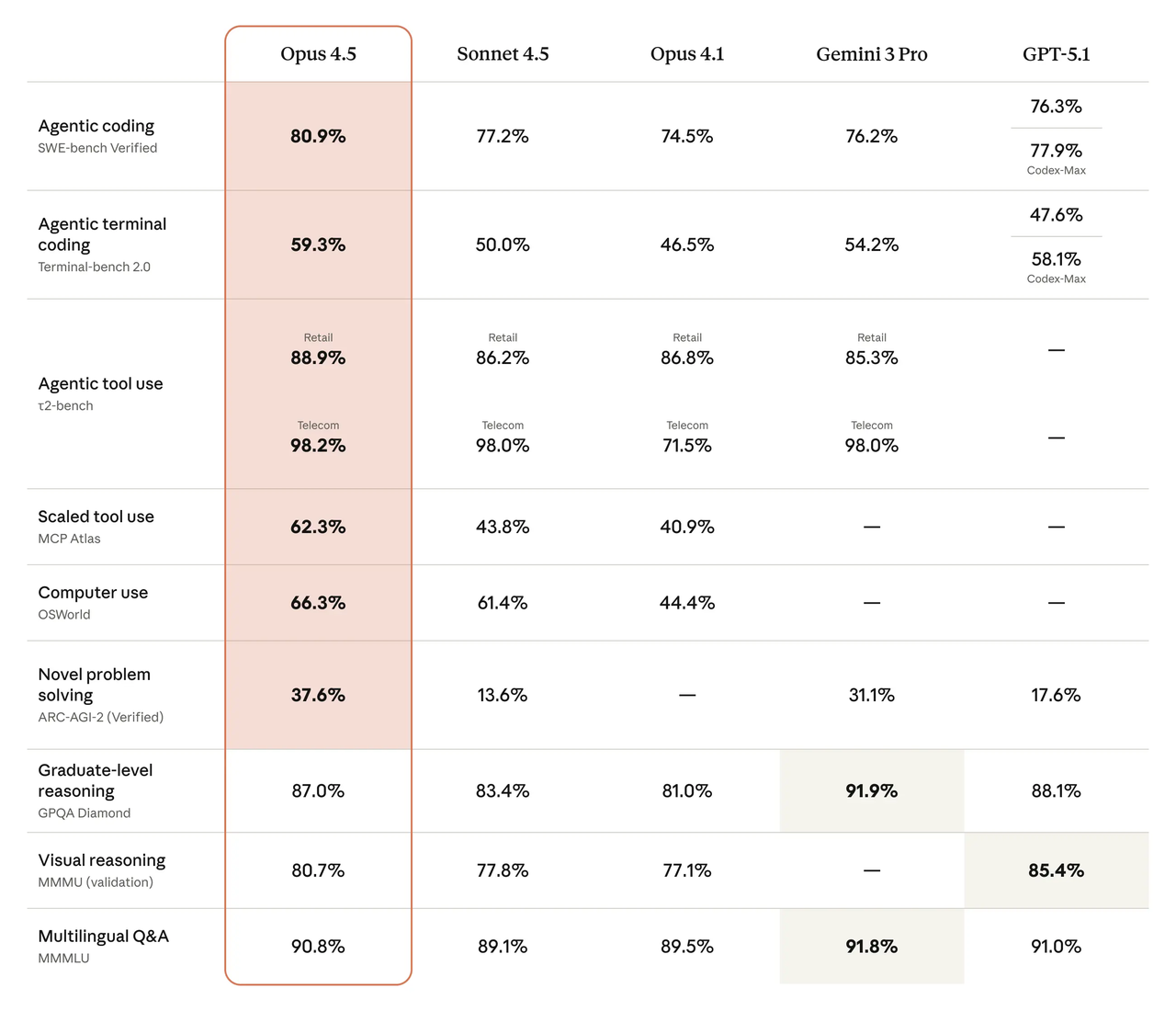

When I need a quick sense of where the wind is blowing, I start with public leaderboards. On the SWE-bench Verified leaderboard, both DeepSeek’s recent models and Anthropic’s newer Claude family sit near the top, with small gaps that change week to week as prompts, tools, and evaluation harnesses shift. What matters to me isn’t the single number, it’s the pattern: which models solve end-to-end issues consistently without tool crutches, and how sensitive they are to prompt tweaks.

My quick read, as of early February 2026:

- DeepSeek V4 shows strong movement on multi-file, repo-scale tasks when you give it all the context it asks for. It benefits from long prompts and explicit file maps.

- Claude Opus 4.5 puts up steady results and tends to regress less when I cut context or strip system messages. It’s not flashy, but the floor feels high.

HumanEval Scores

HumanEval is narrower, short coding problems with unit tests, but it’s a useful smell test for out-of-the-box code generation. Current summaries on the OpenAI HumanEval repo and community trackers like the EvalPlus leaderboard place both models in the top tier. I don’t anchor on exact pass@1 here: I watch for stability across seeds and how often a model leans on language tricks instead of writing direct, idiomatic code.

In my runs, DeepSeek V4 sometimes produced longer, more “explanatory” solutions, fine, but not always what I want in a tight diff. Claude Opus 4.5 more often returned compact functions that sailed through tests without extra commentary. Benchmarks hint at this difference: hands-on work made it obvious.

In my runs, DeepSeek V4 sometimes produced longer, more “explanatory” solutions, fine, but not always what I want in a tight diff. Claude Opus 4.5 more often returned compact functions that sailed through tests without extra commentary. Benchmarks hint at this difference: hands-on work made it obvious.

Where Each Model Excels

Long Context (DeepSeek)

If you want to reproduce this setup end to end, I put together a short DeepSeek V4 quick start guide that walks through the chat and API basics I’m relying on here.

I gave both models a real task: refactor a small FastAPI service that had quietly grown into a tangle. About 14 files mattered, plus a README that was… optimistic. I zipped the repo snapshot and fed file summaries along with a call graph I generated with a quick script. DeepSeek V4 felt calm with the sprawl. It kept track of cross-file effects and didn’t panic when I asked for a staged plan: interfaces first, tests second, handlers last. The surprising part was how well it used structural hints, when I handed it a simple “map” of filenames and responsibilities, it stopped suggesting edits to files that didn’t exist.

Two practical notes:

- It needed breathing room. When I trimmed context too aggressively, it got cautious and started asking to see files I’d already provided. Once I gave it the full picture, it moved cleanly.

- It handled “What am I missing?” prompts well. I’d ask for edge cases based on the test suite and it surfaced three I’d forgotten: empty auth headers, a broken pagination param, and a slow path in error logging.

This didn’t save time at first. The initial setup, packaging context, writing a short file map, took maybe 20 minutes. But after a few runs, the mental load dropped. I wasn’t juggling as many “did I tell it X?” worries. If your coding day looks like large diffs spread across multiple modules, DeepSeek V4 has a steady hand when the context gets wide.

Code Reliability (Claude)

Claude Opus 4.5 won me over in a different way: fewer sharp edges. When I asked for a minimal patch, it gave me one. When I asked for a three-step plan with a dry run, it didn’t hallucinate commands. And it resisted the urge to “improve” things I didn’t ask about.

Claude Opus 4.5 won me over in a different way: fewer sharp edges. When I asked for a minimal patch, it gave me one. When I asked for a three-step plan with a dry run, it didn’t hallucinate commands. And it resisted the urge to “improve” things I didn’t ask about.

A small example: I had a flaky test around timezone math. My prompt was blunt: “Fix the test without changing production code, and explain the root cause in one sentence.” Claude suggested parameterizing the tz fixture and adjusting a single assertion to use an aware datetime. It passed on the first try. DeepSeek also fixed it, but it tried to refactor the helper in the same breath. Not wrong, just heavier than I wanted.

Over five tasks, Claude’s diffs were consistently smaller. Fewer imports appeared from nowhere. And when it did guess, it left a tidy note: “Assuming pytz is available: if not, replace with zoneinfo.” That kind of hedged suggestion is easy to audit.

Two limits showed up:

- Claude played it safe on performance. In one case, it chose clarity over a simple O(n) improvement that DeepSeek pointed out immediately. I had to nudge it: “Optimize under the same constraints.” It did, but it wouldn’t jump first.

- With very long prompts, I hit the ceiling faster. Summaries helped, but DeepSeek felt less cramped when I wanted the model to “hold the whole app in its head.”

If your day is mostly surgical patches, test repairs, and glue code around APIs, Claude Opus 4.5 keeps changes lean and predictable. That, in practice, is reliability I can feel.

How to Run Your Own Comparison

If you’re on the fence about DeepSeek V4 vs Claude Opus 4.5 for coding, a short, boring experiment tells you more than any leaderboard. Here’s the loop I used, tweak freely.

If you’re on the fence about DeepSeek V4 vs Claude Opus 4.5 for coding, a short, boring experiment tells you more than any leaderboard. Here’s the loop I used, tweak freely.

1. Pick tasks that echo your week

- One repo chore (refactor or module extraction)

- One flaky test

- One API integration change

- One small algorithm tweak

Keep each under 45 minutes. Timebox the interaction, not just the model’s generation.

2. Freeze inputs

- Pin a specific commit. Don’t move the target while you test.

- Decide what the model can see: full files vs. excerpts. Write a short file map if you’re passing excerpts.

- Use the same system prompt style for both models. I keep it plain: “You are a helpful coding assistant. Prefer minimal diffs and runnable code.”

3. Write prompts you can reuse

- Task: “Here’s the goal, constraints, and tests.”

- Context: file list or summaries, plus known pitfalls.

- Output format: “Propose a plan (bullets), then the diff, then a one-sentence risk note.”

4. Capture the same signals for both

- Attempts to passing tests (1–N)

- Lines changed in diff (rough is fine)

- Notes you had to write for the model (“Stop editing X”, “Use existing helper Y”)

- Time to first green test

5. Guard for leakage

- Disable tools unless you plan to compare tool use. If one model shells out and the other doesn’t, you’re not testing the same thing.

- If you allow retrieval, point both at the same docs snapshot.

6. Sanity-check with benchmarks, don’t worship them

- Glance at SWE-bench Verified to see if your results look wildly off. If they do, check your prompts before you blame the model.

- For bite-size problems, skim HumanEval samples on the official repo or run a few locally. Consistency over a handful of seeds is more revealing than a single run.

7. Optional: add a tiny rubric

Score 1–5 on:

- Diff minimalism (did it touch only what it needed?)

- Fixture discipline (tests, env, dependencies)

- Recovery behavior (does it self-correct when you point out a miss?)

- Explanation quality (one or two clear sentences, not a blog post)

What I watch for in practice

- Does the model respect constraints the first time?

- When it’s wrong, is it wrong in a way that’s easy to spot?

- Do I feel safe letting it propose a patch while I’m context-switching?

This worked for me, your mileage may vary. The point isn’t to crown a winner: it’s to see which one reduces your cognitive drag with your code, on your schedule.