FLUX.1 Kontext Dev Ultra Fast is an open-source image-to-image model, with open weights and code, that edits images from text prompts. It is served through a ready-to-use REST inference API with no cold starts, priced at $0.02 per image.

Your request costs $0.02 per run.

For $1, you can run this model approximately 50 times.

README

FLUX Kontext Dev Ultra Fast — wavespeed-ai/flux-kontext-dev-ultra-fast

FLUX.1 Kontext Dev Ultra Fast is a low-latency image-to-image editing model optimized for rapid iteration. Provide a source image and a natural-language edit instruction, and it performs targeted or global edits while aiming to preserve the original context when requested. It suits interactive workflows, batch revisions, and quick creative exploration.

Key capabilities

- Ultra-fast instruction-based image editing from a single input image

- Strong preservation of unedited content when you explicitly specify what must remain unchanged

- Works well for iterative edits: refine the same image across multiple passes

- Great for practical edits: color changes, background swaps, text edits, cleanup, and light style transforms

Typical use cases

- Fast retouching and cleanup (lighting/exposure, minor imperfections)

- Color/material edits (e.g., product variants)

- Background replacement for marketing creatives

- Text replacement on posters, packaging, UI mockups

- Rapid style experimentation with minimal turnaround time

Pricing

$0.02 per image.

Cost per run = num_images × $0.02

Example: num_images = 4 → $0.08
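The pricing rule above is simple enough to check in a few lines. The sketch below is illustrative only: the function names `cost_per_run` and `runs_per_budget` are hypothetical helpers and not part of any API, and the only value taken from this page is the $0.02 per-image price. `Decimal` is used to avoid floating-point rounding on currency amounts.

```python
from decimal import Decimal

# Price per generated image, taken from the Pricing section.
PRICE_PER_IMAGE_USD = Decimal("0.02")


def cost_per_run(num_images: int) -> Decimal:
    """Cost of one run that generates `num_images` images."""
    if num_images < 1:
        raise ValueError("num_images must be at least 1")
    return PRICE_PER_IMAGE_USD * num_images


def runs_per_budget(budget_usd: str, num_images: int = 1) -> int:
    """Number of whole runs that fit into a budget given in USD."""
    return int(Decimal(budget_usd) // cost_per_run(num_images))


print(cost_per_run(4))          # cost of a run with four images
print(runs_per_budget("1"))     # single-image runs that fit into $1
```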

Inputs and outputs

Input:

- One source image (upload or public URL)

- One edit instruction (prompt)

Output:

- One or more edited images (controlled by num_images)

Parameters

- prompt: Edit instruction describing what to change and what to keep

- image: Source image

- width / height: Output resolution

- num_inference_steps: More steps can improve fidelity but increase latency

- guidance_scale: Higher values follow the prompt more strongly; too high may over-edit

- num_images: Number of variations generated per run

- seed: Fixed value for reproducibility; -1 for random

- output_format: jpeg or png

- enable_base64_output: Return the image as Base64-encoded data instead of a URL (API only)

- enable_sync_mode: Wait for generation and return results directly (API only)
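As a rough illustration of how these parameters might be assembled for a request, the sketch below builds a JSON-serializable dictionary from the parameter names listed above. It makes several assumptions: `build_payload` is a hypothetical helper, the flat JSON layout is a guess and not a confirmed request schema, and no endpoint, authentication, or default values are implied. Optional parameters are left out of the payload unless you set them, so the service's own defaults would apply.

```python
import json
from typing import Any, Optional

# Values listed for output_format in the Parameters section.
ALLOWED_OUTPUT_FORMATS = {"jpeg", "png"}


def build_payload(
    prompt: str,
    image: str,
    *,
    width: Optional[int] = None,
    height: Optional[int] = None,
    num_inference_steps: Optional[int] = None,
    guidance_scale: Optional[float] = None,
    num_images: Optional[int] = None,
    seed: int = -1,  # -1 means random, per the Parameters section
    output_format: Optional[str] = None,
    enable_base64_output: Optional[bool] = None,
    enable_sync_mode: Optional[bool] = None,
) -> dict[str, Any]:
    """Assemble a request body (assumed flat JSON layout; hypothetical helper)."""
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    if output_format is not None and output_format not in ALLOWED_OUTPUT_FORMATS:
        raise ValueError("output_format must be 'jpeg' or 'png'")
    if num_images is not None and num_images < 1:
        raise ValueError("num_images must be at least 1")

    payload: dict[str, Any] = {"prompt": prompt, "image": image, "seed": seed}
    optional = {
        "width": width,
        "height": height,
        "num_inference_steps": num_inference_steps,
        "guidance_scale": guidance_scale,
        "num_images": num_images,
        "output_format": output_format,
        "enable_base64_output": enable_base64_output,
        "enable_sync_mode": enable_sync_mode,
    }
    # Only send parameters the caller explicitly set.
    payload.update({k: v for k, v in optional.items() if v is not None})
    return payload


example = build_payload(
    "Change the shirt color to navy blue. Keep fabric texture and wrinkles consistent.",
    "https://example.com/input.png",  # placeholder URL
    num_images=2,
    seed=42,
    output_format="png",
)
print(json.dumps(example, indent=2))
```

Validating locally before sending catches typos such as an unsupported output format without spending a run.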

Prompting guide

Use a clear “preserve + edit + constraints” structure:

Template: Keep [what must stay]. Change [what to edit]. Ensure [constraints]. Match [lighting/shadows/style consistency].
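If you generate prompts programmatically, the template can be filled in with a small helper. This is a minimal sketch, not a required format: `build_edit_prompt` and its argument names are hypothetical, and the model accepts any free-form instruction.

```python
from typing import Optional


def build_edit_prompt(
    keep: str,
    change: str,
    ensure: Optional[str] = None,
    match: Optional[str] = None,
) -> str:
    """Fill the "Keep / Change / Ensure / Match" template; empty clauses are skipped."""
    clauses = [("Keep", keep), ("Change", change), ("Ensure", ensure), ("Match", match)]
    sentences = []
    for verb, text in clauses:
        if text and text.strip():
            # Drop stray whitespace and trailing periods so each sentence ends with one period.
            sentences.append(f"{verb} {text.strip().rstrip('.')}.")
    return " ".join(sentences)


print(build_edit_prompt(
    keep="the subject's face and pose unchanged",
    change="the background to a clean studio backdrop",
    match="the lighting and shadow direction",
))
```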

Example prompts

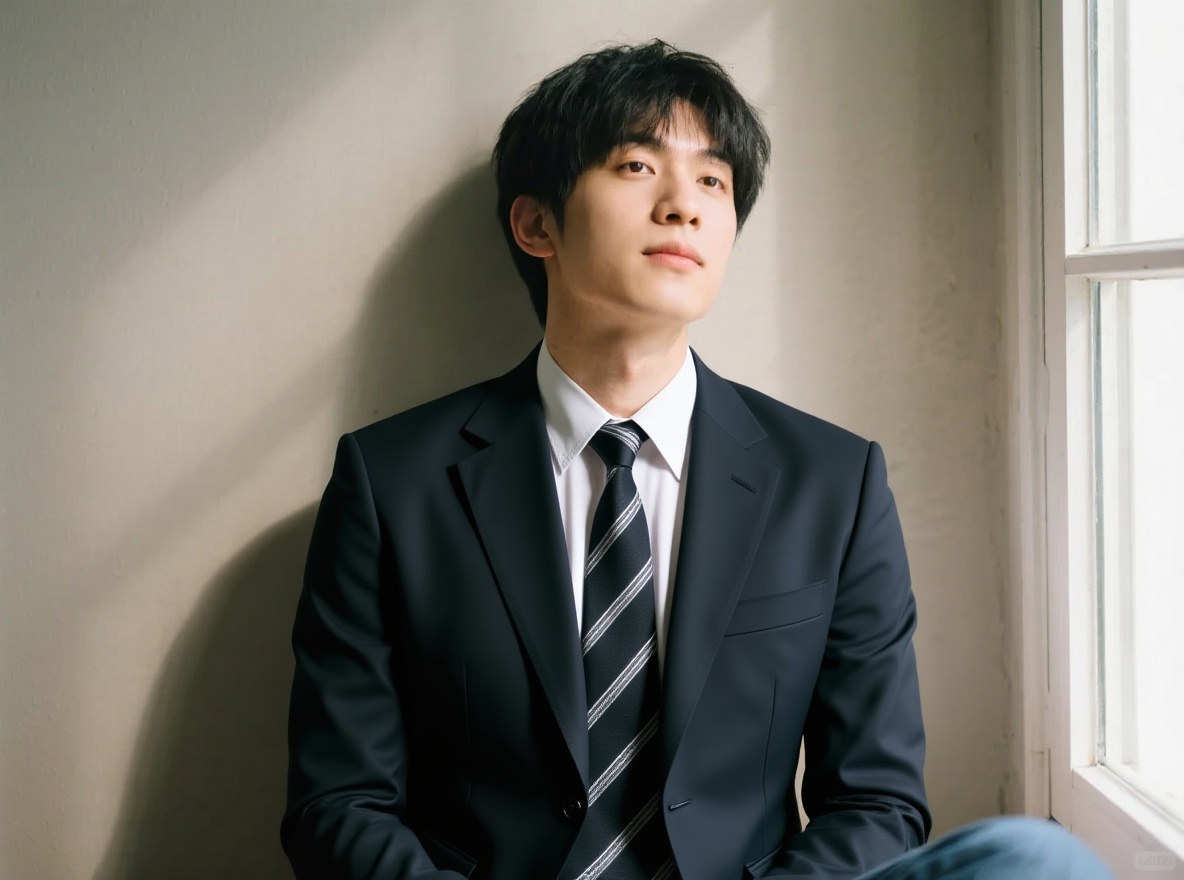

- Keep the subject’s face and pose unchanged. Replace the background with a clean studio backdrop. Match the lighting and shadow direction.

- Change the shirt color to navy blue. Keep fabric texture and wrinkles consistent.

- Replace the label text with “WaveSpeedAI”, keeping the same font style, size, and perspective. Do not modify anything else.

- Remove the objects on the table. Keep the table surface intact and realistic.

- Apply a soft cinematic grade with slightly warmer tones, without changing composition or identity.

Best practices

- Do one change per run for maximum control, then iterate.

- If the edit drifts, lower guidance_scale and strengthen the preserve clause.

- Fix seed for reproducible comparisons and stable iteration.

- Match output width/height to the input aspect ratio to avoid distortion.
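To apply the last point, you can derive the output size from the input image's dimensions. The sketch below is one possible approach under stated assumptions: `fit_to_aspect` is a hypothetical helper, and rounding both sides to a multiple of 8 is an assumption about typical diffusion-model size constraints, not a documented requirement of this model. Rounding can shift the aspect ratio slightly.

```python
def fit_to_aspect(
    input_width: int,
    input_height: int,
    target_width: int,
    multiple: int = 8,  # assumed size granularity; adjust or set to 1 if not needed
) -> tuple[int, int]:
    """Return (width, height) close to target_width that keeps the input aspect ratio."""
    if min(input_width, input_height, target_width, multiple) < 1:
        raise ValueError("all arguments must be positive integers")
    # Snap the requested width to the nearest allowed multiple.
    width = max(multiple, round(target_width / multiple) * multiple)
    # Scale the height by the input aspect ratio, then snap it as well.
    exact_height = width * input_height / input_width
    height = max(multiple, round(exact_height / multiple) * multiple)
    return width, height


# A 1920x1080 source edited at a 1024-pixel output width.
print(fit_to_aspect(1920, 1080, 1024))
```

The resulting pair can then be passed as width and height alongside a fixed seed when comparing edits.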