Introducing Hunyuan Avatar on WaveSpeedAI: Transform Any Image Into a Talking or Singing Video

Creating professional talking avatar videos has traditionally required expensive equipment, skilled actors, and hours of post-production work. Today, we’re thrilled to announce that Hunyuan Avatar is now available on WaveSpeedAI, bringing Tencent’s cutting-edge audio-driven human animation technology to creators, marketers, and developers worldwide.

With a single image and an audio clip, you can generate 480p or 720p videos up to 120 seconds long through one REST API call, with no cold starts and pricing that starts at $0.15 per 5 seconds of video.

What is Hunyuan Avatar?

Hunyuan Avatar (HunyuanVideo-Avatar) is a high-fidelity audio-driven human animation model jointly developed by Tencent’s Hunyuan Team and Tencent Music’s Tianqin Lab. Built on an innovative multimodal diffusion transformer (MM-DiT) architecture, it represents a significant leap forward in digital human generation technology.

Unlike earlier talking-head methods such as Wav2Lip or SadTalker, which focused primarily on modifying the mouth region, Hunyuan Avatar generates complete, dynamic animations that include natural head movements, expressive facial animation, and even full-body motion. The model has been benchmarked against state-of-the-art methods including Hallo, EMO, and EchoMimic, and is reported to deliver higher video quality, more natural facial expressions, and more accurate lip synchronization.

What sets Hunyuan Avatar apart is its ability to handle multi-style avatars—from photorealistic humans to cartoon characters, 3D-rendered figures, and even anthropomorphic characters—at multiple scales including portrait, upper-body, and full-body compositions.

Key Features

- Single Image to Video: Transform any portrait image into a dynamic talking or singing video with just one reference photo

- High-Fidelity Lip Sync: Advanced audio analysis ensures precise synchronization between speech and lip movements

- Emotion Transfer and Control: The Audio Emotion Module (AEM) extracts emotional cues from reference images and transfers them to generated videos for expressive, emotionally authentic content

- Multi-Character Support: Generate dialogue videos featuring multiple characters with independent audio injection through the Face-Aware Audio Adapter (FAA)

- Character Consistency: Proprietary character image injection technology maintains strong identity preservation across different poses and expressions

- Multi-Style Generation: Works with photorealistic images, anime, cartoon, 3D-rendered, and artistic styles

- Flexible Resolution: Generate videos in 480p or 720p quality

- Extended Duration: Create videos up to 120 seconds long

- Speaking and Singing: Supports both speech-driven and music-driven animations

Real-World Use Cases

E-Commerce and Product Marketing

Create compelling product demonstration videos without hiring actors or setting up studios. E-commerce businesses can generate virtual hosts to introduce products, conduct live streaming simulations, or produce multilingual marketing content at scale. Major platforms across Tencent Music Entertainment Group are already using this technology in production.

Content Creation and Social Media

YouTubers, TikTok creators, and social media marketers can produce engaging avatar-based content quickly. Whether you need a consistent virtual presenter for your channel or want to create character-driven narratives, Hunyuan Avatar delivers professional results without the overhead of traditional video production.

Corporate Training and Education

Develop training materials featuring consistent virtual instructors who can deliver content in multiple languages. Educational institutions can create engaging lecture videos that maintain student attention through dynamic, expressive presentations.

Entertainment and Gaming

Game developers and entertainment studios can prototype character animations, create promotional content, or generate in-game cutscenes. The multi-character dialogue capability opens possibilities for creating interactive storytelling experiences.

Accessibility and Localization

Transform existing audio content into accessible video formats. Localize video content by generating new talking head videos in different languages while maintaining consistent character representation across regions.

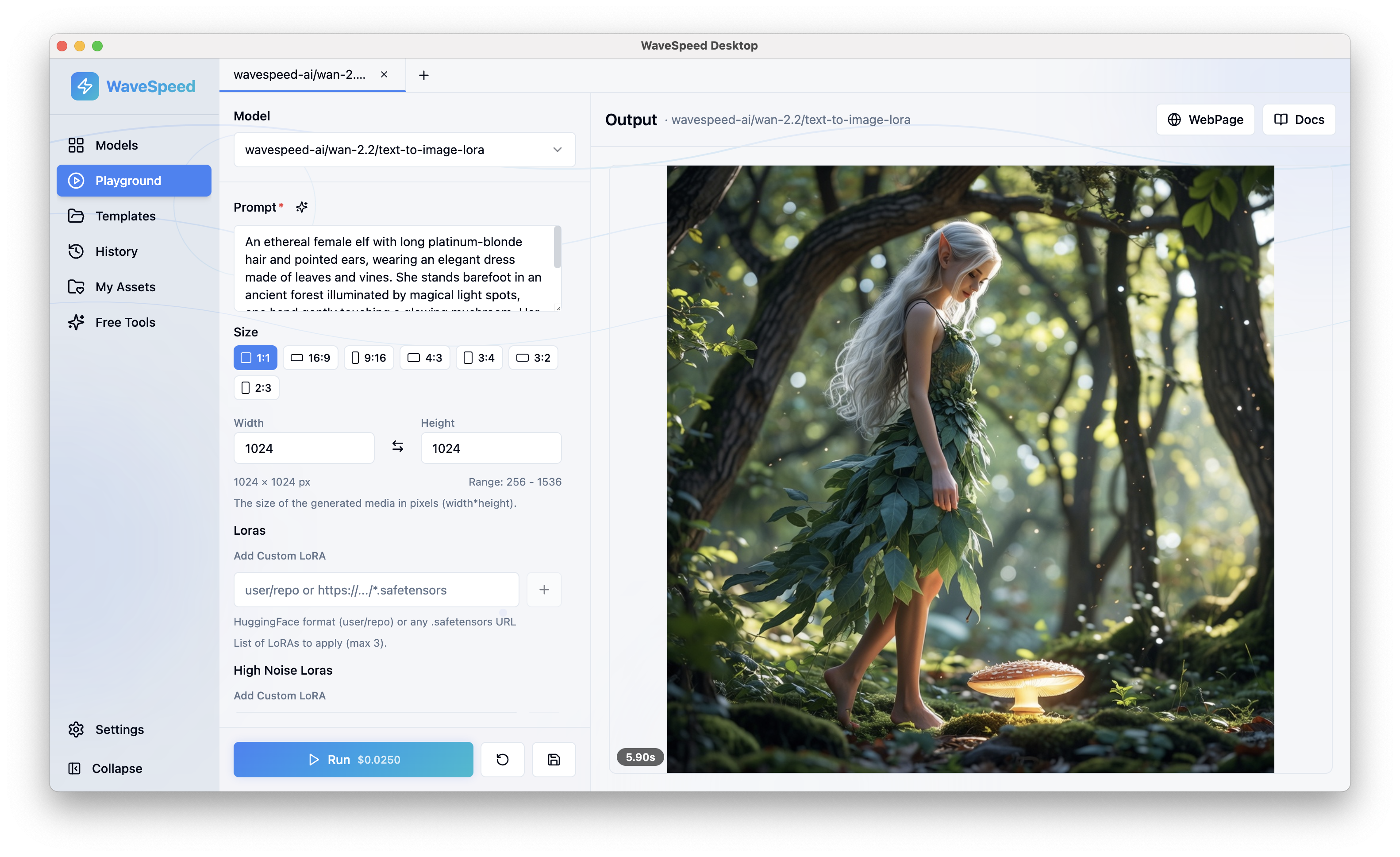

Getting Started with WaveSpeedAI

Integrating Hunyuan Avatar into your workflow is straightforward with WaveSpeedAI’s REST API. Here’s what makes our implementation stand out:

- No Cold Starts: Your API calls execute immediately without waiting for model initialization, which is critical for production applications where latency matters.

- Affordable Pricing: Starting at just $0.15 per 5 seconds of generated video, Hunyuan Avatar on WaveSpeedAI is accessible for projects of any scale.

- Simple Integration: Our REST API follows standard patterns, making it easy to integrate with your existing applications, whether you’re building a SaaS product, a content pipeline, or a creative tool.

- Reliable Performance: WaveSpeedAI’s infrastructure ensures consistent, high-quality output for every generation request.
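For budgeting, the starting price quoted above can be turned into a rough per-video estimate. The sketch below is illustrative only: it assumes billing rounds up to whole 5-second blocks and that the $0.15 starting rate applies, neither of which is confirmed here (a higher resolution may be priced differently). The function name `estimate_cost_usd` is our own.

```python
import math

PRICE_PER_BLOCK_CENTS = 15   # $0.15 starting price quoted above
BLOCK_SECONDS = 5            # price is quoted per 5 seconds of video
MAX_DURATION_SECONDS = 120   # maximum video length quoted above


def estimate_cost_usd(duration_seconds: float) -> float:
    """Estimate the cost of one generated video at the starting rate.

    Assumption (not confirmed by the pricing text): partial blocks are
    rounded up to a whole 5-second block.
    """
    if duration_seconds <= 0:
        raise ValueError("duration must be positive")
    if duration_seconds > MAX_DURATION_SECONDS:
        raise ValueError("duration exceeds the 120-second limit")
    blocks = math.ceil(duration_seconds / BLOCK_SECONDS)
    # Work in integer cents to avoid floating-point drift.
    return blocks * PRICE_PER_BLOCK_CENTS / 100


# A full-length 120-second video is 24 blocks, so 3.60 USD at this rate.
full_length_cost = estimate_cost_usd(120)
```

Under these assumptions, a 12-second clip would be billed as three blocks, or $0.45. Check the model page for the actual billing rules before relying on these numbers.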

To start generating avatar videos, you’ll need:

- A reference image (portrait, upper-body, or full-body)

- An audio file (speech or music)

- Optional: An emotion reference image for fine-grained emotional control

Visit the Hunyuan Avatar model page to access the API documentation and start building.
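To make the inputs listed above concrete, here is a minimal sketch of how a request might be assembled in Python using only the standard library. Everything specific in it is an assumption for illustration: the endpoint URL is a placeholder, the JSON field names (`image`, `audio`, `resolution`, `emotion_image`) are hypothetical, and bearer-token authentication is a guess. The real values are in the API documentation on the model page.

```python
import json
import urllib.request

# Placeholder endpoint, NOT the real WaveSpeedAI URL. Take the actual
# endpoint from the API documentation on the model page.
HYPOTHETICAL_ENDPOINT = "https://api.example.com/hunyuan-avatar"

ALLOWED_RESOLUTIONS = ("480p", "720p")  # the two resolutions named in this post


def build_avatar_request(api_key, image_url, audio_url,
                         resolution="480p", emotion_image_url=None):
    """Build (but do not send) a POST request for one avatar video.

    All JSON field names and the auth header format are hypothetical.
    """
    if resolution not in ALLOWED_RESOLUTIONS:
        raise ValueError(f"resolution must be one of {ALLOWED_RESOLUTIONS}")
    payload = {"image": image_url, "audio": audio_url, "resolution": resolution}
    if emotion_image_url is not None:
        # Optional emotion reference image for emotional control.
        payload["emotion_image"] = emotion_image_url
    return urllib.request.Request(
        HYPOTHETICAL_ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Once the real endpoint and field names are substituted, the request could be sent with `urllib.request.urlopen(request)`. Validating the resolution before sending avoids paying a network round trip for a request that cannot succeed.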

The Technical Edge

Hunyuan Avatar achieves its impressive results through three key innovations:

The Character Image Injection Module replaces conventional addition-based conditioning, eliminating the mismatch between training and inference that plagued earlier models. This ensures your generated character maintains consistent identity even during dynamic movements.

The Audio Emotion Module (AEM) provides fine-grained control over the emotional expression in generated videos. By analyzing an emotion reference image, the model can transfer specific emotional cues to create more authentic, contextually appropriate expressions.

The Face-Aware Audio Adapter (FAA) uses latent-level face masks to isolate audio-driven characters, enabling independent audio injection for multi-character scenarios—a capability that significantly expands creative possibilities.
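The masking idea behind the Face-Aware Audio Adapter can be illustrated with a toy example. This is a simplified sketch, not the model's actual implementation: it uses a tiny grid of numbers in place of a video latent, a single number in place of each character's audio features, and plain addition in place of whatever injection mechanism the real diffusion transformer uses. The function name `inject_audio` is our own.

```python
def inject_audio(latent, face_masks, audio_signals):
    """Add each character's audio signal only inside that character's face mask.

    latent:        2D grid (list of rows) of floats, a toy stand-in for a latent
    face_masks:    one 2D grid of 0/1 values per character, same shape as latent
    audio_signals: one float per character, a toy stand-in for audio features
    """
    out = [row[:] for row in latent]  # copy so the input is left unchanged
    for mask, signal in zip(face_masks, audio_signals):
        for r, mask_row in enumerate(mask):
            for c, inside in enumerate(mask_row):
                # Cells outside the mask (inside == 0) receive nothing.
                out[r][c] += inside * signal
    return out


latent = [[0.0, 0.0, 0.0, 0.0]]
left_face = [[1, 1, 0, 0]]    # character A occupies the left half
right_face = [[0, 0, 1, 1]]   # character B occupies the right half
mixed = inject_audio(latent, [left_face, right_face], [0.5, -0.25])
# mixed == [[0.5, 0.5, -0.25, -0.25]]
```

Because each mask is zero outside its own character's face, one character's audio never leaks into the other's region, which is the property that makes independent multi-character dialogue possible.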

Conclusion

Hunyuan Avatar on WaveSpeedAI represents a new frontier in AI-powered video generation. By combining Tencent’s state-of-the-art research with WaveSpeedAI’s optimized inference infrastructure, we’re making professional-quality avatar videos accessible to everyone.

Whether you’re a solo creator looking to add production value to your content, a marketing team seeking efficient ways to produce localized campaigns, or a developer building the next generation of interactive applications, Hunyuan Avatar provides the tools you need.

Ready to bring your images to life? Try Hunyuan Avatar on WaveSpeedAI today and discover what’s possible when cutting-edge AI meets reliable, affordable infrastructure.