Introducing WaveSpeedAI LTX-2 19B Image-to-Video LoRA on WaveSpeedAI

The future of AI-powered video creation just got a major upgrade. Today, we’re thrilled to announce the arrival of LTX-2 19B Image-to-Video LoRA on WaveSpeedAI—a groundbreaking model that transforms static images into dynamic, high-quality videos with synchronized audio and unprecedented customization through LoRA adapters.

This isn’t just another image-to-video model. LTX-2 represents a fundamental leap forward as the first DiT-based (Diffusion Transformer) audio-video foundation model, combining cutting-edge architecture with practical, production-ready features that creators, marketers, and developers have been waiting for.

What is LTX-2 19B Image-to-Video LoRA?

At its core, LTX-2 19B is a 19-billion-parameter diffusion transformer that animates still images and generates synchronized audio in a single pass. Unlike pipelines that require separate audio generation and alignment steps, LTX-2 produces motion, dialogue, ambient sound, and music together, so the audio is generated in step with the visuals rather than aligned afterward.

The LoRA (Low-Rank Adaptation) variant takes this capability further by enabling you to apply up to three custom LoRA adapters during generation. This means you can inject specific visual styles, maintain consistent character identities across projects, or align outputs with precise brand guidelines—all without retraining the entire 19-billion-parameter model.

Think of LoRAs as specialized “style lenses” that modify the model’s output. Train a LoRA once on your brand’s visual identity, product designs, or character artwork, then apply it to every generation to ensure perfect consistency. This approach dramatically reduces computational overhead compared to full model fine-tuning while delivering professional-grade customization.

Key Features That Set LTX-2 Apart

Synchronized Audio-Video Generation

The standout innovation is simultaneous audio-video synthesis. When you animate an image of a person speaking, the model generates appropriate lip movements, dialogue, ambient environmental sounds, and background music—all synchronized perfectly with the visual motion. This eliminates the tedious post-production work of aligning separately generated audio tracks.

Triple LoRA Support

Apply up to three LoRA adapters per generation, each with adjustable scale weights from 0 to 4. Whether you’re blending a character LoRA with a style LoRA and a lighting LoRA, or combining brand-specific adapters for different product lines, the system gives you fine-grained control over how each adapter influences the final output.
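The limits described above (at most three adapters, each with a scale between 0 and 4) are easy to check before submitting a job. The following is a minimal sketch, not WaveSpeedAI's own validation: the `LoraSpec` class, its `path` and `scale` field names, and the `validate_loras` helper are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass

# Limits taken from the feature description above.
MAX_LORAS = 3
MIN_SCALE, MAX_SCALE = 0.0, 4.0


@dataclass(frozen=True)
class LoraSpec:
    """One adapter to apply. Field names are hypothetical, not the platform's schema."""
    path: str           # URL of the LoRA weights file
    scale: float = 1.0  # how strongly the adapter influences the output


def validate_loras(loras):
    """Return the adapters as a list if they respect the limits, else raise ValueError."""
    loras = list(loras)
    if len(loras) > MAX_LORAS:
        raise ValueError(f"at most {MAX_LORAS} LoRAs per generation, got {len(loras)}")
    for lora in loras:
        if not MIN_SCALE <= lora.scale <= MAX_SCALE:
            raise ValueError(
                f"scale {lora.scale} for {lora.path} is outside [{MIN_SCALE}, {MAX_SCALE}]"
            )
    return loras
```

Checking locally like this catches an out-of-range scale or a fourth adapter before any paid run is started.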

Flexible Resolution and Duration

Choose from 480p, 720p, or 1080p output resolutions to balance quality against rendering cost. Generate videos ranging from 5 to 20 seconds in length—long enough for engaging social media content, product demos, or creative experiments without unnecessary computational overhead.

High-Fidelity Motion Preservation

The model excels at maintaining the composition, lighting, and subject framing of your input image while adding natural, temporally consistent motion. Feed it a portrait, and it won’t arbitrarily change the subject’s appearance or background—it simply brings the scene to life.

Production-Ready Performance

With WaveSpeedAI’s infrastructure, you get enterprise-grade reliability: no cold starts, predictable pricing, and REST API access for seamless integration into existing workflows. Whether you’re generating one video or scaling to thousands, the platform handles the infrastructure complexity.

Real-World Use Cases

Custom Character Animation

Content creators and animation studios can train character LoRAs on specific designs, then animate those characters across dozens or hundreds of scenes while maintaining perfect visual consistency. Imagine producing an entire animated series where every character looks identical across episodes—without manual frame-by-frame correction.

Brand Content at Scale

Marketing teams can train LoRAs on brand style guides, product catalogs, and visual identity documents. Every generated video automatically adheres to color palettes, design language, and aesthetic standards, ensuring brand consistency across campaigns without bottlenecking creative output through manual review cycles.

Product Visualization

E-commerce platforms can animate product photography with trained LoRAs that emphasize specific material properties, lighting conditions, or presentation styles. A single product image becomes dozens of unique video variations showcasing different angles, contexts, or use scenarios.

Artistic Style Transfer

Artists and designers can apply painterly, anime, photorealistic, or other aesthetic LoRAs to bring static artwork to life. A concept art sketch becomes a moving animation that preserves the original artistic intent while adding dynamic storytelling elements.

Educational Content

Educators can animate historical photographs, scientific diagrams, or instructional illustrations with synchronized narration and ambient audio, creating engaging multimedia learning materials from existing static assets.

Getting Started on WaveSpeedAI

Using LTX-2 19B Image-to-Video LoRA on WaveSpeedAI is straightforward:

- Upload your starting image: Either drag and drop a file or provide a public URL to the image you want to animate.

- Write a descriptive prompt: Detail the motion, action, style, and audio elements you want. The more specific your prompt, the better the model can align output with your vision. For example: “A woman turns her head toward the camera and smiles while soft ambient music plays in the background.”

- Add LoRA adapters (optional): Click “+ Add Item” to include custom LoRA weights. Provide the URL to each LoRA file and set the scale multiplier (typically 0.5-2.0 for most applications).

- Configure resolution and duration: Select 480p for quick drafts, 720p for balanced quality, or 1080p for final delivery. Choose video length from 5 to 20 seconds based on your content needs.

- Run the generation: Click the run button and let WaveSpeedAI’s infrastructure handle the rest. No cold starts means your video begins processing immediately.

The model outputs a video file with embedded synchronized audio, ready for download or further post-production.
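For readers who plan to script these steps rather than use the web form, the inputs can be collected into a single JSON-style payload. This is only a sketch under stated assumptions: the field names (`image`, `prompt`, `resolution`, `duration`, `loras`, `path`, `scale`) and the `build_payload` helper are hypothetical, and the example URLs are placeholders. Consult the WaveSpeedAI API documentation for the actual request schema and endpoint.

```python
import json

# Options listed in the steps above.
ALLOWED_RESOLUTIONS = ("480p", "720p", "1080p")
MIN_DURATION_S, MAX_DURATION_S = 5, 20
MAX_LORAS = 3


def build_payload(image_url, prompt, resolution="720p", duration=5, loras=()):
    """Assemble a request body; `loras` is a sequence of (url, scale) pairs.

    All key names below are assumptions made for illustration.
    """
    if resolution not in ALLOWED_RESOLUTIONS:
        raise ValueError(f"resolution must be one of {ALLOWED_RESOLUTIONS}")
    if not MIN_DURATION_S <= duration <= MAX_DURATION_S:
        raise ValueError(f"duration must be {MIN_DURATION_S}-{MAX_DURATION_S} seconds")
    loras = list(loras)
    if len(loras) > MAX_LORAS:
        raise ValueError(f"at most {MAX_LORAS} LoRAs per generation")
    return {
        "image": image_url,
        "prompt": prompt,
        "resolution": resolution,
        "duration": duration,
        "loras": [{"path": url, "scale": scale} for url, scale in loras],
    }


if __name__ == "__main__":
    payload = build_payload(
        "https://example.com/portrait.png",  # placeholder image URL
        "A woman turns her head toward the camera and smiles "
        "while soft ambient music plays in the background.",
        resolution="480p",  # quick draft
        duration=5,
        loras=[("https://example.com/brand-style.safetensors", 1.0)],
    )
    print(json.dumps(payload, indent=2))
```

Keeping the payload construction separate from the HTTP call makes it straightforward to validate inputs in tests without spending credits.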

Pricing That Scales With Your Needs

LTX-2 19B Image-to-Video LoRA uses transparent, usage-based pricing that scales with resolution and duration:

- 480p, 5s: $0.075 per run

- 720p, 5s: $0.10 per run

- 1080p, 5s: $0.15 per run

- 480p, 10s: $0.15 per run

- 720p, 10s: $0.20 per run

- 1080p, 10s: $0.30 per run

- 720p, 20s: $0.40 per run

- 1080p, 20s: $0.60 per run

The LoRA-enabled version carries a 25% premium over the standard LTX-2 variant to account for the additional computational overhead of adapter loading and blending. For most use cases, the customization capability easily justifies the incremental cost.
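To budget a batch, the price list above can be turned into a small lookup. The sketch below contains only the combinations that are listed, so an unlisted pair raises an error instead of guessing a price. The helper names `batch_cost` and `implied_standard_price` are hypothetical, and the standard-variant figure is derived from the stated 25% premium, not quoted from a price sheet.

```python
from decimal import Decimal

# Per-run prices in USD, copied from the list above: (resolution, seconds) -> price.
LORA_PRICE_USD = {
    ("480p", 5): Decimal("0.075"),
    ("720p", 5): Decimal("0.10"),
    ("1080p", 5): Decimal("0.15"),
    ("480p", 10): Decimal("0.15"),
    ("720p", 10): Decimal("0.20"),
    ("1080p", 10): Decimal("0.30"),
    ("720p", 20): Decimal("0.40"),
    ("1080p", 20): Decimal("0.60"),
}
LORA_PREMIUM = Decimal("1.25")  # LoRA price = standard price * 1.25


def unit_price(resolution, duration_s):
    """Look up the listed per-run price; refuse to extrapolate unlisted pairs."""
    try:
        return LORA_PRICE_USD[(resolution, duration_s)]
    except KeyError:
        raise ValueError(f"no listed price for {resolution} at {duration_s}s") from None


def batch_cost(resolution, duration_s, runs):
    """Total cost in USD for `runs` generations at the same settings."""
    return unit_price(resolution, duration_s) * runs


def implied_standard_price(resolution, duration_s):
    """Standard-variant price implied by the 25% premium (a derived figure)."""
    return unit_price(resolution, duration_s) / LORA_PREMIUM
```

For example, `batch_cost("720p", 10, 100)` comes to 20 dollars, and `implied_standard_price("720p", 5)` works out to 0.08 dollars per run.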

LoRA Best Practices

To get the most out of custom LoRA adapters:

- Start with scale 1.0 and adjust incrementally. Lower scales (0.5-0.8) apply subtle stylistic influence, while higher scales (1.5-2.5) produce stronger effects.

- Test LoRA combinations carefully. Multiple LoRAs can interact unpredictably, so validate new combinations with small test runs before scaling production.

- Match LoRAs to content type. Character LoRAs work best for character-focused content; style LoRAs excel at aesthetic consistency; lighting LoRAs shine in product visualization.

- Let audio adapt automatically. The model generates contextually appropriate audio even with heavy style customization, so you don’t need separate audio LoRAs in most scenarios.
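One way to follow the advice on testing combinations is to enumerate a small grid of scales around 1.0 and run each at the cheapest setting (480p, 5 seconds). The sketch below only generates the test configurations; it does not submit them. The `scale_sweep` helper, the default scale grid, and the dictionary keys are hypothetical choices for illustration.

```python
from itertools import product

MAX_LORAS = 3


def scale_sweep(lora_urls, scales=(0.5, 1.0, 1.5)):
    """Yield one draft-quality test configuration per combination of scales.

    Every adapter in `lora_urls` is paired with every value in `scales`,
    so the number of configurations is len(scales) ** len(lora_urls).
    """
    lora_urls = list(lora_urls)
    if not 1 <= len(lora_urls) <= MAX_LORAS:
        raise ValueError(f"expected 1 to {MAX_LORAS} LoRA URLs")
    for combo in product(scales, repeat=len(lora_urls)):
        yield {
            "resolution": "480p",  # cheapest listed tier, suitable for drafts
            "duration": 5,
            "loras": [
                {"path": url, "scale": scale}
                for url, scale in zip(lora_urls, combo)
            ],
        }
```

With two adapters and the default three scales this yields nine configurations, which at the listed $0.075 per 480p, 5-second run costs about $0.68 to evaluate. The grid grows exponentially with the number of adapters, so keep the scale list short when sweeping three LoRAs.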

Why Choose WaveSpeedAI?

Running LTX-2 locally demands significant GPU resources—an RTX 4090 needs 9-12 minutes for a 10-second 4K clip, while lower-spec hardware can take 20+ minutes. WaveSpeedAI eliminates this barrier with cloud-based inference optimized for speed and cost-efficiency:

- No cold starts: Your jobs begin processing immediately, with no infrastructure warmup delays.

- Predictable pricing: Pay only for what you generate, with transparent per-run costs.

- Production reliability: Enterprise-grade uptime and performance for mission-critical workflows.

- REST API access: Integrate video generation directly into your applications with simple HTTP requests.

Ready to Animate Your World?

LTX-2 19B Image-to-Video LoRA represents the convergence of cutting-edge AI research and practical production needs. Whether you’re creating branded content at scale, animating custom characters, or exploring artistic possibilities, this model delivers the quality, control, and performance required for professional work.

Start generating today at https://wavespeed.ai/models/wavespeed-ai/ltx-2-19b/image-to-video-lora and experience the future of AI-powered video creation.

Related Articles

Introducing WaveSpeedAI LTX 2 19b Image-to-Video on WaveSpeedAI

Introducing WaveSpeedAI LTX 2 19b Text-to-Video LoRA on WaveSpeedAI

Introducing WaveSpeedAI LTX 2 19b Text-to-Video on WaveSpeedAI

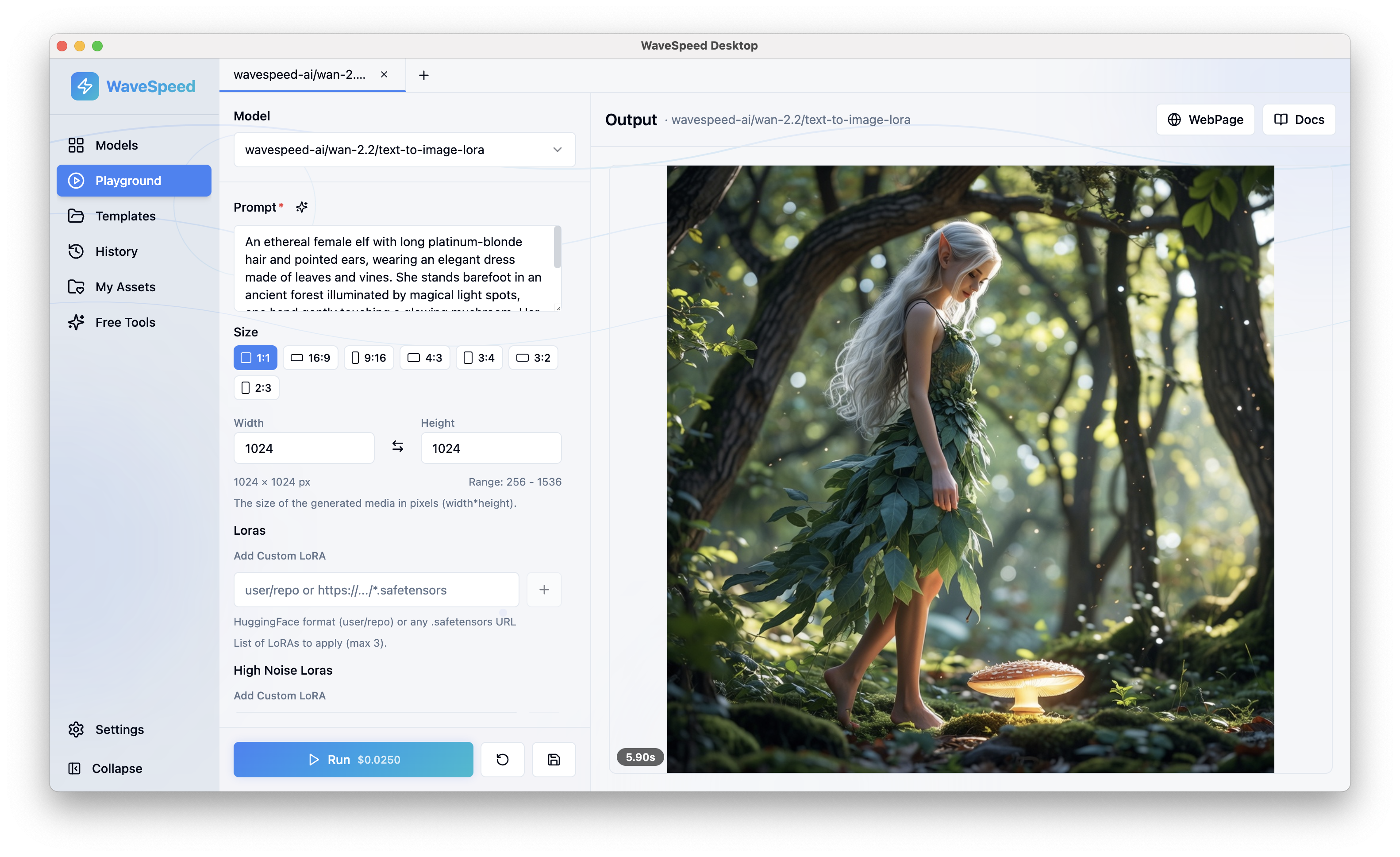

WaveSpeed Desktop: The Best Desktop AI Studio App

WaveSpeedAI vs Hedra: Which AI Video Platform is Best?