Introducing WaveSpeedAI LTX 2 19b Image-to-Video on WaveSpeedAI

Transform Static Images Into Living Stories With Synchronized Audio

The gap between static imagery and dynamic video has long been a creative bottleneck. While image-to-video AI models have emerged over the past year, they’ve largely delivered silent clips that require separate audio production workflows. Today, WaveSpeedAI brings you LTX-2 19B Image-to-Video, the first DiT-based audio-video foundation model that generates synchronized sound and motion in a single pass—transforming how creators animate visual content.

What Makes LTX-2 Different

LTX-2 represents a fundamental architectural breakthrough in generative AI. Built on a 19-billion-parameter Diffusion Transformer (DiT) architecture, this model doesn’t just animate your images—it orchestrates a complete audio-visual experience. Developed by Lightricks and open-sourced in January 2026, LTX-2 eliminates the traditional divide between video and audio generation pipelines.

When you upload a reference image and describe the motion you want, LTX-2 preserves your original composition—the subject, framing, and lighting—while generating natural movement and contextually appropriate sound. Rain sounds emerge with falling droplets. Jazz music plays as virtual musicians perform. Crowd noise swells as animated characters interact. The audio isn’t added afterward; it’s generated alongside the visuals based on the same understanding of your scene.

Key Capabilities

Native 4K Output at High Frame Rates

The underlying LTX-2 model is capable of native 4K output; on WaveSpeedAI, it currently supports resolutions up to 1080p. Generate at up to 50 frames per second for smooth, professional-quality motion.

Flexible Duration Control

Create clips from 5 to 20 seconds in length—long enough for social media posts, product demos, marketing spots, and narrative sequences without requiring manual stitching.

Three Resolution Tiers for Every Workflow

- 480p: Fast iteration at $0.06 per 5 seconds—perfect for rapid prototyping and testing different motion prompts

- 720p: Balanced quality and cost at $0.08 per 5 seconds—the default choice for most production work

- 1080p: Maximum detail at $0.12 per 5 seconds—ideal for final deliverables and high-end content
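To budget a clip from the tiers above, a small helper can multiply the per-5-second rate by the clip length. This is a minimal sketch, not part of the WaveSpeedAI API: the function name `estimate_cost` is illustrative, and it assumes cost scales linearly with duration and is billed pro rata rather than rounded to 5-second blocks.

```python
from decimal import Decimal

# Per-5-second rates in US dollars, taken from the tiers listed above.
RATE_PER_5S = {
    "480p": Decimal("0.06"),
    "720p": Decimal("0.08"),
    "1080p": Decimal("0.12"),
}


def estimate_cost(resolution: str, duration_s: int) -> Decimal:
    """Estimate the price of one clip, assuming linear scaling with duration."""
    if resolution not in RATE_PER_5S:
        raise ValueError(f"unknown resolution tier: {resolution!r}")
    if not 5 <= duration_s <= 20:
        raise ValueError("duration must be between 5 and 20 seconds")
    # Assumption: billing is pro rata, not rounded up to whole 5-second blocks.
    return RATE_PER_5S[resolution] * Decimal(duration_s) / Decimal(5)


if __name__ == "__main__":
    for tier in RATE_PER_5S:
        print(tier, estimate_cost(tier, 10))
```

Using `Decimal` instead of floats keeps the cent values exact, which matters when estimates for many clips are added up.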

Preservation of Input Composition

Unlike models that reinterpret your image, LTX-2 maintains fidelity to your original visual—making it reliable for brand assets, product photography, and any scenario where consistency matters.

Automatic Audio Synchronization

Sound is generated based on visual motion and prompt context. Describe specific audio cues in your prompt (“rain,” “jazz piano,” “ocean waves”) or let the model infer ambient sound from the action.

Real-World Applications

Product Marketing

Animate product photography with subtle motion and ambient sound. A watch face glints as the second hand moves. A beverage pours with realistic liquid physics and sound. Static product shots become engaging video ads without additional audio production costs.

Social Media Content

Transform static posts into animated content that captures attention in crowded feeds. Portrait photos gain lifelike movement. Landscape shots come alive with natural motion and environmental audio. Content creators can produce more engaging material without video editing expertise.

Brand Storytelling

Storyboard frames and concept art become animated previews. Marketing teams can visualize campaigns before full production. Agencies can present motion concepts to clients faster and more affordably than traditional animatics.

Educational Content

Animate diagrams, historical photographs, and instructional images. A static anatomy illustration becomes a rotating 3D-style animation. Historical photos gain subtle movement that brings the past to life. Complex concepts become more engaging through motion.

Portrait Animation

Bring headshots and portraits to life with natural facial movements, blinking, and ambient sound. Professional photographers can offer animated portraits as premium products. Personal photos become memorable keepsakes with added dimension.

Getting Started on WaveSpeedAI

WaveSpeedAI makes LTX-2 19B accessible through a simple REST API—no GPU infrastructure, no cold starts, no complex setup. Here’s the basic workflow:

```python
import wavespeed

output = wavespeed.run(
    "wavespeed-ai/ltx-2-19b/image-to-video",
    {
        "image": "your-image.jpg",
        "prompt": "gentle rain falling, ambient nature sounds",
        "resolution": "720p",
        "duration": 10
    }
)

print(output["outputs"][0])  # Video URL with synchronized audio
```

Best Practices:

- Start with 480p resolution to experiment with different motion prompts and find the right animation style

- Use high-quality, sharp, well-exposed images for optimal results

- Keep motion descriptions focused—one clear action per prompt yields better temporal consistency

- Specify audio cues when you need particular sounds (“jazz piano,” “city traffic,” “ocean waves”)

- Use a fixed seed value when comparing prompt variations to isolate the effects of prompt changes

- Scale up to 720p for client reviews and 1080p for final delivery
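The fixed-seed comparison suggested above can be sketched as a helper that builds one request payload per prompt while holding every other setting constant. This is only an illustration: `build_variants` is a hypothetical helper, and the `"seed"` field name is an assumption that should be checked against the model's API schema. The other fields mirror the example request shown earlier.

```python
from typing import Any


def build_variants(image: str, prompts: list[str], seed: int,
                   resolution: str = "480p", duration: int = 5) -> list[dict[str, Any]]:
    """Build one request payload per prompt, holding everything else constant."""
    return [
        {
            "image": image,
            "prompt": prompt,
            "resolution": resolution,  # 480p keeps iteration cheap
            "duration": duration,
            "seed": seed,  # hypothetical field name; verify before use
        }
        for prompt in prompts
    ]


if __name__ == "__main__":
    payloads = build_variants(
        "your-image.jpg",
        ["gentle rain falling, ambient nature sounds",
         "heavy rain with distant thunder"],
        seed=42,
    )
    for payload in payloads:
        print(payload["prompt"], payload["seed"])
```

Each payload could then be passed as the second argument to `wavespeed.run`. Because only the prompt differs between requests, differences in the output can be attributed to the prompt wording.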

The model typically generates a 10-second clip in under a minute. Within each resolution tier, cost scales linearly with duration: a 15-second video at 720p is three 5-second units at $0.08, or $0.24 in total. That is dramatically less than traditional video production or even concatenating multiple shorter clips from competing platforms.

Why This Matters Now

Image-to-video generation has evolved rapidly over the past year, but most models deliver silent output. Creators have been forced into separate workflows: generate video, then add audio in post-production. LTX-2’s unified approach changes this calculus.

According to recent performance analyses, LTX-2’s visual fidelity outperforms many competing models while maintaining computational efficiency. The DiT architecture—adapted from cutting-edge research in joint audio-visual generation—enables the model to understand spatial relationships and generate coherent motion with matched audio cues.

For enterprise users, the open-source foundation of LTX-2 means transparency and long-term viability. For individual creators, WaveSpeedAI’s infrastructure removes the complexity of running a 19-billion-parameter model locally, offering instant inference with predictable pricing.

Production-Ready With No Compromise

LTX-2 isn’t an experimental preview—it’s a production-ready model with extensive optimization. The underlying architecture has been quantized and optimized for NVIDIA hardware, reducing model size by approximately 30% and improving inference speed up to 2x compared to earlier versions.

When comparing cost efficiency, generating a 60-second narrative with LTX-2 on WaveSpeedAI (for example, as three 20-second clips, the maximum clip length) costs roughly 50% less than creating six 10-second clips with traditional cloud video platforms, and synchronized audio is included.

Start Creating Today

Static images are just the beginning. With LTX-2 19B on WaveSpeedAI, every photograph becomes a potential animated sequence with natural sound. Whether you’re producing social content, marketing materials, or narrative projects, this model collapses the production timeline from hours to minutes.

Ready to animate your images?

Access LTX-2 19B Image-to-Video now at https://wavespeed.ai/models/wavespeed-ai/ltx-2-19b/image-to-video

No cold starts. No infrastructure. No separate audio production. Just fast, affordable, synchronized audio-video generation from your static images—available through a simple API call.

Related Articles

Introducing WaveSpeedAI LTX 2 19b Image-to-Video LoRA on WaveSpeedAI

Introducing WaveSpeedAI LTX 2 19b Text-to-Video LoRA on WaveSpeedAI

Introducing WaveSpeedAI LTX 2 19b Text-to-Video on WaveSpeedAI

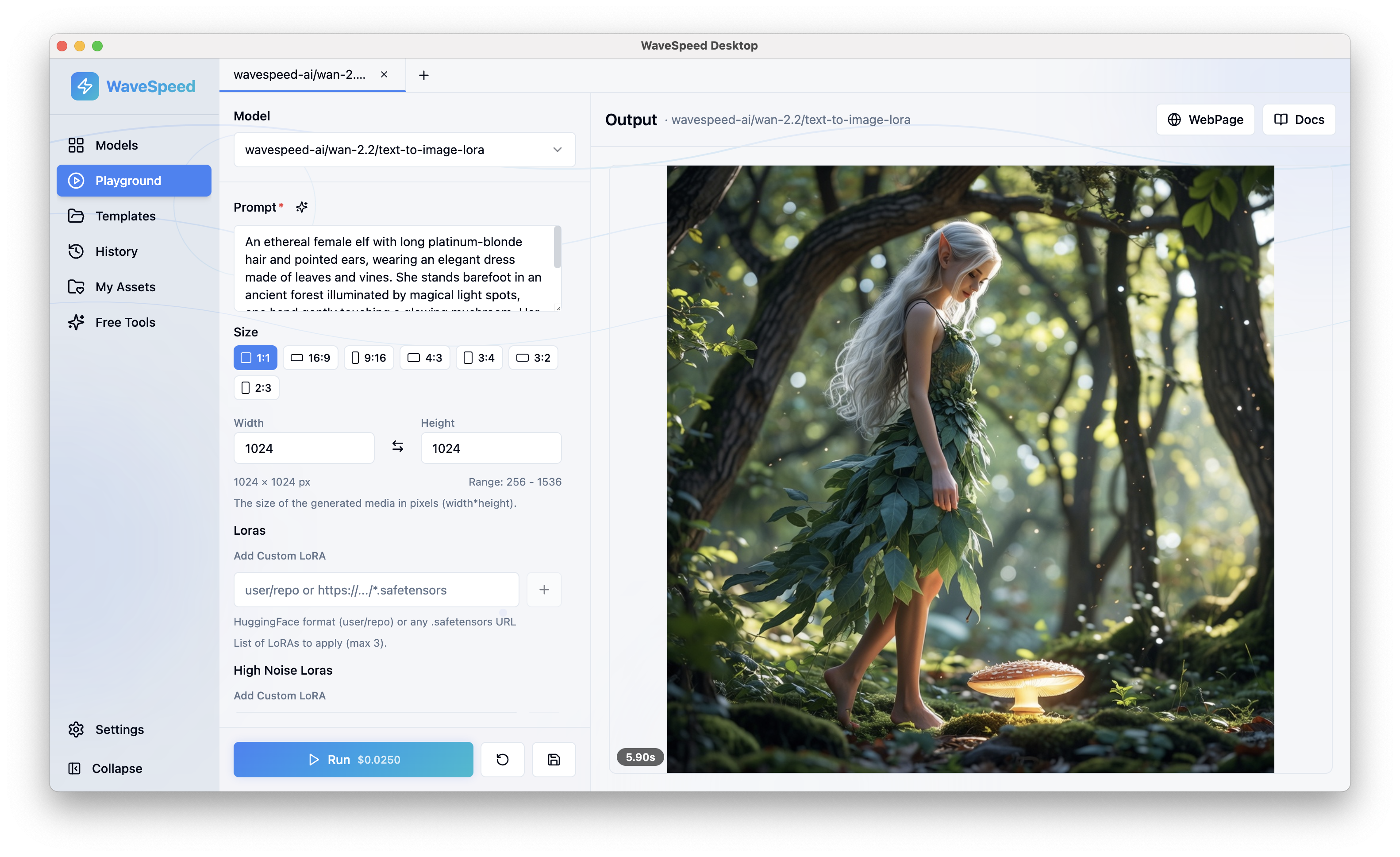

WaveSpeed Desktop: The Best Desktop AI Studio App

WaveSpeedAI vs Hedra: Which AI Video Platform is Best?