Introducing WaveSpeedAI LTX 2 19b Text-to-Video LoRA on WaveSpeedAI

Introducing WaveSpeedAI LTX-2 19B Text-to-Video with LoRA: Personalized AI Video Generation at Scale

The future of AI-generated video content just got more personal. WaveSpeedAI is excited to announce the launch of LTX-2 19B Text-to-Video LoRA, the first DiT-based audio-video foundation model that combines synchronized audio-video generation with full custom LoRA adapter support. This breakthrough enables creators to generate videos with personalized styles, consistent characters, and unique visual aesthetics—all from a simple text prompt.

What is LTX-2 19B Text-to-Video LoRA?

LTX-2 19B Text-to-Video LoRA builds on Lightricks’ groundbreaking LTX-2 architecture, which made waves in the AI community as the first production-ready model to generate synchronized video and audio in a single pass. The base model is capable of native 4K output and 50 fps rendering (this WaveSpeedAI endpoint outputs 480p, 720p, or 1080p). The LoRA version takes it further by letting you apply up to three custom LoRA (Low-Rank Adaptation) adapters simultaneously.

LoRA technology has revolutionized how AI models are personalized without retraining the entire architecture. Instead of updating every weight, a LoRA trains a pair of small low-rank matrices whose product is added to the frozen base weights. This lets the model learn and reproduce specialized styles, character designs, brand identities, or artistic movements while keeping the core model’s generation capabilities intact.
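To make the idea concrete, here is a toy sketch in plain Python of the standard LoRA update, W' = W + scale · (B A), on a tiny matrix. It assumes the service’s scale parameter multiplies the low-rank update the way common open-source LoRA loaders do (ignoring the alpha/rank factor some implementations fold in); WaveSpeedAI’s internals are not described here, and all matrix sizes are illustrative.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def apply_lora(w, lora_b, lora_a, scale=1.0):
    """Return W + scale * (B @ A); the frozen base weight W is not modified.

    Assumption: `scale` multiplies the low-rank update, as in common LoRA loaders.
    """
    delta = matmul(lora_b, lora_a)  # (d_out x r) @ (r x d_in) -> d_out x d_in
    return [[w[i][j] + scale * delta[i][j] for j in range(len(w[0]))]
            for i in range(len(w))]


def param_counts(d_out, d_in, rank):
    """Trainable parameters: full fine-tune of one matrix vs. a rank-r LoRA."""
    return d_out * d_in, d_out * rank + rank * d_in


# A 3x3 base weight adapted with a rank-1 LoRA: B is 3x1, A is 1x3.
W = [[1.0, 0.0, 0.0],
     [0.0, 1.0, 0.0],
     [0.0, 0.0, 1.0]]
B = [[1.0], [0.0], [2.0]]
A = [[0.5, 0.5, 0.0]]

adapted = apply_lora(W, B, A, scale=1.0)
print(adapted)  # [[1.5, 0.5, 0.0], [0.0, 1.0, 0.0], [1.0, 1.0, 1.0]]

# Illustrative sizes: a 4096x4096 layer with a rank-16 adapter.
print(param_counts(4096, 4096, 16))  # (16777216, 131072)
```

A scale of 0 leaves the base weight untouched, which is why small scales give subtle effects and larger ones push the output further from the base model.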

At its core, this 19-billion-parameter Diffusion Transformer (DiT) processes a text prompt and generates video together with a matching soundtrack. Because the audio is generated alongside the video, footsteps, ambient sounds, and environmental audio align with the visual content automatically, creating immersive experiences that previously required manual sound design.

Key Features That Set It Apart

Custom Style Personalization: Apply up to three LoRA adapters per generation, enabling unprecedented control over visual aesthetics. Whether you’re maintaining brand consistency across marketing videos, creating content with recurring characters, or exploring unique artistic styles, LoRAs give you the flexibility to shape outputs to your exact specifications.

True Audio-Video Synchronization: Unlike competing models that generate video first and require a separate audio production workflow, LTX-2 creates both simultaneously in a single pass. This keeps visual and auditory elements closely aligned, from the rustle of leaves matching on-screen movement to dialogue sync in character animations.

Flexible Output Options: Generate videos in multiple resolutions (480p, 720p, and 1080p) with support for both landscape (16:9) and vertical (9:16) aspect ratios. Duration ranges from 5 to 20 seconds, giving you the flexibility to create quick social media clips or longer narrative sequences.

Efficient Architecture: The model’s Video-VAE compresses video at a ratio of 1:192, so the diffusion transformer works on a compact latent sequence rather than raw pixels while visual fidelity is maintained. This efficiency translates to faster generation times and lower computational costs compared to similarly capable models.

Parameter Control: Adjust each LoRA’s scale weight from 0 to 4. Lower values (0.5-1.0) give light stylization, while higher values (1.0-2.0) produce dramatic transformations. This granular control lets you dial in exactly the right amount of customization for each project.

Real-World Use Cases

Brand Content Creation: Marketing teams can train LoRAs on brand visual guidelines and consistently generate on-brand video content at scale. Maintain color palettes, design languages, and visual identities across hundreds of video assets without manual editing.

Character Animation: Content creators developing episodic content or educational series can use character LoRAs to ensure the same protagonist appears consistently across videos. This opens up new possibilities for AI-assisted storytelling where character continuity was previously a major challenge.

Artistic Video Production: Digital artists and filmmakers can apply style LoRAs trained on specific artistic movements—from anime aesthetics to painterly effects—creating unique visual experiences that blend AI capabilities with human creative vision.

Social Media Content: Influencers and content creators can develop signature visual styles through custom LoRAs, then rapidly generate vertical-format videos optimized for TikTok, Instagram Reels, and YouTube Shorts while maintaining their distinctive aesthetic.

E-learning and Training: Educational content producers can use LoRAs to create consistent visual environments and characters, making multi-video course sequences feel cohesive and professionally produced without expensive video production teams.

Getting Started on WaveSpeedAI

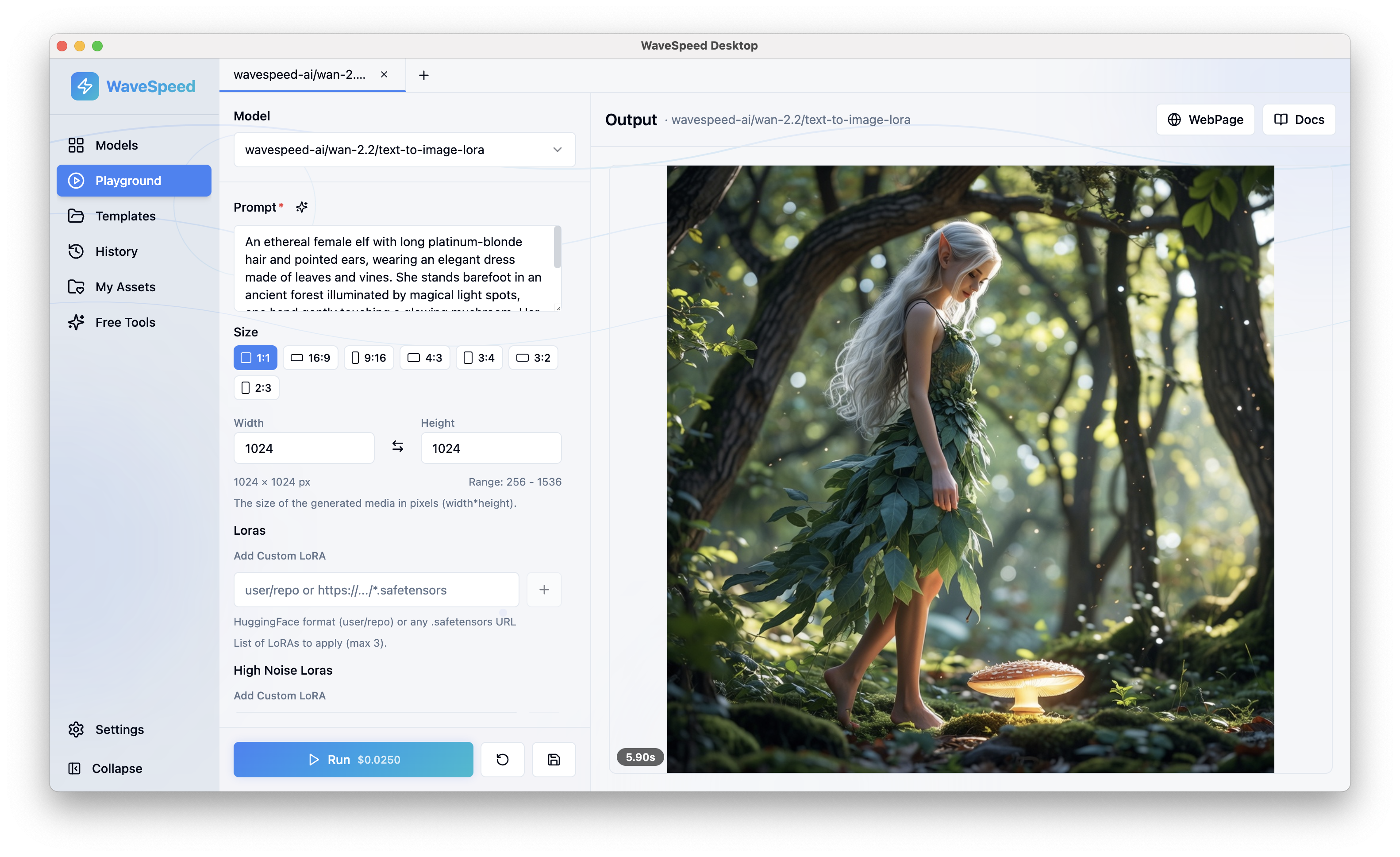

Using LTX-2 19B Text-to-Video LoRA on WaveSpeedAI is straightforward:

- Craft Your Prompt: Write a detailed text description covering scene details, actions, visual style, and any audio cues you want incorporated. The more specific your prompt, the better the model can interpret your creative vision.

- Add LoRA Adapters: Use the “+ Add Item” button to include up to three custom LoRA adapters. Each LoRA requires a URL to its weights file and accepts an optional scale parameter (0-4, default 1.0). Start with a scale of 1.0 and adjust based on results.

- Configure Output Settings: Select your target resolution (480p, 720p, or 1080p) and aspect ratio (16:9 for landscape or 9:16 for vertical). Choose a duration between 5 and 20 seconds; shorter durations are great for testing, while longer clips suit final renders.

- Set Optional Parameters: Specify a seed value for reproducible results, or leave it at -1 for random generation. A fixed seed is particularly useful when iterating on prompts while keeping other variables constant.

- Generate and Download: Submit your request and WaveSpeedAI’s infrastructure handles the rest, with no cold starts and no waiting for containers to spin up. Your video is generated quickly and is ready for download.
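The settings from these steps can be collected and checked before submission. The sketch below is hypothetical: every field name (`prompt`, `loras`, `path`, `scale`, `resolution`, `aspect_ratio`, `duration`, `seed`) and the example weights URL are assumptions for illustration, not the documented WaveSpeedAI schema. Only the limits come from the steps above.

```python
import json

# Hypothetical payload builder. Field names are illustrative assumptions, not
# the documented WaveSpeedAI schema; the limits mirror the steps above.
ALLOWED_RESOLUTIONS = {"480p", "720p", "1080p"}
ALLOWED_ASPECT_RATIOS = {"16:9", "9:16"}
MAX_LORAS = 3


def build_request(prompt, loras, resolution, aspect_ratio, duration, seed=-1):
    """Validate generation settings and return a JSON-serializable payload."""
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    if len(loras) > MAX_LORAS:
        raise ValueError(f"at most {MAX_LORAS} LoRA adapters are supported")
    lora_entries = []
    for lora in loras:
        scale = lora.get("scale", 1.0)  # scale is optional; 1.0 is the default
        if not 0 <= scale <= 4:
            raise ValueError(f"LoRA scale {scale} is outside the 0-4 range")
        lora_entries.append({"path": lora["path"], "scale": scale})
    if resolution not in ALLOWED_RESOLUTIONS:
        raise ValueError(f"unsupported resolution: {resolution}")
    if aspect_ratio not in ALLOWED_ASPECT_RATIOS:
        raise ValueError(f"unsupported aspect ratio: {aspect_ratio}")
    if not 5 <= duration <= 20:
        raise ValueError("duration must be between 5 and 20 seconds")
    return {
        "prompt": prompt,
        "loras": lora_entries,
        "resolution": resolution,
        "aspect_ratio": aspect_ratio,
        "duration": duration,
        "seed": seed,  # -1 requests a random seed
    }


request = build_request(
    prompt="A lighthouse on a stormy coast at dusk, waves crashing, wind howling",
    loras=[{"path": "https://example.com/loras/watercolor-style.safetensors"}],
    resolution="720p",
    aspect_ratio="9:16",
    duration=10,
)
print(json.dumps(request, indent=2))
```

Validating locally catches out-of-range scales or a fourth adapter before a paid request is sent.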

WaveSpeedAI’s implementation offers several advantages over running the model yourself: no GPU requirements, no model management, transparent pricing starting at $0.075 for 480p 5-second clips, and production-ready API access with consistent performance.

Try LTX-2 19B Text-to-Video LoRA on WaveSpeedAI today: https://wavespeed.ai/models/wavespeed-ai/ltx-2-19b/text-to-video-lora

Pro Tips for Best Results

Start Conservative with LoRA Scales: Begin with scale values around 1.0 and adjust incrementally. Too high a scale can overwhelm the base model’s capabilities, while values below 0.5 may not produce noticeable effects.
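One way to adjust incrementally is to try scales in order of distance from 1.0 while holding the seed fixed, so only the LoRA strength changes between runs. This is a hypothetical helper: the 0.25 step and the 0.5-2.0 bounds are assumptions drawn from the ranges suggested above, and the seed value is arbitrary.

```python
def scale_sweep(start=1.0, step=0.25, low=0.5, high=2.0):
    """Return LoRA scales ordered outward from `start`, staying within [low, high]."""
    scales = [start]
    k = 1
    while True:
        added = False
        below, above = start - k * step, start + k * step
        if below >= low:
            scales.append(below)
            added = True
        if above <= high:
            scales.append(above)
            added = True
        if not added:  # both directions have left the allowed range
            break
        k += 1
    return scales


FIXED_SEED = 42  # hypothetical; any fixed seed keeps runs comparable
for scale in scale_sweep():
    print(f"seed={FIXED_SEED} scale={scale}")
```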

Test LoRA Combinations: When using multiple LoRAs simultaneously, test combinations carefully as they can interact in unexpected ways. A character LoRA combined with a style LoRA might produce different results than each applied separately.
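Why combinations can surprise you: in the toy layer below (plain Python, not LTX-2 code), two LoRA deltas on the same weight add linearly, but a nonlinearity after the layer means the combined output differs from the sum of each adapter’s separate effect. The delta values are made up for illustration and assume each adapter’s scale is already applied.

```python
def matvec(m, v):
    """Matrix-vector product for lists of rows."""
    return [sum(row[j] * v[j] for j in range(len(v))) for row in m]


def relu(v):
    return [max(0.0, x) for x in v]


def add(a, b):
    """Element-wise sum of two matrices."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def layer(w, x):
    return relu(matvec(w, x))


W = [[1.0, 0.0], [0.0, 1.0]]
x = [1.0, 1.0]
delta_character = [[-2.0, 0.0], [0.0, 0.0]]  # hypothetical "character" LoRA delta
delta_style = [[1.5, 0.0], [0.0, 0.0]]       # hypothetical "style" LoRA delta

base = layer(W, x)
character_only = layer(add(W, delta_character), x)
style_only = layer(add(W, delta_style), x)
both = layer(add(add(W, delta_character), delta_style), x)

# What you would predict by adding each adapter's separate change to the base.
predicted = [c + s - b for c, s, b in zip(character_only, style_only, base)]
print(both, predicted)  # [0.5, 1.0] [1.5, 1.0]
```

Without the ReLU the two effects would add exactly; the clipping is what makes the combination behave differently from either adapter alone.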

Match LoRAs to Content: Use character LoRAs when generating content featuring specific people or animated characters, and style LoRAs for overall aesthetic control. Don’t try to make a style LoRA handle character consistency—use the right tool for each job.

Include Trigger Words: Many LoRAs are trained with specific trigger words or phrases that activate their effects. If your LoRA documentation mentions trigger words, be sure to include them in your prompts.
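A small helper can make sure trigger words are never forgotten. This is a hypothetical sketch: placing missing trigger words at the start of the prompt is a common convention, not a documented requirement, and `pastelcel style` is an invented example trigger.

```python
def ensure_trigger_words(prompt, trigger_words):
    """Prepend any trigger words that do not already appear in the prompt.

    Uses a simple case-insensitive substring check, so a trigger word embedded
    inside a longer word would count as present.
    """
    lowered = prompt.lower()
    missing = [word for word in trigger_words if word.lower() not in lowered]
    if not missing:
        return prompt
    return ", ".join(missing) + ", " + prompt


print(ensure_trigger_words("a chef plating dessert, soft kitchen ambience", ["pastelcel style"]))
```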

Leverage Automatic Audio: The model generates appropriate audio even when using custom visual styles, so describe both visual and auditory elements in your prompt for best results.

Pricing That Makes Sense

WaveSpeedAI offers transparent, usage-based pricing with no subscription fees:

- 480p: $0.075 per 5 seconds ($0.30 for 20 seconds)

- 720p: $0.10 per 5 seconds ($0.40 for 20 seconds)

- 1080p: $0.15 per 5 seconds ($0.60 for 20 seconds)

Pricing scales linearly with duration and adjusts based on resolution. The LoRA version carries a 25% premium over the standard model to account for the additional computational requirements of applying custom adapters, but delivers significantly more value through personalization capabilities.
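The pricing list reduces to a per-5-second rate multiplied by duration. This sketch uses the rates listed above and `Decimal` to avoid floating-point rounding. Two assumptions: durations that are not multiples of 5 are billed pro rata, and the "implied standard price" simply divides out the stated 25% premium rather than quoting an official rate.

```python
from decimal import Decimal

# Per-5-second rates for the LoRA endpoint, from the pricing list above.
RATE_PER_5S = {
    "480p": Decimal("0.075"),
    "720p": Decimal("0.10"),
    "1080p": Decimal("0.15"),
}
LORA_PREMIUM = Decimal("1.25")  # the stated 25% premium over the standard model


def lora_price(resolution, seconds):
    """Price in USD, assuming linear (pro rata) billing from 5 to 20 seconds."""
    if not 5 <= seconds <= 20:
        raise ValueError("duration must be between 5 and 20 seconds")
    return RATE_PER_5S[resolution] * Decimal(seconds) / Decimal(5)


def implied_standard_price(resolution, seconds):
    """Standard-model price implied by the 25% premium (derived, not official)."""
    return lora_price(resolution, seconds) / LORA_PREMIUM


for res in ("480p", "720p", "1080p"):
    print(res, lora_price(res, 5), lora_price(res, 20))
```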

The Technical Edge

The LTX-2 architecture represents a significant leap forward in video generation AI. Its 19-billion-parameter Diffusion Transformer processes text prompts through attention layers that model both spatial and temporal relationships. The model’s Video-VAE achieves 1:192 compression, with each latent token covering a 32 × 32 pixel region across 8 frames, enabling efficient processing without sacrificing quality.
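The 1:192 figure can be reproduced with a little arithmetic. This sketch assumes 3 RGB input channels and 128 latent channels per token (the value reported for the original LTX-Video VAE, assumed here to carry over to LTX-2). It also assumes frame size and frame count are exact multiples of the 32 × 32 × 8 patch; real pipelines may treat the first frame and padding differently.

```python
PATCH_H, PATCH_W, PATCH_T = 32, 32, 8  # pixels per token: height x width x frames
RGB_CHANNELS = 3
LATENT_CHANNELS = 128  # assumption: value reported for the LTX-Video VAE


def compression_ratio():
    """Input values covered by one token divided by latent values per token."""
    pixel_values = PATCH_H * PATCH_W * PATCH_T * RGB_CHANNELS  # 24,576
    return pixel_values // LATENT_CHANNELS


def latent_tokens(height, width, frames):
    """Token count, assuming dimensions are exact multiples of the patch size."""
    return (height // PATCH_H) * (width // PATCH_W) * (frames // PATCH_T)


print(compression_ratio())             # 192
print(latent_tokens(576, 1024, 128))   # 9216 tokens instead of ~75.5M pixels
```

The transformer attends over thousands of tokens rather than tens of millions of pixels, which is where the efficiency gain comes from.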

NVIDIA’s recent optimizations for LTX-2 deliver 3x faster performance with 60% less VRAM on RTX 50 Series GPUs using the NVFP4 format, and 2x faster speeds with 40% less VRAM using NVFP8 quantization. Those figures apply to local RTX hardware. On WaveSpeedAI, we handle the infrastructure for you and keep applying optimizations of this kind to our backend, which is how generation times and costs continue to improve.

Ready to Create?

LTX-2 19B Text-to-Video LoRA opens new creative possibilities for anyone working with AI-generated video content. Whether you’re a brand manager maintaining visual consistency, a content creator developing signature styles, an educator building course materials, or an artist exploring new creative frontiers, this model delivers the flexibility and quality needed for professional results.

Visit https://wavespeed.ai/models/wavespeed-ai/ltx-2-19b/text-to-video-lora to start generating personalized videos today. No GPU required, no cold starts, just fast, affordable, and consistent AI video generation with the creative control you need.
