Introducing Image Content Moderator on WaveSpeedAI

Maintaining safe, policy-compliant digital spaces has become one of the most critical challenges facing platforms today. Whether you’re running a social media application, an e-commerce marketplace, or a community forum, the sheer volume of user-generated images makes purely manual moderation impractical at scale. That’s why we’re excited to announce the availability of Image Content Moderator on WaveSpeedAI, an automated solution designed to detect and flag policy-violating or inappropriate images in real time.

What is Image Content Moderator?

Image Content Moderator is an AI-powered moderation API that automatically analyzes images for potentially harmful, inappropriate, or policy-violating content. Built for production environments where speed and reliability are paramount, this model provides instant classification results that enable you to take corrective action before problematic content reaches your users.

The system works by analyzing visual elements within images and returning detailed moderation signals that indicate whether content violates common platform policies. This allows developers to implement automated filtering pipelines, trigger human review workflows for edge cases, or take immediate action on clearly violating content.
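To make the idea of "moderation signals" concrete, here is a minimal Python sketch of how a response might be reduced to a single signal your code can act on. The response shape (`flagged`, `categories`) and the category names are assumptions made for illustration, not the documented schema, so check the API reference for the actual field names.

```python
from dataclasses import dataclass

# Hypothetical response shape, invented for this example.
# The real API may use different field names and categories.
SAMPLE_RESPONSE = {
    "flagged": True,
    "categories": {"explicit": 0.02, "violence": 0.91, "hate_symbols": 0.05},
}


@dataclass(frozen=True)
class ModerationSignal:
    flagged: bool
    top_category: str
    top_score: float


def parse_signal(response: dict) -> ModerationSignal:
    """Reduce a raw response to its highest-scoring category."""
    flagged = bool(response.get("flagged", False))
    scores = response.get("categories", {})
    if not scores:
        # No per-category scores present: report a neutral signal.
        return ModerationSignal(flagged, "none", 0.0)
    top_category = max(scores, key=scores.get)
    return ModerationSignal(flagged, top_category, float(scores[top_category]))


signal = parse_signal(SAMPLE_RESPONSE)
print(signal.flagged, signal.top_category, signal.top_score)
```

Keeping the parsing in one small function means that only this function needs to change once you adapt the sketch to the real response format.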

Key Features

- Real-Time Analysis: Get moderation results in milliseconds, enabling synchronous content filtering without degrading user experience

- Comprehensive Detection: Identifies multiple categories of policy-violating content including explicit material, violence, hate symbols, and other harmful imagery

- Production-Ready API: Simple REST endpoint integration means you can be up and running in minutes, not days

- Zero Cold Starts: WaveSpeedAI’s infrastructure ensures consistent low-latency responses regardless of request patterns or traffic spikes

- Scalable Architecture: Handle anywhere from a handful of images to millions per day without infrastructure concerns

- Actionable Outputs: Receive structured responses with confidence scores that enable nuanced policy enforcement

- Affordable Pricing: Pay only for what you use with transparent, predictable costs that scale with your business

Use Cases

Social Media and Community Platforms

User-generated content platforms face an endless stream of image uploads. Image Content Moderator can serve as your first line of defense, automatically screening every upload and routing potentially problematic content to human reviewers while allowing safe content through immediately. This hybrid approach maximizes both safety and user experience.
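The hybrid approach described above can be sketched as a small triage step: low-risk uploads are published immediately, and everything else goes into a review queue ordered so that the riskiest items are seen first. The single `risk_score` input and the 0.20 threshold are assumptions chosen for illustration. You would derive the score from the moderation response and tune the threshold to your own policy.

```python
import heapq
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(order=True)
class ReviewItem:
    priority: float                      # negated risk, so highest risk pops first
    upload_id: str = field(compare=False)


class UploadTriage:
    """Publish low-risk uploads; queue the rest for human review."""

    def __init__(self, allow_below: float = 0.20) -> None:
        self.allow_below = allow_below   # assumed threshold, tune per policy
        self._queue: List[ReviewItem] = []

    def screen(self, upload_id: str, risk_score: float) -> str:
        if risk_score < self.allow_below:
            return "published"
        heapq.heappush(self._queue, ReviewItem(-risk_score, upload_id))
        return "queued_for_review"

    def next_for_review(self) -> Optional[str]:
        """Return the riskiest pending upload, or None if the queue is empty."""
        if not self._queue:
            return None
        return heapq.heappop(self._queue).upload_id


triage = UploadTriage()
print(triage.screen("upload-a", 0.05))
print(triage.screen("upload-b", 0.40))
print(triage.screen("upload-c", 0.95))
print(triage.next_for_review())
```

Ordering the queue by risk is one possible design choice: it puts reviewer time on the most likely violations first, at the cost of making borderline uploads wait longer.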

E-Commerce Marketplaces

Product listings with inappropriate imagery can damage your brand and violate marketplace policies. Integrate the moderation API into your seller onboarding flow to catch policy violations before listings go live, reducing the burden on trust and safety teams while maintaining marketplace integrity.

Dating and Social Apps

Platforms where users share personal photos have heightened moderation requirements. Automated image screening helps prevent the spread of explicit content, harassment imagery, and other material that creates unsafe environments, while preserving user privacy by minimizing human review of personal photos.

Content Creation Tools

If you’re building AI-powered image generation or editing tools, content moderation is essential for responsible deployment. Screen generated outputs before delivering them to users to ensure your creative tools aren’t being misused to produce harmful content.
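One way to screen generated outputs before delivery is to wrap the generation call in a gate, roughly as in the sketch below. The `generate` and `risk_score` callables are hypothetical stand-ins for your image model and for a call to the moderation API, and the 0.5 cutoff is an arbitrary example value.

```python
from typing import Callable, Optional


def deliver_if_safe(
    prompt: str,
    generate: Callable[[str], bytes],
    risk_score: Callable[[bytes], float],
    block_at: float = 0.5,
) -> Optional[bytes]:
    """Generate an image and return it only if its risk score is below block_at."""
    image = generate(prompt)
    if risk_score(image) >= block_at:
        return None  # withhold the output; the caller can show a refusal message
    return image


# Stand-ins so the sketch runs without a real model or network access.
def fake_generate(prompt: str) -> bytes:
    return prompt.encode("utf-8")


def fake_risk_score(image: bytes) -> float:
    return 0.9 if b"unsafe" in image else 0.1


print(deliver_if_safe("a sunny beach", fake_generate, fake_risk_score))
print(deliver_if_safe("unsafe request", fake_generate, fake_risk_score))
```

Passing the two callables in as parameters keeps the gate easy to test and lets you swap the moderation backend without touching the delivery logic.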

Gaming and Virtual Worlds

User-created avatars, in-game screenshots, and shared media all require moderation in gaming environments. Real-time image analysis helps maintain community standards without interrupting gameplay or social interactions.

Enterprise Communications

Internal tools and enterprise platforms benefit from content moderation to maintain professional environments and ensure compliance with workplace policies. Automated screening reduces legal exposure while creating safer digital workspaces.

Getting Started

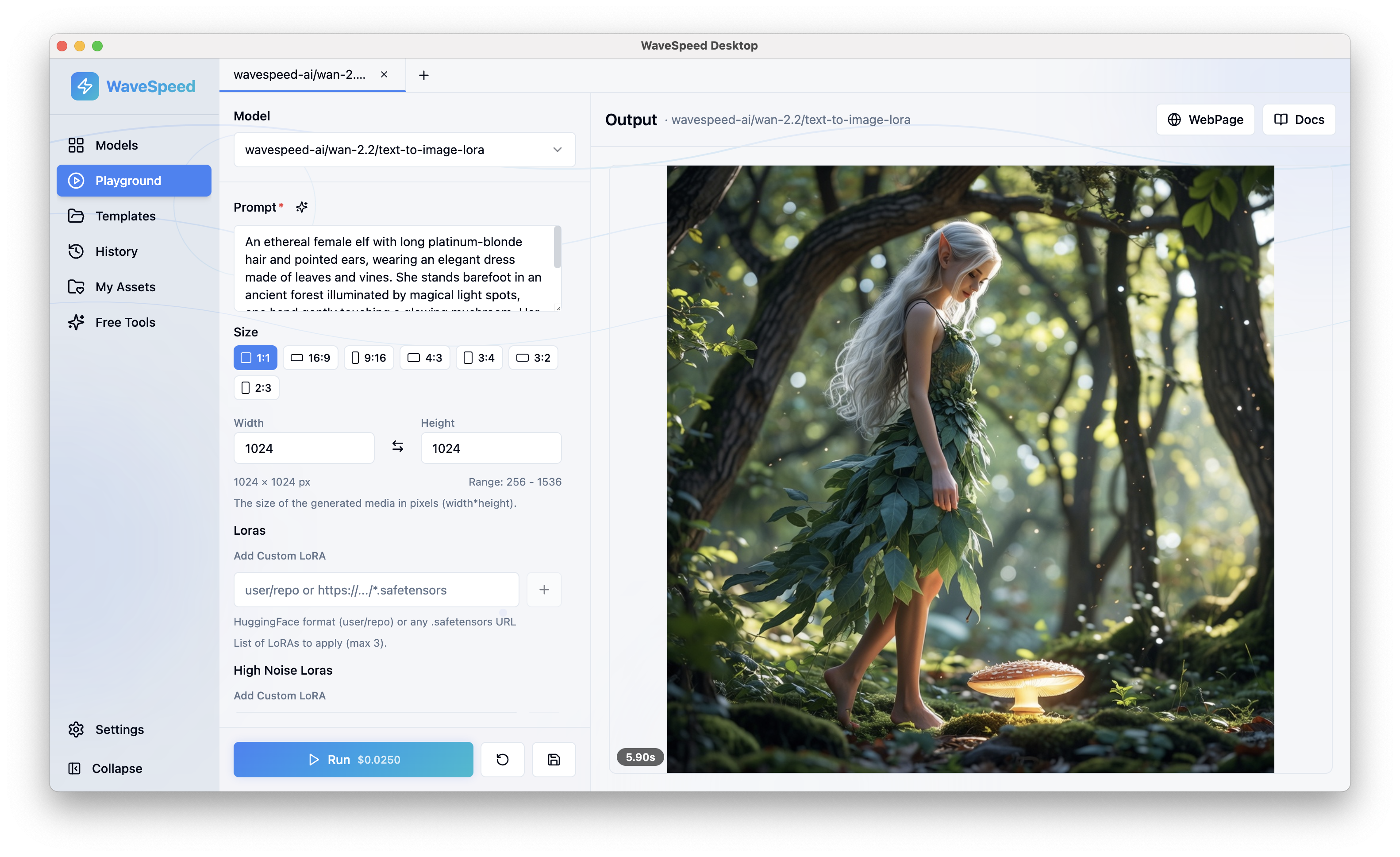

Integrating Image Content Moderator into your application takes just minutes with WaveSpeedAI’s straightforward REST API.

Quick Integration

- Sign up for a WaveSpeedAI account and obtain your API key

- Submit images to the moderation endpoint via URL or base64 encoding

- Parse the response to determine moderation actions based on your policies

- Implement your logic for filtering, flagging, or escalating content
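As a rough illustration of the submission step, the sketch below builds a request for either an image URL or base64-encoded bytes using only the Python standard library. The endpoint URL, the `image` field name, and the Bearer-token header are all placeholders assumed for this example. Take the real endpoint, payload format, and authentication scheme from the WaveSpeedAI API documentation.

```python
import base64
import json
import urllib.request
from typing import Optional

# Placeholder endpoint, NOT the real URL. Replace it with the one from the docs.
ENDPOINT = "https://api.example.com/v1/moderate-image"


def build_request(
    api_key: str,
    image_url: Optional[str] = None,
    image_bytes: Optional[bytes] = None,
) -> urllib.request.Request:
    """Build (but do not send) a moderation request for a URL or raw bytes."""
    if (image_url is None) == (image_bytes is None):
        raise ValueError("pass exactly one of image_url or image_bytes")
    if image_url is not None:
        image_field = image_url
    else:
        image_field = base64.b64encode(image_bytes).decode("ascii")
    payload = {"image": image_field}  # assumed field name
    return urllib.request.Request(
        ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # assumed auth scheme
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 10.0) -> dict:
    """Send the request and decode the JSON body."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.load(resp)


req = build_request("YOUR_API_KEY", image_url="https://example.com/cat.png")
print(req.get_method(), req.full_url)
```

Separating `build_request` from `send` lets you unit-test the payload construction without any network access.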

Example Workflow

A typical integration might look like this:

- User uploads an image to your platform

- Before storing or displaying, send the image to Image Content Moderator

- Receive classification results with confidence scores

- If content is flagged, reject the upload, queue it for human review, or allow it with restrictions

- Log moderation decisions for policy refinement and auditing

The API returns structured data that makes it easy to implement graduated responses, for example allowing borderline content with a warning while immediately blocking clearly violating material.
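A graduated response of this kind might be sketched as a per-category policy table plus an audit record for each decision. The category names, thresholds, and action labels below are assumptions for illustration only. They are not values returned or recommended by the API.

```python
import json
from typing import Dict, Optional, Tuple

# Assumed policy table: category -> (warn_at, block_at). Tune to your own policy.
POLICY: Dict[str, Tuple[float, float]] = {
    "explicit": (0.30, 0.80),
    "violence": (0.40, 0.90),
    "hate_symbols": (0.20, 0.70),
}
DEFAULT_THRESHOLDS = (0.50, 0.90)  # used for categories missing from POLICY
SEVERITY = {"allow": 0, "allow_with_warning": 1, "block": 2}


def decide(scores: Dict[str, float]) -> Tuple[str, Optional[str]]:
    """Return the strictest action across categories and the category behind it."""
    action, trigger = "allow", None
    for category, score in scores.items():
        warn_at, block_at = POLICY.get(category, DEFAULT_THRESHOLDS)
        if score >= block_at:
            candidate = "block"
        elif score >= warn_at:
            candidate = "allow_with_warning"
        else:
            candidate = "allow"
        if SEVERITY[candidate] > SEVERITY[action]:
            action, trigger = candidate, category
    return action, trigger


def audit_record(upload_id: str, scores: Dict[str, float]) -> str:
    """Serialize the decision as one JSON line for later policy review."""
    action, trigger = decide(scores)
    return json.dumps(
        {"upload_id": upload_id, "scores": scores, "action": action, "trigger": trigger},
        sort_keys=True,
    )


print(decide({"explicit": 0.10, "violence": 0.95}))
print(audit_record("upload-42", {"explicit": 0.35}))
```

Logging the raw scores alongside the action makes it possible to replay past decisions against new thresholds when you refine the policy.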

Why Choose WaveSpeedAI for Content Moderation?

Content moderation is a latency-sensitive application. Users expect instant feedback when uploading images, and any delay in the moderation pipeline directly impacts user experience. WaveSpeedAI’s infrastructure is specifically optimized for this challenge:

- No cold starts means the first request of the day is just as fast as the millionth

- Consistent low latency enables synchronous moderation without blocking user flows

- High availability ensures your moderation pipeline never becomes a single point of failure

- Transparent pricing lets you budget accurately without surprise costs
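Even with a fast backend, a synchronous moderation step is usually safer with an explicit deadline, so an unusually slow or failed call cannot stall the upload flow. The sketch below shows one possible pattern: run the call with a timeout and fall back to human review if it does not finish in time. The `moderate` callable, the verdict strings, and the 0.5-second deadline are hypothetical and not part of the WaveSpeedAI API.

```python
import time
from concurrent.futures import ThreadPoolExecutor, TimeoutError as FutureTimeout
from typing import Callable, Tuple

_POOL = ThreadPoolExecutor(max_workers=4)


def moderate_with_deadline(
    moderate: Callable[[bytes], str],
    image: bytes,
    deadline_s: float = 0.5,
) -> Tuple[str, str]:
    """Return (verdict, reason); fall back to review on timeout or error."""
    future = _POOL.submit(moderate, image)
    try:
        return future.result(timeout=deadline_s), "ok"
    except FutureTimeout:
        future.cancel()  # best effort: a call that is already running keeps going
        return "needs_review", "timeout"
    except Exception:
        return "needs_review", "error"


# Stand-ins for a moderation call, so the sketch runs offline.
def fast(image: bytes) -> str:
    return "allow"


def slow(image: bytes) -> str:
    time.sleep(0.3)
    return "allow"


def broken(image: bytes) -> str:
    raise RuntimeError("simulated backend failure")


print(moderate_with_deadline(fast, b"img"))
print(moderate_with_deadline(slow, b"img", deadline_s=0.05))
print(moderate_with_deadline(broken, b"img"))
```

Falling back to review rather than to "allow" is a deliberate fail-closed choice: an outage then delays content instead of letting unscreened images through.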

Conclusion

Building safe online spaces requires robust, scalable content moderation—and Image Content Moderator on WaveSpeedAI delivers exactly that. With real-time analysis, comprehensive detection capabilities, and production-ready reliability, you can protect your platform and users without sacrificing performance or breaking the budget.

Whether you’re launching a new platform or scaling an existing one, automated image moderation is no longer optional—it’s essential infrastructure. Let WaveSpeedAI handle the complexity of AI inference so you can focus on building great products.

Ready to implement automated image moderation? Try Image Content Moderator on WaveSpeedAI and start protecting your platform today.