Introducing WaveSpeedAI Any LLM Vision on WaveSpeedAI

Introducing Any Vision LLM: Unified Access to the World’s Best Multimodal AI Models

The landscape of AI has evolved dramatically with vision-language models (VLMs) becoming essential tools for businesses and developers worldwide. Today, WaveSpeedAI introduces Any Vision LLM—a revolutionary gateway that gives you instant access to a curated catalog of the world’s most powerful multimodal models, all through a single, unified API powered by OpenRouter.

No more juggling multiple API keys. No more switching between providers. Just one endpoint to access GPT-4o, Claude 3.5, Gemini 2.5, Qwen3-VL, Llama 4, and dozens of other cutting-edge vision-language models.

What is Any Vision LLM?

Any Vision LLM is WaveSpeedAI’s flexible multimodal inference solution that connects you to an extensive catalog of vision-language models. Powered by OpenRouter’s robust infrastructure, this service allows you to seamlessly switch between different VLMs based on your specific use case—whether you need GPT-4o’s scientific reasoning, Qwen3-VL’s document understanding, or Gemini 2.5 Pro’s versatile multimodal capabilities.

The 2025 VLM landscape is more competitive than ever. Open-source models like Qwen2.5-VL-72B now perform within 5-10% of proprietary models, while newer releases like Llama 4 Maverick offer 1 million token context windows. With Any Vision LLM, you gain access to this entire ecosystem without the complexity of managing multiple integrations.

Key Features

Unified API Access

- Single endpoint for all vision-language models in the catalog

- OpenAI-compatible interface for seamless integration with existing workflows

- Automatic model routing based on your requirements

Extensive Model Catalog

Access leading VLMs including:

- GPT-4o — 59.9% accuracy on MMMU-Pro benchmarks, excellent for scientific reasoning

- Claude 3.5 Sonnet — Handles complex layouts across 200,000-token contexts

- Gemini 2.5 Pro — Currently leading LMArena leaderboards for vision and coding

- Qwen3-VL — Native 256K context, expandable to 1M tokens, with agentic capabilities

- Llama 4 Maverick — 17B active parameters with 1 million token context window

- Open-source options — Qwen2.5-VL, InternVL3, Molmo, and more

Production-Ready Infrastructure

- No cold starts — Models are always warm and ready

- Fast inference — Optimized for low-latency responses

- Affordable pricing — Pay only for what you use

- 99.9% uptime — Enterprise-grade reliability

Flexible Multimodal Input

- Process images, screenshots, documents, and charts

- Handle multi-image conversations

- Support for PDFs and complex visual layouts

- Multilingual OCR across 30+ languages
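For multi-image conversations, one possible approach is to put several image parts into a single user message, reusing the content format from the Getting Started request. The sketch below assumes the endpoint accepts more than one `image_url` part per message and accepts base64 data URLs for local files; neither is confirmed by this post. `image_part` and `multi_image_message` are hypothetical helper names.

```python
import base64
import mimetypes
from pathlib import Path


def image_part(source: str) -> dict:
    """Return an image content part for a URL or a local file path."""
    if source.startswith(("http://", "https://", "data:")):
        url = source
    else:
        # Local file: inline it as a base64 data URL.
        # Assumption: the endpoint accepts data URLs like other OpenAI-style APIs.
        mime = mimetypes.guess_type(source)[0] or "application/octet-stream"
        encoded = base64.b64encode(Path(source).read_bytes()).decode("ascii")
        url = f"data:{mime};base64,{encoded}"
    return {"type": "image_url", "image_url": {"url": url}}


def multi_image_message(prompt: str, sources: list[str]) -> dict:
    """Build one user message: a text prompt followed by one part per image."""
    content = [{"type": "text", "text": prompt}]
    content.extend(image_part(source) for source in sources)
    return {"role": "user", "content": content}
```

The returned dictionary can be used as an entry in the `messages` list of a request.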

Real-World Use Cases

Document Intelligence & OCR

Extract structured data from invoices, contracts, and forms. Qwen3-VL’s advanced document comprehension handles scientific visual analysis, diagram interpretation, and multilingual OCR with exceptional accuracy. Process thousands of documents without manual data entry.
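As a rough illustration of structured extraction, the sketch below asks the model for a single JSON object and then parses the reply defensively. The field list is a hypothetical invoice schema, the prompt wording is only one option, and `extraction_messages` and `parse_json_reply` are illustrative helper names rather than part of any SDK.

```python
import json
import re

# Hypothetical schema for an invoice; adjust to your own documents.
INVOICE_FIELDS = ["vendor", "invoice_number", "total", "currency"]


def extraction_messages(image_url: str, fields: list[str]) -> list[dict]:
    """Build a request that asks for the given fields as one JSON object."""
    prompt = (
        "Extract the following fields from the document and reply with a "
        "single JSON object and no other text. Use null for missing fields: "
        + ", ".join(fields)
    )
    return [
        {
            "role": "user",
            "content": [
                {"type": "text", "text": prompt},
                {"type": "image_url", "image_url": {"url": image_url}},
            ],
        }
    ]


def parse_json_reply(reply: str, fields: list[str]) -> dict:
    """Parse the reply, tolerating extra text or a code fence around the JSON."""
    match = re.search(r"\{.*\}", reply, flags=re.DOTALL)
    if match is None:
        raise ValueError("no JSON object found in reply")
    data = json.loads(match.group(0))
    # Keep only the requested keys; absent ones become None.
    return {name: data.get(name) for name in fields}
```

Model output is not guaranteed to be valid JSON, so production code would likely want to catch `json.JSONDecodeError` and retry or flag the document for review.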

Customer Support Automation

Build support agents that understand screenshots, error messages, and product images. When users share a photo of a malfunctioning device, your AI can identify components, diagnose issues, and provide step-by-step solutions—all in a single interaction.

E-Commerce & Visual Search

Enhance product discovery with image-based search and recommendations. Organizations using multimodal visual search have seen product page click-through rates improve by 14.2% and add-to-cart rates increase by 8.1%.

Content Moderation & Analysis

Automatically review user-generated content across images and text. Detect policy violations, assess quality, and categorize content at scale with models that understand context and nuance.

Medical & Healthcare Applications

Support clinical workflows by combining medical images with patient notes. VLMs can analyze X-rays, interpret lab results, and assist with diagnostic suggestions—always under physician oversight.

Software Development & UI Assistance

Turn sketches and mockups into code. Qwen3-VL and similar models can interpret UI designs, debug visual interfaces, and assist with software development workflows where screenshots need rapid interpretation.

Field Operations & Maintenance

Empower frontline workers with real-time visual assistance. When technicians photograph equipment issues, multimodal AI can identify parts, annotate problems, retrieve manuals, and guide repairs instantly.

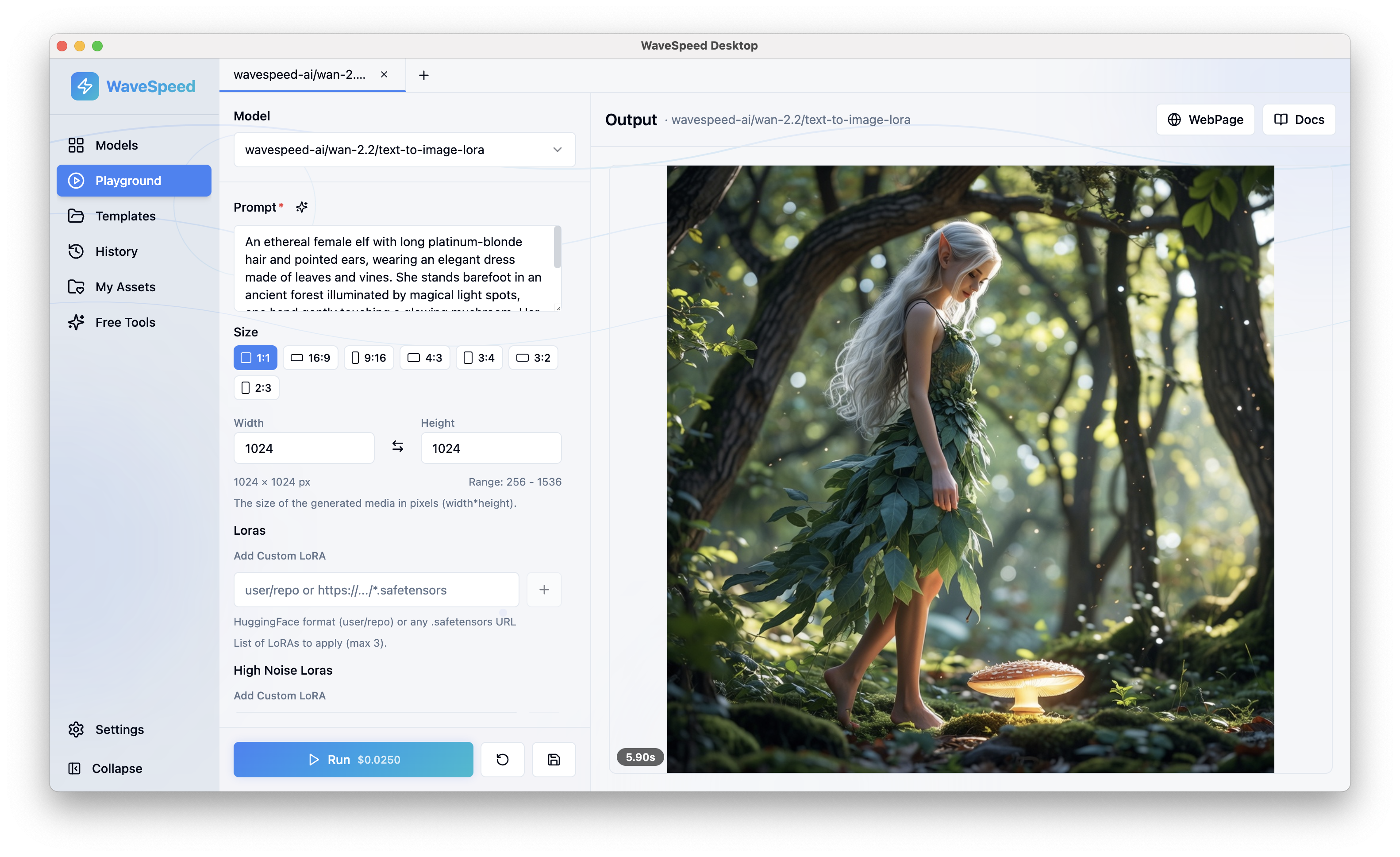

Getting Started with WaveSpeedAI

Integrating Any Vision LLM into your application takes minutes:

1. Get Your API Key

Sign up at WaveSpeedAI and generate your API credentials from the dashboard.

2. Make Your First Request

Use our OpenAI-compatible endpoint to send images and text:

```python
import wavespeed

output = wavespeed.run(
    "wavespeed-ai/any-llm/vision",
    {
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": "What's in this image?"},
                    {"type": "image_url", "image_url": {"url": "https://..."}},
                ],
            }
        ],
    },
)

print(output["outputs"][0])  # Response text
```

3. Choose Your Model

Specify which VLM to use based on your requirements—whether you need maximum accuracy, fastest response, or cost optimization.
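The post does not show how the model is selected, so the following is only a sketch. It assumes the request accepts a `model` field alongside `messages`, and the identifiers in `MODEL_PRESETS` are illustrative placeholders; check the catalog for the real names. The mapping from priority to model is likewise an example, not a recommendation.

```python
# Placeholder identifiers for illustration only; consult the model catalog.
MODEL_PRESETS = {
    "accuracy": "openai/gpt-4o",
    "speed": "google/gemini-2.5-flash",
    "cost": "qwen/qwen2.5-vl-72b-instruct",
}


def build_payload(prompt: str, image_url: str, priority: str = "accuracy") -> dict:
    """Build a request payload whose model is picked by a named priority."""
    if priority not in MODEL_PRESETS:
        raise ValueError(f"unknown priority: {priority!r}")
    return {
        "model": MODEL_PRESETS[priority],  # assumed parameter name
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": prompt},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
    }
```

Under these assumptions, the payload would be passed as the second argument to `wavespeed.run("wavespeed-ai/any-llm/vision", ...)`, as in the first request.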

Why Choose WaveSpeedAI for Multimodal Inference?

Performance Without Compromise

Our infrastructure is optimized for multimodal workloads. Techniques like FP8 quantization deliver 2-3x speed improvements while maintaining model quality.

Flexibility at Scale

Switch between models without code changes. Test GPT-4o for accuracy, then deploy with an open-source alternative for cost efficiency, all through the same API.
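The test-then-deploy workflow described here can be sketched as a small comparison harness that sends the same messages to several models and records each reply with its wall-clock time. The `model` field is an assumed parameter name, and `compare_models` and `fake_run` are hypothetical helpers. The `run` callable is injected so the sketch works offline; in practice `wavespeed.run` would presumably be passed in its place.

```python
import time
from typing import Callable


def compare_models(
    run: Callable[[str, dict], dict],
    messages: list[dict],
    models: list[str],
) -> list[dict]:
    """Send identical messages to each model; collect reply text and timing."""
    results = []
    for model in models:
        payload = {"model": model, "messages": messages}  # "model" key is assumed
        start = time.perf_counter()
        output = run("wavespeed-ai/any-llm/vision", payload)
        elapsed = time.perf_counter() - start
        results.append(
            {"model": model, "seconds": elapsed, "text": output["outputs"][0]}
        )
    return results


def fake_run(endpoint: str, payload: dict) -> dict:
    """Offline stand-in that mimics the output shape of the first request."""
    return {"outputs": [f"reply from {payload['model']}"]}


if __name__ == "__main__":
    question = [{"role": "user", "content": [{"type": "text", "text": "Describe the chart."}]}]
    for row in compare_models(fake_run, question, ["model-a", "model-b"]):
        print(row["model"], f"{row['seconds']:.4f}s", row["text"])
```

Because only the `model` string changes between calls, the model chosen after testing can live in configuration rather than in code.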

Enterprise-Ready

With 99.9% uptime, comprehensive logging, and usage analytics, WaveSpeedAI is built for production workloads. No cold starts mean your applications respond instantly, every time.

Cost-Effective

Avoid the infrastructure costs of self-hosting multiple VLMs. Pay per request with transparent pricing and no hidden fees.

The Future of Multimodal AI is Here

The gap between proprietary and open-source VLMs is closing rapidly. Models like Qwen3-VL now rival GPT-4o and Gemini 2.5 Pro across benchmarks, while lightweight options like Phi-4 bring multimodal capabilities to edge devices.

With Any Vision LLM on WaveSpeedAI, you’re not locked into a single model or provider. As the VLM landscape evolves, your applications automatically gain access to the latest and best models—no migrations required.

Start Building Today

Ready to add powerful vision-language capabilities to your applications? Any Vision LLM gives you instant access to the world’s best multimodal models through a single, reliable API.

Try Any Vision LLM on WaveSpeedAI →

Join thousands of developers who trust WaveSpeedAI for fast, affordable, and reliable AI inference. No cold starts. No complexity. Just results.