Introducing ByteDance LatentSync on WaveSpeedAI: The Future of AI-Powered Lip Synchronization

The world of AI video generation has just taken a massive leap forward. We’re thrilled to announce that ByteDance LatentSync is now available on WaveSpeedAI, bringing state-of-the-art lip synchronization technology to creators, studios, and developers worldwide. Whether you’re dubbing content for global audiences, creating virtual avatars, or producing educational videos, LatentSync delivers the most realistic and temporally consistent lip-sync results available today.

What is ByteDance LatentSync?

LatentSync represents a fundamental breakthrough in how AI approaches lip synchronization. Unlike traditional methods that rely on intermediate motion representations or two-stage generation pipelines, LatentSync is an end-to-end framework built on audio-conditioned latent diffusion models.

At its core, LatentSync harnesses the powerful capabilities of Stable Diffusion to directly model complex audio-visual correlations. The system uses OpenAI’s Whisper model to convert speech into rich audio embeddings, which are then integrated into the U-Net architecture through cross-attention layers. This direct approach eliminates the artifacts and quality loss that typically occur when translating between intermediate representations.
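The cross-attention step described above can be illustrated with a minimal sketch. It is not LatentSync's actual code: the learned query, key, and value projections are omitted, the vectors are toy data, and the function names are made up for this example. It only shows how each visual latent position mixes in information from every audio embedding token.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(visual_queries, audio_keys, audio_values):
    """Each visual query attends over all audio tokens.

    Assumption: the learned projections (W_q, W_k, W_v) of a real U-Net
    are left out so the sketch stays short.
    """
    dim = len(audio_keys[0])
    outputs = []
    for q in visual_queries:
        # Scaled dot-product score between this query and every audio key.
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim)
            for k in audio_keys
        ]
        weights = softmax(scores)
        # Weighted average of the audio value vectors.
        mixed = [
            sum(w * v[j] for w, v in zip(weights, audio_values))
            for j in range(len(audio_values[0]))
        ]
        outputs.append(mixed)
    return outputs

# Toy data: 2 visual latent positions, 3 audio tokens, dimension 2.
visual = [[1.0, 0.0], [0.0, 1.0]]
audio_k = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
audio_v = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
attended = cross_attention(visual, audio_k, audio_v)
```

Each row of `attended` is a weighted blend of the audio values, with the largest weights going to the audio tokens whose keys best match that visual position.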

What truly sets LatentSync apart is its innovative TREPA (Temporal REPresentation Alignment) mechanism—a novel technique developed by ByteDance researchers to solve one of the most persistent challenges in diffusion-based video generation: temporal consistency.

Key Features and Capabilities

End-to-End Diffusion Architecture

LatentSync bypasses the need for intermediate motion representations entirely. By leveraging latent-space diffusion, the model generates natural, smooth lip movements that seamlessly match any input audio. This approach delivers superior visual quality compared to pixel-space diffusion methods.

TREPA for Temporal Consistency

Diffusion models have historically struggled with flickering artifacts—particularly visible in high-frequency details like teeth, lips, and facial hair. TREPA addresses this by aligning temporal representations extracted from large-scale self-supervised video models (specifically VideoMAE-v2) between generated and ground truth frames. The result is remarkably stable video output that eliminates the distracting inconsistencies common in other solutions.
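To make the idea concrete, here is a toy sketch of a temporal alignment loss. It is an illustration under loud assumptions, not the TREPA implementation: frame-to-frame pixel differences stand in for the VideoMAE-v2 representations, mean squared error stands in for whatever distance the real model uses, and both function names are hypothetical.

```python
def temporal_features(frames):
    """Stand-in for a video encoder: differences between consecutive frames.

    Assumption: the real method uses VideoMAE-v2 features; this toy
    function only keeps the sketch self-contained.
    """
    return [
        [b - a for a, b in zip(prev, nxt)]
        for prev, nxt in zip(frames, frames[1:])
    ]

def temporal_alignment_loss(generated, ground_truth):
    """Mean squared distance between the temporal features of two clips."""
    gen_feats = temporal_features(generated)
    gt_feats = temporal_features(ground_truth)
    squared = [
        (g - t) ** 2
        for gen_row, gt_row in zip(gen_feats, gt_feats)
        for g, t in zip(gen_row, gt_row)
    ]
    return sum(squared) / len(squared)

# Each frame is a flat list of pixel intensities (3 frames, 2 pixels each).
ground_truth = [[0.0, 0.0], [0.1, 0.1], [0.2, 0.2]]
steady = [[0.5, 0.5], [0.6, 0.6], [0.7, 0.7]]      # same motion, brighter
flickering = [[0.0, 0.0], [0.4, 0.0], [0.2, 0.2]]  # middle frame jumps

loss_steady = temporal_alignment_loss(steady, ground_truth)
loss_flicker = temporal_alignment_loss(flickering, ground_truth)
```

The flickering clip is penalized while the steady clip is not, even though the steady clip is uniformly brighter. That is why a temporal term like this would sit alongside per-frame losses rather than replace them.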

Industry-Leading Accuracy

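The LatentSync paper reports that the method surpasses state-of-the-art lip-sync approaches across multiple evaluation metrics on the HDTF and VoxCeleb2 benchmark datasets. It also raises the accuracy of SyncNet, the network that supervises lip-sync quality during training, from 91% to 94% on the HDTF test set. This precision translates directly into more believable results for your projects.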

Multi-Format Support

The WaveSpeedAI endpoint supports MP4 video input and accepts audio in MP3, AAC, WAV, and M4A formats—covering virtually all common media workflows without additional conversion steps.
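A small pre-flight check can catch unsupported files before you upload anything. The sketch below encodes only the formats listed above; the function and constant names are hypothetical, and it inspects file extensions only, not the actual codecs inside the files.

```python
from pathlib import PurePosixPath

# Formats taken from the list above.
SUPPORTED_VIDEO = {".mp4"}
SUPPORTED_AUDIO = {".mp3", ".aac", ".wav", ".m4a"}

def check_inputs(video_name, audio_name):
    """Return a list of problems; an empty list means both files look fine."""
    problems = []
    video_ext = PurePosixPath(video_name).suffix.lower()
    audio_ext = PurePosixPath(audio_name).suffix.lower()
    if video_ext not in SUPPORTED_VIDEO:
        problems.append(f"unsupported video format: {video_ext or '(none)'}")
    if audio_ext not in SUPPORTED_AUDIO:
        problems.append(f"unsupported audio format: {audio_ext or '(none)'}")
    return problems
```

For example, `check_inputs("talk.mp4", "voice.WAV")` returns an empty list, while a `.mov` video or `.ogg` audio file would each add a message.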

Universal Character Support

From photorealistic human faces to animated characters and anime-style visuals, LatentSync adapts its algorithms to ensure accurate lip synchronization across different visual styles. This versatility opens up possibilities for entertainment, gaming, and creative applications alike.

High-Resolution Output

As of LatentSync 1.6, the model is trained on 512×512 resolution videos, which mitigates the blurriness seen in earlier versions. Your output maintains the crisp, professional quality that modern content demands.

Real-World Use Cases

Film Dubbing and Localization

Transform your content for global audiences without costly reshoots. LatentSync enables studios to dub movies, TV shows, and documentaries into any language while maintaining perfect lip synchronization. International distributors can deliver a native viewing experience that feels authentic to every market.

Content Creation and Social Media

YouTube creators, TikTok influencers, and social media managers can produce multilingual content at scale. Repurpose a single video into dozens of language versions, each with precise lip movements matching the localized audio.

Educational Content

E-learning platforms can create instructor-led courses that speak directly to students in their native language. The precise synchronization ensures that educational videos maintain their professional appearance and pedagogical effectiveness across all localizations.

Virtual Avatars and Digital Humans

Game developers and virtual production teams can bring NPCs, virtual spokespersons, and digital humans to life with natural speech patterns. LatentSync makes avatar-based communication more immersive and believable than ever before.

Corporate Communications

Produce personalized video messages, training materials, and executive communications at scale. Generate multiple language versions of promotional content while maintaining the authentic presence of your speakers.

Advertising and Marketing

Create localized ad campaigns that resonate with regional audiences. Virtual spokespersons can deliver your message in any language with the natural lip movements that build trust and engagement.

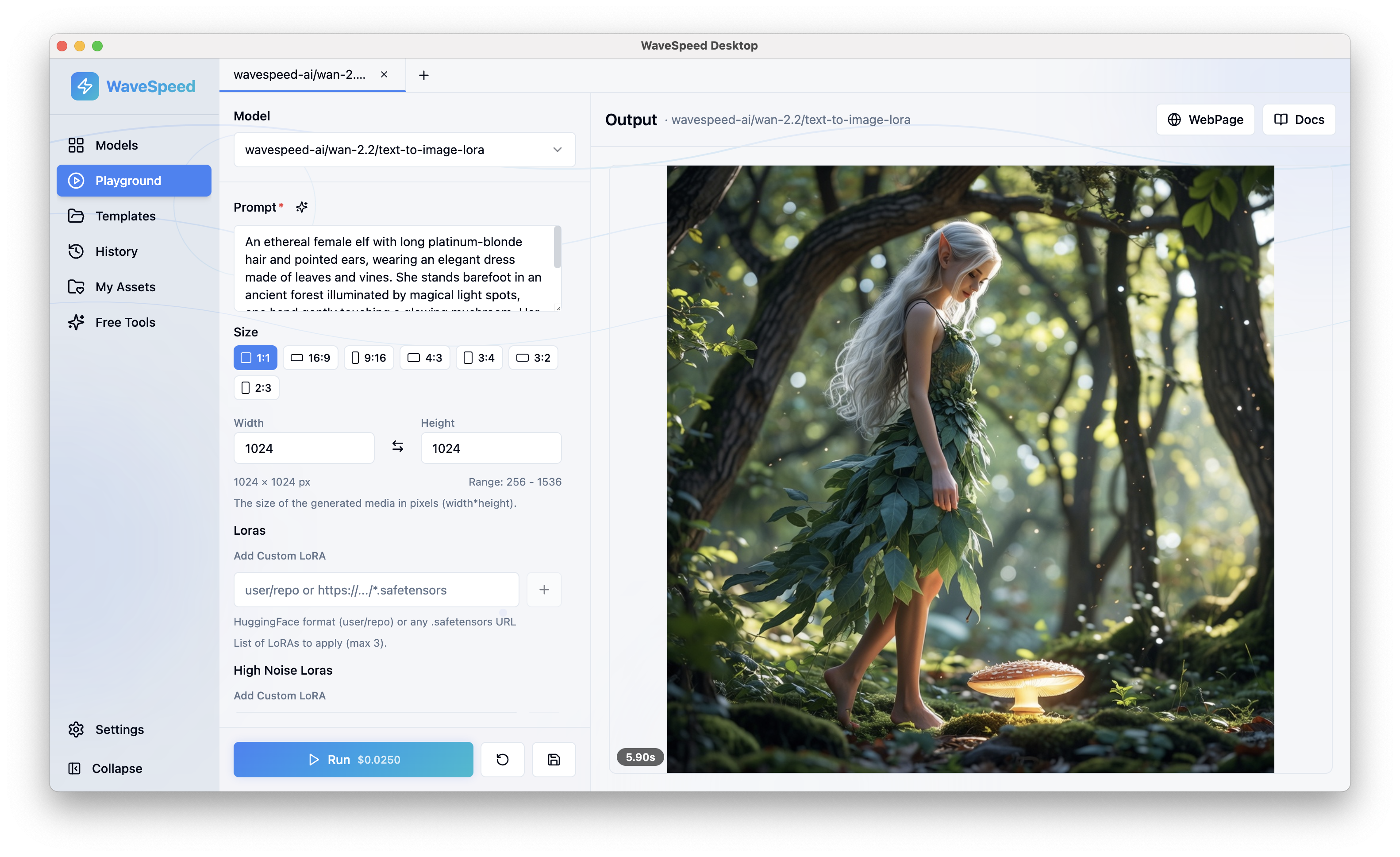

Getting Started on WaveSpeedAI

Using LatentSync through WaveSpeedAI couldn’t be simpler. Our REST API provides instant access to ByteDance’s powerful lip-sync technology with the performance and reliability your production workflows demand.

Why choose WaveSpeedAI for LatentSync?

- No Cold Starts: Our infrastructure keeps models warm and ready, so you never wait for initialization. Your requests begin processing immediately.

- Best-in-Class Performance: WaveSpeedAI’s optimized inference pipeline delivers results faster than self-hosted alternatives, without the complexity of managing GPU infrastructure.

- Affordable Pricing: Pay only for what you use, with transparent pricing that scales with your needs. No minimum commitments or hidden fees.

- Simple Integration: A clean REST API means you can integrate LatentSync into your existing workflows in minutes. Upload your video, provide your audio, and receive perfectly synchronized results.

To get started, simply visit LatentSync on WaveSpeedAI, explore the API documentation, and begin generating professional-grade lip-synced content today.
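As a rough idea of what an integration might look like, the sketch below prepares a JSON POST request using only the Python standard library. Everything specific in it is an assumption for illustration: the endpoint URL is a placeholder, and the `video` and `audio` field names and the Bearer-token header are guesses. Check the WaveSpeedAI API documentation for the real path, parameters, and authentication scheme.

```python
import json
import urllib.request

# Hypothetical placeholder: replace with the endpoint from the API docs.
ENDPOINT = "https://api.example.invalid/latentsync"

def build_lipsync_request(api_key, video_url, audio_url):
    """Prepare (but do not send) a JSON POST request.

    Assumption: the "video" and "audio" field names and the Bearer
    header are illustrative, not confirmed by the documentation.
    """
    body = json.dumps({"video": video_url, "audio": audio_url}).encode("utf-8")
    return urllib.request.Request(
        ENDPOINT,
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )

request = build_lipsync_request(
    "YOUR_API_KEY",
    "https://example.com/input.mp4",
    "https://example.com/dubbed.wav",
)
# Sending it would look like: urllib.request.urlopen(request, timeout=60)
```

Keeping request construction separate from sending makes the payload easy to inspect and test before any credits are spent.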

The Bottom Line

ByteDance LatentSync represents a genuine advancement in AI lip synchronization technology. By combining the generative power of Stable Diffusion with the temporal consistency innovations of TREPA, it delivers results that were simply not possible with previous approaches. Its improved SyncNet supervision (94% accuracy on the HDTF test set), support for both real and animated faces, and sharply reduced temporal flickering make it one of the most capable open-source lip-sync solutions available.

Now, with LatentSync available on WaveSpeedAI, you can access this cutting-edge technology through a fast, reliable API with no infrastructure headaches. Whether you’re localizing content for millions of viewers or creating the next generation of virtual experiences, LatentSync provides the foundation for lip synchronization that truly convinces.

Ready to transform your video content? Try ByteDance LatentSync on WaveSpeedAI today and experience the future of AI-powered lip synchronization.