Introducing ByteDance Avatar Omni Human 1.5 on WaveSpeedAI

Introducing ByteDance Avatar OmniHuman 1.5: The Future of AI-Powered Digital Humans

The line between human and digital has never been thinner. ByteDance’s OmniHuman 1.5 is a major leap in avatar animation technology. It turns a single static image into a lifelike digital human that doesn’t just move but reacts and expresses emotion in step with what it is saying. Now available on WaveSpeedAI, the model expands what’s possible in virtual human creation.

What is OmniHuman 1.5?

OmniHuman 1.5 is an advanced vision-audio fusion model that animates avatars by simulating cognitive and emotional behavior. Traditional lip-sync tools only match mouth movements to audio. OmniHuman 1.5 also models the semantic content and emotional context of speech, generating natural facial expressions, synchronized lip movements, and emotional responses that match what’s being said.

The technology is built on a dual-system architecture inspired by the “System 1 and System 2” theory from cognitive science. The model combines fast, intuitive reactions (System 1) with slow, deliberate planning (System 2), loosely mirroring how human thinking is often described. The result is digital humans whose gestures, pauses, and expressions fit the context of the spoken content.

When your audio mentions a “heartfelt confession,” OmniHuman 1.5 doesn’t just move the lips; it generates facial expressions and body language that reflect sincere emotion. This semantic understanding is what sets it apart from conventional avatar animation tools that respond only to the sound of speech.

Key Features

Audio-Driven Realism with Cognitive Depth: OmniHuman 1.5 generates precise lip-sync and emotional nuance directly from voice input, but goes beyond simple audio matching. The model leverages Multimodal Large Language Models to synthesize structured representations that provide high-level semantic guidance, enabling contextually and emotionally resonant actions.

Expressive Cognitive Simulation: The model creates subtle eye movements, micro-expressions, and reactive behaviors that emulate genuine human presence. In ByteDance’s reported user studies, human evaluators preferred OmniHuman 1.5 over competing methods for naturalness, plausibility, and semantic alignment.

Universal Avatar Adaptation: Works with a wide range of static portraits and illustrations, including realistic photographs, anime characters, illustrated portraits, and artistic renderings. Whether you’re creating a corporate AI spokesperson or an anime AI influencer, OmniHuman 1.5 adapts to your visual style.

Extended Generation Capabilities: Generate videos over one minute long with highly dynamic motion, continuous camera movement, and complex multi-character interactions. The model supports prompt control for camera movements, object generation, and specific actions.

Cross-Domain Versatility: OmniHuman 1.5 handles both photorealistic and stylized avatars, adapting its realism to match the visual style. It works across humans, animals, anthropomorphic figures, and stylized cartoons.

Flexible Integration Options: Receive results either as a URL or as BASE64-encoded data for seamless API integration into your applications and workflows.
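If you consume the API from code, the two output modes need slightly different handling. The sketch below is a minimal Python example, assuming the service returns either an http(s) URL or a Base64 string (possibly wrapped in a `data:` URI). The helper name `video_bytes_from_output` is hypothetical, and the exact response format should be checked against WaveSpeedAI’s API documentation.

```python
import base64
import binascii
import urllib.request


def video_bytes_from_output(output: str, timeout: float = 60.0) -> bytes:
    """Return raw video bytes from either output mode (hypothetical helper).

    ASSUMPTION: `output` is an http(s) URL (URL mode) or a Base64 string,
    optionally prefixed like "data:video/mp4;base64," (BASE64 mode).
    """
    if output.startswith(("http://", "https://")):
        # URL mode: download the file hosted by the service.
        with urllib.request.urlopen(output, timeout=timeout) as resp:
            return resp.read()
    # BASE64 mode: drop an optional data-URI header, then decode strictly
    # so that stray characters raise instead of being silently skipped.
    payload = output.split(",", 1)[1] if output.startswith("data:") else output
    try:
        return base64.b64decode(payload, validate=True)
    except binascii.Error as exc:
        raise ValueError("output is neither a URL nor valid Base64") from exc
```

Writing the result to disk is then a single `Path("avatar.mp4").write_bytes(...)` call, whichever mode you chose.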

Real-World Use Cases

Digital Avatars and VTubing: Drive realistic avatars from real voices with natural expressions and body language. Content creators can build engaging virtual personas that respond authentically to their voice, complete with appropriate emotional reactions and gestures.

Virtual Humans and NPCs: Give game characters and metaverse inhabitants believable cognitive reactions. OmniHuman 1.5 enables NPCs that don’t just recite dialogue but deliver it with natural, human-like presence, improving player immersion.

Marketing and Storytelling: Create expressive digital spokespeople and narrators for brand campaigns. The model acts as an “AI director,” producing cinematic, personalized video content that previously required large production teams and substantial budgets.

AI Companions and Education: Build avatars that engage naturally in learning contexts and dialogue situations. Educational platforms can create virtual instructors who respond with appropriate emotion and expression, making learning more engaging and personal.

Accessibility Solutions: Generate sign language avatars or visual communication aids that convey emotion alongside information, creating more inclusive digital experiences.

Independent Content Production: Smaller studios and independent creators can now produce content that previously required larger teams. OmniHuman 1.5 significantly reduces the quality gap between large studio productions and independent content creators.

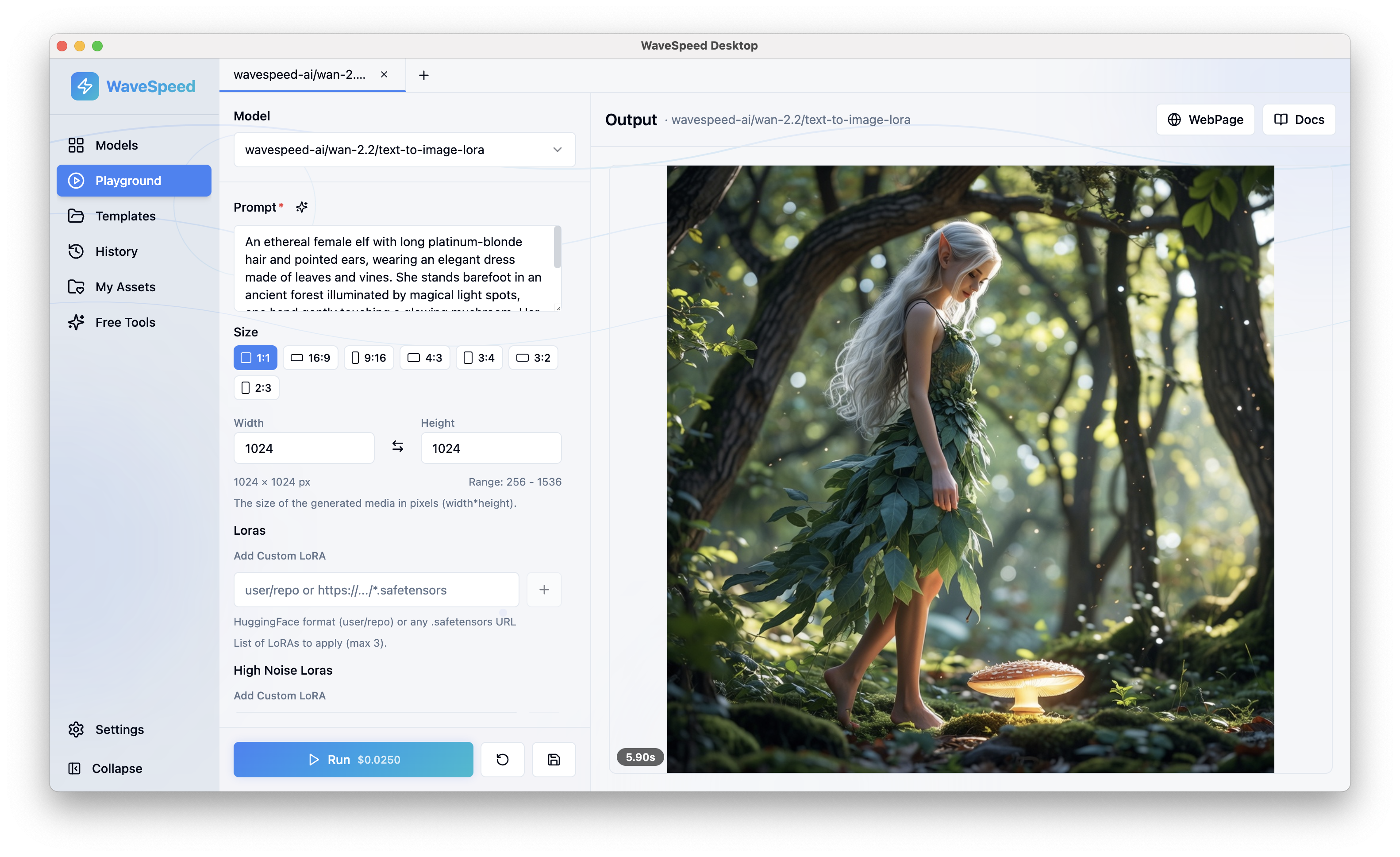

Getting Started on WaveSpeedAI

Using OmniHuman 1.5 on WaveSpeedAI is straightforward:

- Prepare Your Assets: Upload a reference portrait or character image (JPG/PNG) and an audio file (WAV/MP3) for lip-sync and emotion mapping. For best results, use clear, high-quality audio and well-lit frontal images.

- Call the API: WaveSpeedAI provides a ready-to-use REST inference API. Simply send your image and audio to the endpoint, and receive your animated avatar video.

- Integrate Seamlessly: Choose URL output for direct linking or BASE64 encoding for embedding directly into web applications.
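The steps above can be sketched in Python using only the standard library. This is a minimal sketch under several assumptions that do not come from this article: the base URL, the model path `bytedance/avatar-omni-human-1.5`, the JSON field names `image` and `audio`, the submit-then-poll flow with a `predictions/{id}/result` endpoint, and the `WAVESPEED_API_KEY` environment variable are all illustrative, so check WaveSpeedAI’s API reference for the real values. It also assumes your image and audio are already hosted at public URLs.

```python
import json
import os
import time
import urllib.parse
import urllib.request

# ASSUMPTIONS: base URL, model path, field names and response shape are
# illustrative only; confirm them in WaveSpeedAI's API reference.
API_BASE = "https://api.wavespeed.ai/api/v3"
MODEL_PATH = "bytedance/avatar-omni-human-1.5"  # hypothetical model id

IMAGE_TYPES = {".jpg", ".jpeg", ".png"}  # formats named in step 1
AUDIO_TYPES = {".wav", ".mp3"}


def check_asset(url: str, allowed: set) -> None:
    """Step 1: reject files whose extension is not a supported format."""
    suffix = os.path.splitext(urllib.parse.urlparse(url).path)[1].lower()
    if suffix not in allowed:
        raise ValueError(f"unsupported file type {suffix!r}: {url}")


def build_request(image_url: str, audio_url: str, api_key: str) -> urllib.request.Request:
    """Step 2: build the POST request that submits one image + audio pair."""
    check_asset(image_url, IMAGE_TYPES)
    check_asset(audio_url, AUDIO_TYPES)
    body = json.dumps({"image": image_url, "audio": audio_url}).encode()
    return urllib.request.Request(
        f"{API_BASE}/{MODEL_PATH}",
        data=body,
        method="POST",
        headers={"Authorization": f"Bearer {api_key}",
                 "Content-Type": "application/json"},
    )


def generate(image_url: str, audio_url: str, poll_seconds: float = 2.0) -> list:
    """Submit a job, then poll until it finishes (assumed async task flow)."""
    api_key = os.environ["WAVESPEED_API_KEY"]  # hypothetical variable name
    with urllib.request.urlopen(build_request(image_url, audio_url, api_key)) as resp:
        task_id = json.load(resp)["data"]["id"]
    poll = urllib.request.Request(
        f"{API_BASE}/predictions/{task_id}/result",
        headers={"Authorization": f"Bearer {api_key}"},
    )
    while True:
        with urllib.request.urlopen(poll) as resp:
            data = json.load(resp)["data"]
        if data["status"] == "completed":
            return data["outputs"]  # step 3: URLs or BASE64 strings
        if data["status"] == "failed":
            raise RuntimeError(data.get("error") or "generation failed")
        time.sleep(poll_seconds)
```

Polling at a fixed interval keeps the sketch short; a production client would add an overall timeout and backoff between polls.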

Pricing That Makes Sense

OmniHuman 1.5 on WaveSpeedAI is priced at $0.25 per second of generated video—making professional-quality avatar animation accessible for projects of any size. With no cold starts and consistently fast inference, you can iterate quickly without waiting or paying for idle resources.
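Because billing is per second of output, cost scales linearly with video length. A small Python helper makes budgeting explicit. It simply multiplies the duration by the $0.25 rate quoted above and rounds to cents. It assumes no minimum charge and no rounding of partial seconds, which you should confirm against WaveSpeedAI’s current pricing.

```python
from decimal import Decimal, ROUND_HALF_UP

PRICE_PER_SECOND_USD = Decimal("0.25")  # rate quoted in this article


def estimate_cost(duration_seconds: float) -> Decimal:
    """Estimated USD cost of one clip (assumes exact per-second billing)."""
    if duration_seconds < 0:
        raise ValueError("duration must be non-negative")
    # str() avoids binary floating-point artifacts when converting to Decimal.
    cost = Decimal(str(duration_seconds)) * PRICE_PER_SECOND_USD
    return cost.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


# A one-minute clip:
print(estimate_cost(60))  # 15.00
```

Using `Decimal` rather than `float` keeps currency amounts exact when summing costs across many clips.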

Why WaveSpeedAI?

- No Cold Starts: Your API calls execute immediately, every time

- Fast Inference: Get results quickly without sacrificing quality

- Affordable Pricing: Pay only for what you generate

- Simple Integration: Clean REST API that works with any stack

- Reliable Performance: Consistent, production-ready infrastructure

Conclusion

OmniHuman 1.5 represents a fundamental shift in avatar animation technology. By bringing cognitive simulation to digital humans, ByteDance has created a model whose avatars have a convincing sense of presence: characters whose expressions and gestures respond to what they’re saying, not just to how it sounds.

For content creators, marketers, game developers, and enterprises building virtual human experiences, OmniHuman 1.5 delivers a new level of quality and expressiveness. Its combination of semantic understanding, emotional authenticity, and broad style adaptation makes it one of the most capable avatar animation models available today.

Ready to bring your digital humans to life? Try OmniHuman 1.5 on WaveSpeedAI and experience the future of AI-powered avatar animation.