Stop Training, Start Creating: Use LoRA on WaveSpeedAI

Introduction

What is LoRA? LoRA (Low-Rank Adaptation) is a lightweight fine-tuning method. Instead of retraining the entire model, you add a small set of adapter weights on top of an existing model to lock in your own style, which is faster and cheaper.

In this tutorial, we’ll start from scratch, show you how to find LoRA models you love online, and use them on WaveSpeedAI. Even if you’re new, you’ll be up and running in no time.

Model Selection

When creating images and videos with AIGC, we can usually only control the model through prompts, which makes fine details hard to manage. If you rely on the model to “understand on its own” things like hand poses, fabric folds, or clothing elements, the results are often unsatisfactory.

This is where open platforms come in. You can find LoRA models shared by creators that cover everything from overall art style and camera texture to specific poses, outfits, and small accessories. A targeted LoRA can enhance details and give you more control without retraining a model.

However, remember one important rule when selecting a LoRA: it must exactly match the base model you use, meaning the same model name, the same version, and the same parameter size.

For example, a LoRA designed for Wan 2.2 cannot be used on Wan 2.1 or any other model. Similarly, a Wan 2.2 14B LoRA cannot be used on Wan 2.2 5B.

If these don’t match, the style might shift at best. At worst, you could encounter errors. Always double-check the information on the model page before using it!
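
The matching rule is really a three-part checklist. The sketch below spells it out; `ModelSpec` and `lora_is_compatible` are hypothetical names used only for illustration (WaveSpeedAI does not expose such a function), and the values are assumed to be copied by hand from the model page.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelSpec:
    """Base-model identity as read off a model page (hypothetical helper type)."""
    name: str     # e.g. "Wan"
    version: str  # e.g. "2.2"
    params: str   # e.g. "14B"


def lora_is_compatible(lora_base: ModelSpec, target: ModelSpec) -> bool:
    """True only if name, version, and parameter size all match exactly."""
    return (
        lora_base.name.casefold() == target.name.casefold()
        and lora_base.version == target.version
        and lora_base.params.upper() == target.params.upper()
    )


wan22_14b = ModelSpec("Wan", "2.2", "14B")
print(lora_is_compatible(wan22_14b, ModelSpec("Wan", "2.1", "14B")))  # False: version differs
print(lora_is_compatible(wan22_14b, ModelSpec("Wan", "2.2", "5B")))   # False: size differs
print(lora_is_compatible(wan22_14b, ModelSpec("wan", "2.2", "14b")))  # True: same model
```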

Double Check the Version and Parameters

**P.S.** On WaveSpeedAI, a LoRA runs from a single .safetensors file. Just import it and you’re set. Avoid PickleTensor, .zip, GGUF, and other formats, because WaveSpeedAI doesn’t support them.

Watch the file size, too. LoRAs are usually under 2 GB (often just a few hundred MB). If your upload is significantly larger, you may have selected the wrong file (such as the complete base model or a zipped bundle), and the import will fail. Double-check the filename and extension before trying again!
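
If you keep LoRA files locally, you can pre-check them before uploading. This is a minimal sketch, not WaveSpeedAI’s own validation: the ~2 GB threshold is the rule of thumb above, and the header check follows the published safetensors layout (an 8-byte little-endian header length followed by a JSON header). `check_lora_file` is a hypothetical helper name.

```python
import json
import os
import struct
import tempfile

# Rule of thumb from this article: LoRA files are usually well under ~2 GB.
MAX_LORA_BYTES = 2 * 1024**3


def check_lora_file(path: str) -> list:
    """Return a list of problems; an empty list means the file looks like a plausible LoRA."""
    problems = []
    if not path.lower().endswith(".safetensors"):
        problems.append("extension is not .safetensors")
    size = os.path.getsize(path)
    if size > MAX_LORA_BYTES:
        problems.append(f"file is {size / 1024**3:.1f} GB; maybe a full base model or bundle")
    # safetensors layout: an 8-byte little-endian header length,
    # then that many bytes of JSON describing the stored tensors.
    with open(path, "rb") as f:
        prefix = f.read(8)
        if len(prefix) < 8:
            problems.append("file too short to be .safetensors")
            return problems
        (header_len,) = struct.unpack("<Q", prefix)
        if header_len > size - 8:
            problems.append("header length exceeds file size")
            return problems
        try:
            json.loads(f.read(header_len))
        except ValueError:  # covers JSONDecodeError and UnicodeDecodeError
            problems.append("header is not valid JSON")
    return problems


# Demo: write a tiny, tensor-free .safetensors file and check it.
with tempfile.TemporaryDirectory() as tmp:
    demo = os.path.join(tmp, "demo.safetensors")
    header = json.dumps({"__metadata__": {"note": "demo"}}).encode("utf-8")
    with open(demo, "wb") as f:
        f.write(struct.pack("<Q", len(header)) + header)
    print(check_lora_file(demo))  # []
```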

Here are two commonly used platforms: Civitai and Hugging Face.

Civitai Platform

Hugging Face Platform

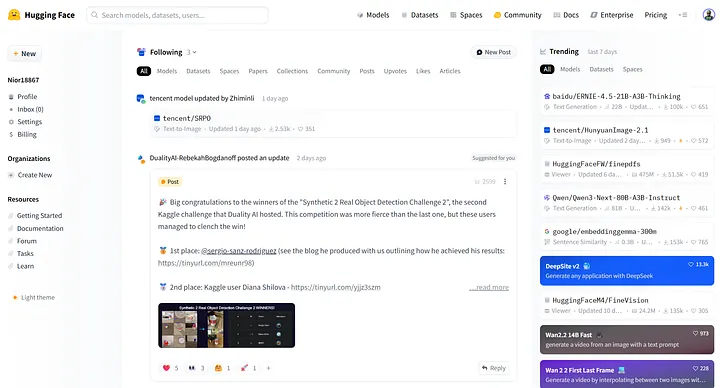

LoRA on Hugging Face

Hugging Face is one of the world’s largest open-source model hubs, offering a vast catalog of models and datasets. You can search for LoRAs and find official weights and inference guides for popular base models.

In this part, we’ll focus on how to find and select a LoRA on Hugging Face and then use it on WaveSpeedAI.

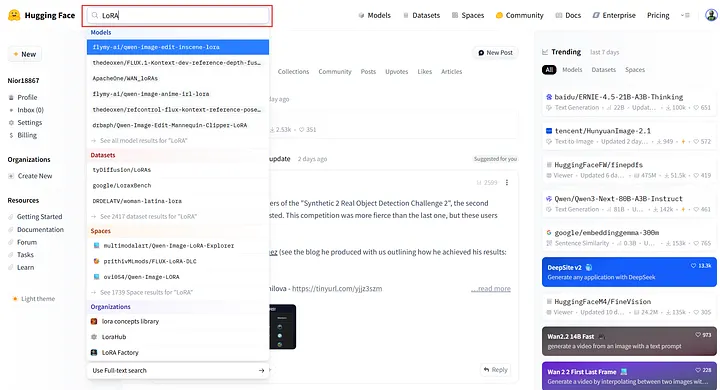

Begin by typing LoRA into the search bar at the top of the site to view related repositories.

Search for LoRA

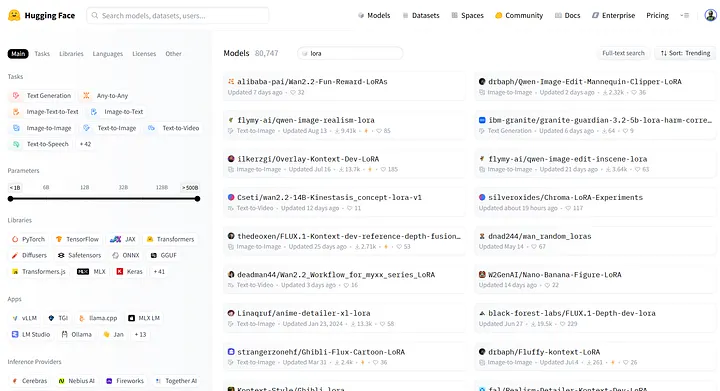

Next, click See all model results for “LoRA” to view the full LoRA results page.

For your own searches, include qualifiers like the base model name, version, and parameter size (e.g., 7B/14B). This narrows the search and shows more relevant results.

Model Results Page

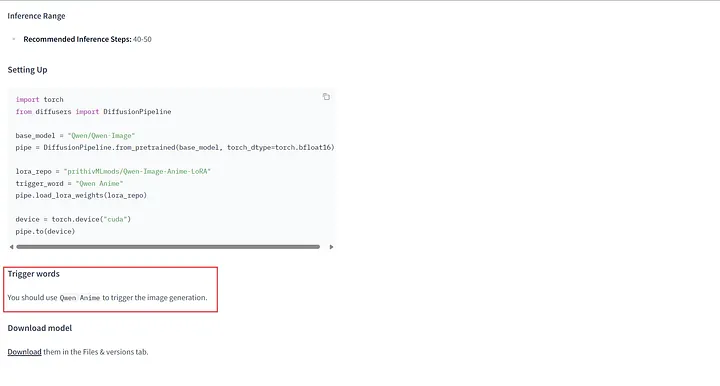

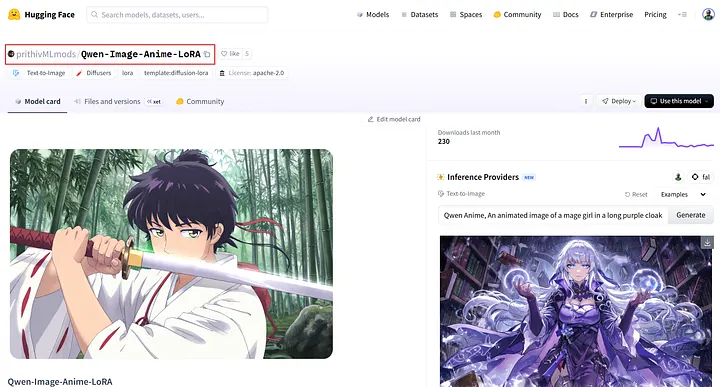

On Hugging Face, LoRA models usually specify the compatible base model and parameter size in the title or description.

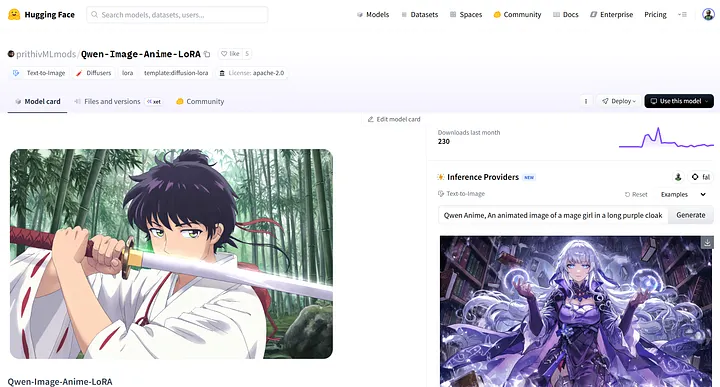

For example, prithivMLmods/Qwen-Image-Anime-LoRA is a LoRA created for Qwen-Image and used to generate Japanese anime–style images.

prithivMLmods/Qwen-Image-Anime-LoRA

As shown on the page, Qwen-Image-Anime-LoRA is published by prithivMLmods and is specifically designed for the Qwen-Image base model.

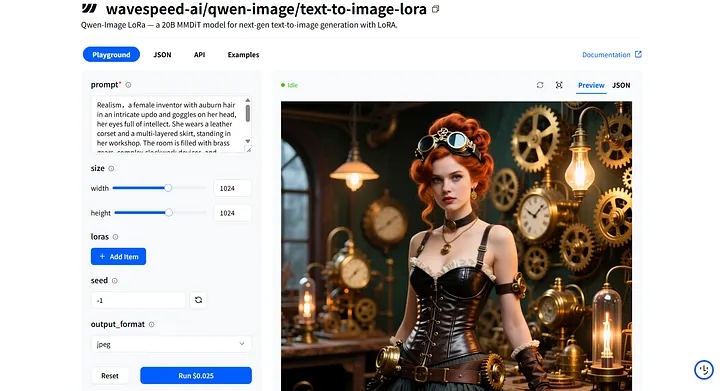

Next, switch to WaveSpeedAI and open the wavespeed-ai/qwen-image/text-to-image-lora model. We’ll use it to load and run this LoRA.

wavespeed-ai/qwen-image/text-to-image-lora

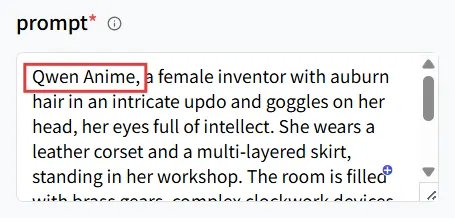

On the model’s Playground page, you’ll find the prompt input field to enter your prompt, along with the loras section for adding a LoRA model.

When writing your prompt, besides clearly describing the scene, style, and details you want, remember to include the LoRA’s trigger word! You can usually find this information on the Hugging Face page in the Model Card.

For instance, on the prithivMLmods/Qwen-Image-Anime-LoRA model page, scroll down the Model Card to find additional details, such as how to use the model and the exact trigger word required.

Trigger words in Model card

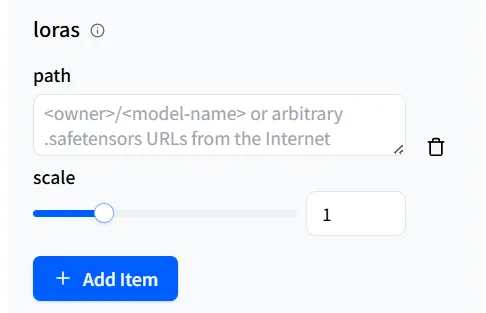

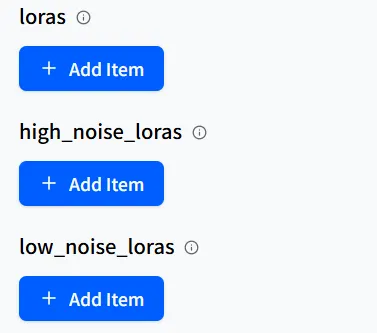

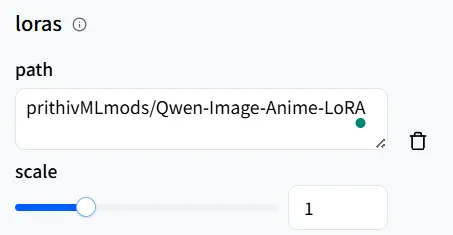

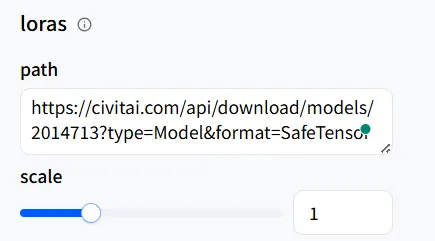

Next, we’ll configure the LoRA-related parameters.

First is the path. This is the route WaveSpeedAI uses to call the LoRA model you want.

Click + Add Item to reveal an input field. The qwen-image/text-to-image-lora pipeline allows adding up to three LoRA models.

If the LoRA is hosted on Hugging Face, WaveSpeedAI provides two ways to reference it. The first is <owner>/<model-name>: the author’s name plus the model name, exactly as shown on the model page (in this example, prithivMLmods/Qwen-Image-Anime-LoRA).

Copy this and paste in the path!

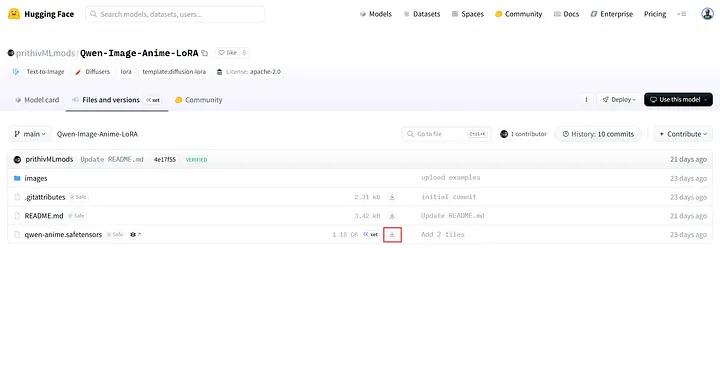

Another method is to go to the model’s Files and versions, right-click the download icon, select Copy link address, and paste the copied URL into the path.

Download Button in Files and versions
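
To make the two accepted forms concrete, here is a small local validator. It is a sketch under stated assumptions: `classify_hf_path` and `check_loras` are hypothetical helpers, WaveSpeedAI’s own path parsing may be more lenient or stricter, and `FILE_NAME.safetensors` is a placeholder for the real file name shown under Files and versions. The limit of three LoRAs is the one stated above for qwen-image/text-to-image-lora.

```python
import re
from urllib.parse import urlparse

# <owner>/<model-name>, e.g. prithivMLmods/Qwen-Image-Anime-LoRA
REPO_ID = re.compile(r"^[A-Za-z0-9][\w.-]*/[\w.-]+$")
MAX_LORAS = 3  # limit mentioned above for qwen-image/text-to-image-lora


def classify_hf_path(path: str) -> str:
    """Return 'repo_id' or 'url' for a Hugging Face LoRA reference; raise ValueError otherwise."""
    path = path.strip()
    parsed = urlparse(path)
    if parsed.scheme in ("http", "https"):
        if parsed.netloc != "huggingface.co":
            raise ValueError(f"not a Hugging Face URL: {path}")
        # A copied download link may end in ?download=true; urlparse keeps that out of .path.
        if not parsed.path.endswith(".safetensors"):
            raise ValueError("URL should point at a .safetensors file")
        return "url"
    if REPO_ID.match(path):
        return "repo_id"
    raise ValueError(f"unrecognized LoRA path: {path!r}")


def check_loras(loras: list) -> None:
    """Validate a loras list: at most MAX_LORAS entries, each with a recognizable path."""
    if len(loras) > MAX_LORAS:
        raise ValueError(f"at most {MAX_LORAS} LoRAs allowed, got {len(loras)}")
    for item in loras:
        classify_hf_path(item["path"])


print(classify_hf_path("prithivMLmods/Qwen-Image-Anime-LoRA"))  # repo_id
# FILE_NAME is a placeholder; use the real file name from Files and versions.
print(classify_hf_path(
    "https://huggingface.co/prithivMLmods/Qwen-Image-Anime-LoRA"
    "/resolve/main/FILE_NAME.safetensors?download=true"
))  # url
```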

Sometimes a model page offers separate high-noise and low-noise LoRA files. These are less common, and the model page usually explains what each one is for.

Fill each one into the field with the matching name, just as you would a normal LoRA, and it will work as expected.

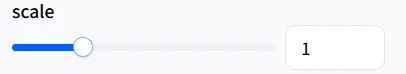

In the loras parameter settings, there is a slider called scale, which you can think of as an “influence/concentration” volume knob. It adjusts how strongly the LoRA affects the base model.

In most cases, the default value of 1 gives good results. If the LoRA’s effect is weaker or stronger than you expected, adjust scale in small steps.
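
To see why scale acts like a volume knob, recall the usual LoRA formulation: the adapted weight is W + s·(B·A), where W is the frozen base weight, B·A is the small low-rank update stored in the LoRA file, and s is the strength. Whether WaveSpeedAI’s scale maps exactly onto s (some implementations also fold in an alpha/rank factor) is an assumption; the toy numbers below are hypothetical.

```python
def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def apply_lora(w, b, a, scale=1.0):
    """Return W + scale * (B @ A): the frozen base weight plus a scaled low-rank update."""
    delta = matmul(b, a)
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


# Toy numbers (hypothetical): a 2x2 base weight and a rank-1 update (B is 2x1, A is 1x2).
W = [[1.0, 0.0], [0.0, 1.0]]
B = [[1.0], [2.0]]
A = [[0.5, 0.5]]

for s in (0.0, 0.5, 1.0):
    print(s, apply_lora(W, B, A, scale=s))
# 0.0 [[1.0, 0.0], [0.0, 1.0]]    LoRA has no effect
# 0.5 [[1.25, 0.25], [0.5, 1.5]]  half strength
# 1.0 [[1.5, 0.5], [1.0, 2.0]]    full strength
```

At s = 0 the base model is untouched; raising s pushes the weights further in the direction the LoRA learned, which is why small adjustments are usually enough.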

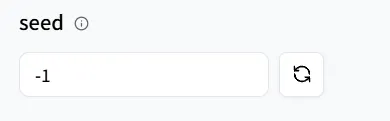

Seed controls randomness. Think of it as the “starting point” for a generation.

When you use the same seed and then adjust the prompt, the overall style and composition will remain mostly consistent. Only the parts you changed in the prompt will be different, making comparison and reproduction easier.
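
The idea behind a seed can be shown with any seeded random generator. This is an analogy, not WaveSpeedAI’s internals: image and video generators typically start from seeded random noise, and the hypothetical `starting_noise` function below stands in for that noise using Python’s `random` module.

```python
import random


def starting_noise(seed: int, n: int = 4) -> list:
    """Draw n Gaussian values from a seeded generator (a stand-in for initial noise)."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(n)]


print(starting_noise(1234) == starting_noise(1234))  # True: same seed, same starting point
print(starting_noise(1234) == starting_noise(5678))  # False: different seed, different start
```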

Great! You’ve completed all the prep work! Let’s begin using the LoRA model!

In the prompt field, first enter the trigger word Qwen Anime for the LoRA model. Then provide the description of the result you want to generate.

Input the trigger word

Then, in the loras section, enter prithivMLmods/Qwen-Image-Anime-LoRA (or its URL) in path, and keep scale at 1.

Set the path

Then set the seed so you can easily reproduce any results you want later.

Random seed number

Finally, click the Run button to generate an anime-style image!

The Result
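
The Playground form maps naturally onto a JSON request body. For readers who prefer scripting, here is a minimal sketch that builds, but does not send, such a request using only Python’s standard library. The field names (prompt, loras with path and scale, seed) come from the Playground. The endpoint URL, the Bearer-token header, the `WAVESPEED_API_KEY` environment variable, the scene description, and the seed value 42 are assumptions, so check WaveSpeedAI’s API documentation before relying on them.

```python
import json
import os
import urllib.request

# Assumptions: the base URL and Bearer-token header reflect our understanding of
# WaveSpeedAI's REST API; verify them against the official API docs.
API_BASE = "https://api.wavespeed.ai/api/v3"
MODEL_ID = "wavespeed-ai/qwen-image/text-to-image-lora"

# Field names mirror the Playground: prompt, loras (path + scale), and seed.
payload = {
    # Trigger word first; the scene description is a hypothetical example.
    "prompt": "Qwen Anime, a young traveler standing in a sunflower field at dusk",
    "loras": [{"path": "prithivMLmods/Qwen-Image-Anime-LoRA", "scale": 1.0}],
    "seed": 42,  # hypothetical fixed seed for reproducibility
}


def build_request(model_id: str, body: dict, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request for the given model."""
    return urllib.request.Request(
        url=f"{API_BASE}/{model_id}",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_request(MODEL_ID, payload, os.environ.get("WAVESPEED_API_KEY", "YOUR_API_KEY"))
print(req.get_method(), req.full_url)
# Sending it would be urllib.request.urlopen(req), then reading the JSON response.
```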

Since we’ve already set a seed earlier, if you’re happy with the background and style details (such as clothing) but want to change the character’s gender, just edit the prompt and click Run again.
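
When you script comparisons like this, the safest habit is to copy the previous request and change only the field you are testing, so the seed, LoRA, and scale stay identical. A small sketch; `variant` is a hypothetical helper, and the prompts and seed are illustrative values.

```python
import copy


def variant(base: dict, **changes) -> dict:
    """Return a deep copy of a request body with only the given fields overridden."""
    new = copy.deepcopy(base)
    new.update(changes)
    return new


# Hypothetical request body; the seed stays fixed across both runs.
base = {
    "prompt": "Qwen Anime, a young man in a red coat on a snowy street",
    "loras": [{"path": "prithivMLmods/Qwen-Image-Anime-LoRA", "scale": 1.0}],
    "seed": 42,
}
swapped = variant(base, prompt="Qwen Anime, a young woman in a red coat on a snowy street")

print(sorted(k for k in base if base[k] != swapped[k]))  # ['prompt']
```

The deep copy matters: the loras list is nested, so a shallow copy would share it between the two requests.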

You know, I just wanted to compare the results

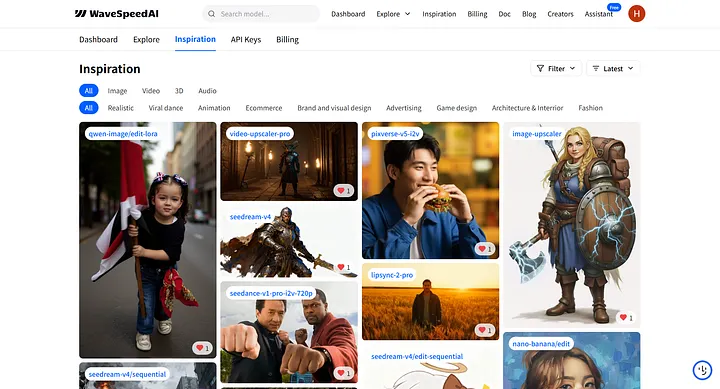

How does it look? Can you see the changes? Try it out yourself! WaveSpeedAI has many base models that support LoRA. Feel free to experiment, then share your work on the Inspiration page with us and the broader creator community!

Inspiration Page

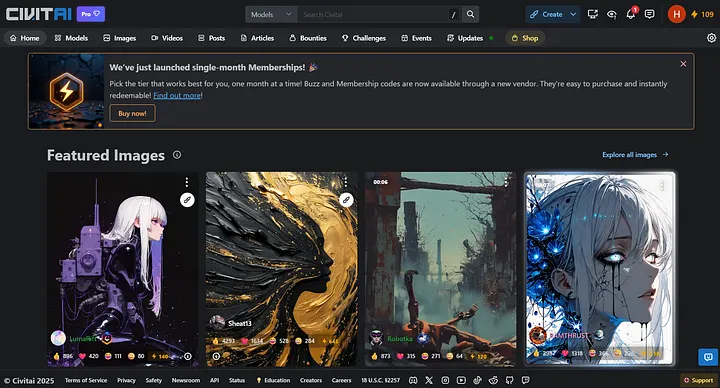

LoRA on Civitai

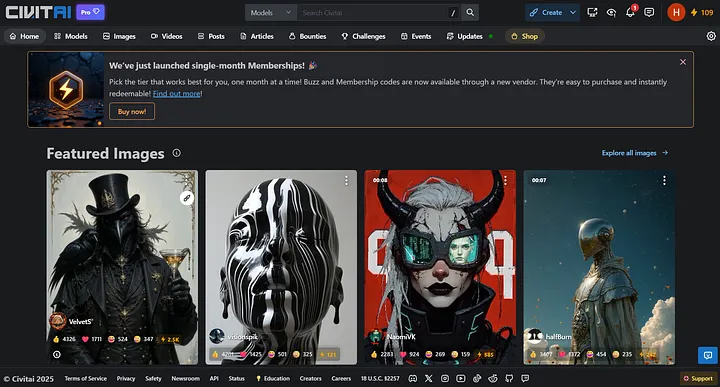

Civitai is a creator-focused community for sharing models, with a wide variety of LoRA resources. You can search by style or theme, browse example results and their parameters, and quickly find a suitable model.

Civitai Page

The search method on Civitai is similar to Hugging Face: enter details like the model version and parameter size into the search box, and add the keyword “LoRA” to quickly narrow down the results (for example: “Wan 2.2 14B LoRA”).

The basic usage is similar to calling models on Hugging Face, so we will only explain the differences in detail.

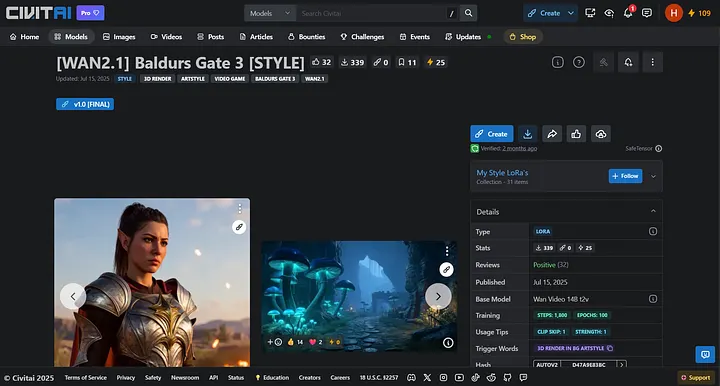

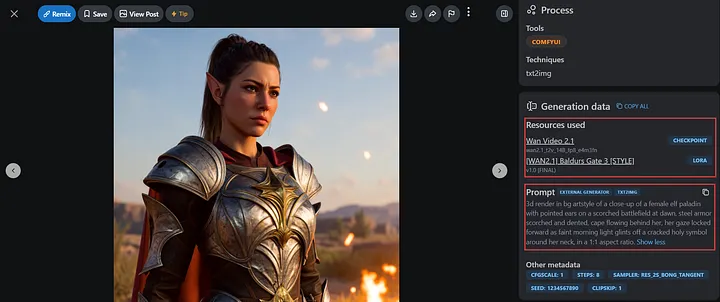

Using game design as an example, if you want to create a character in a style similar to Baldur’s Gate 3, you can try the LoRA [WAN2.1] Baldur’s Gate 3 [STYLE] directly.

[WAN2.1]Baldur’s Gate 3 [STYLE] Page

However, please note that WaveSpeedAI does not support invoking Civitai LoRA models in the <owner>/<model-name> format.

They can only be called via URL, so make sure to check the model information before invoking one.
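
A quick local check can catch the most common mistake here, pasting an <owner>/<model-name> string for a Civitai model. This is a sketch under assumptions: `check_civitai_path` is a hypothetical helper, the expected link shape (`https://civitai.com/api/download/models/<version-id>`) is our understanding of what the Download button links to, and the ID 123456 is made up.

```python
from urllib.parse import urlparse

CIVITAI_HOSTS = {"civitai.com", "www.civitai.com"}


def check_civitai_path(path: str) -> str:
    """Accept only a Civitai download URL; reject the <owner>/<model-name> form."""
    path = path.strip()
    parsed = urlparse(path)
    if parsed.scheme not in ("http", "https"):
        raise ValueError("Civitai LoRAs must be given as a URL, not <owner>/<model-name>")
    if parsed.netloc not in CIVITAI_HOSTS:
        raise ValueError(f"not a Civitai URL: {path}")
    # Assumed shape of the link copied from the Download button.
    if not parsed.path.startswith("/api/download/models/"):
        raise ValueError("expected a download link like /api/download/models/<version-id>")
    return path


# 123456 is a hypothetical version ID.
print(check_civitai_path("https://civitai.com/api/download/models/123456"))
```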

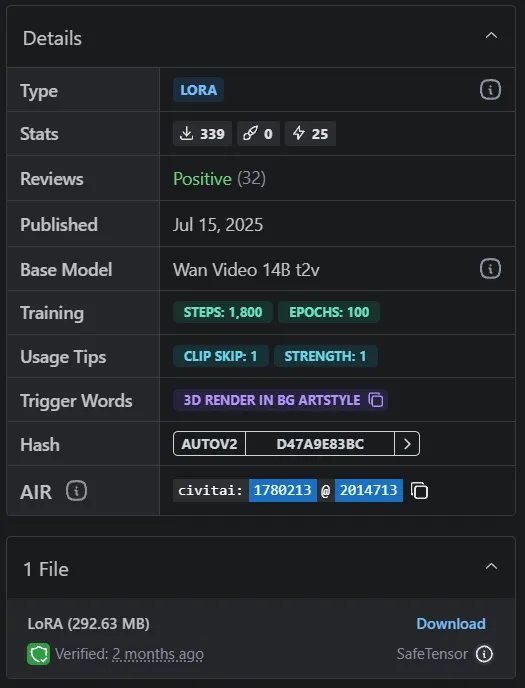

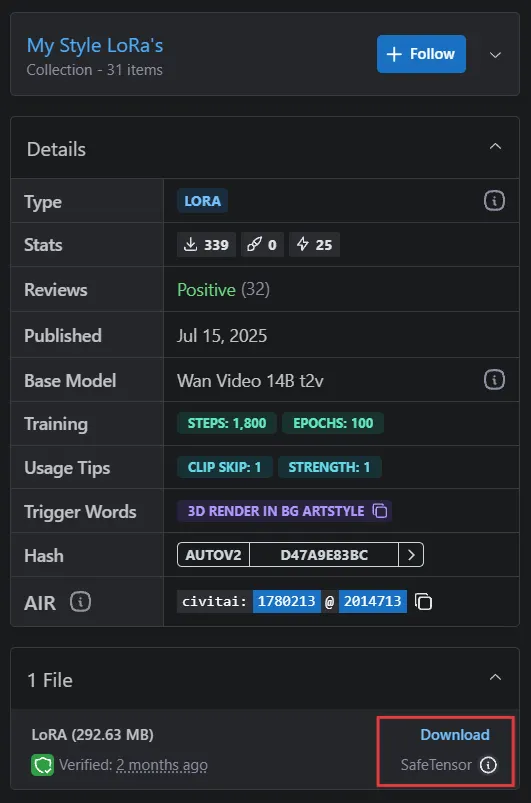

Details of LoRA Model

In the model’s Details section, you can see various information about the model.

The main items to focus on are Base Model and Trigger Words. Here, we see that this LoRA’s base model is Wan Video 14B t2v, and the trigger word is **3d render in bg artstyle**.
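
Forgetting the trigger word is an easy way to get results that barely show the LoRA’s style. A tiny helper can guard against that. Placing the trigger word at the start mirrors the Qwen example earlier in this tutorial, but treat it as a convention rather than a hard rule; `with_trigger` is a hypothetical name and the character description is an example.

```python
def with_trigger(prompt: str, trigger: str) -> str:
    """Put the LoRA trigger word at the start of the prompt unless it is already present."""
    if trigger.casefold() in prompt.casefold():
        return prompt
    return f"{trigger}, {prompt}"


TRIGGER = "3d render in bg artstyle"  # from this LoRA's Details section
# The character description is a hypothetical example.
print(with_trigger("a half-elf bard in leather armor, torchlit tavern", TRIGGER))
# 3d render in bg artstyle, a half-elf bard in leather armor, torchlit tavern
```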

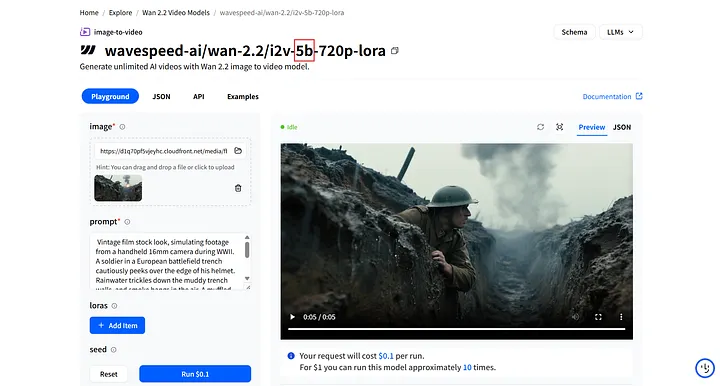

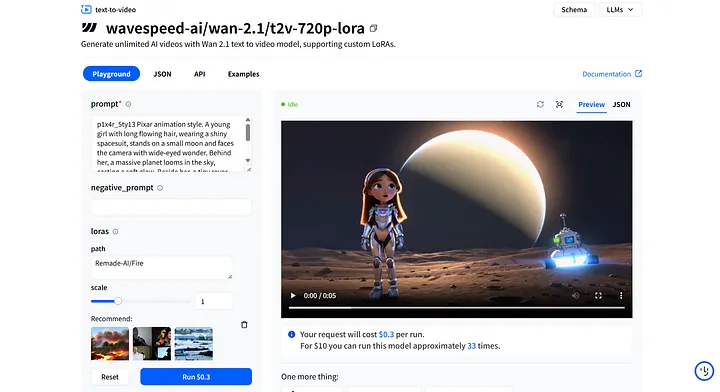

Open WaveSpeedAI and look for wavespeed-ai/wan-2.1/t2v-720p-lora. You can also choose other models that support LoRA (such as wavespeed-ai/wan-2.1/i2v-720p-lora).

wavespeed-ai/wan-2.1/t2v-720p-lora Page

As on Hugging Face, you only need to write your prompt with the LoRA trigger word included, then paste the LoRA’s URL into path.

Use scale to control how much the LoRA influences the base model (the default of 1 is usually sufficient; if the effect feels too weak or too strong, adjust it in small steps), and finally use seed for reproduction and comparison.

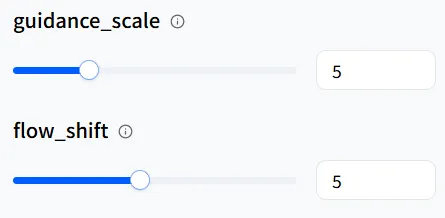

Some models have specific parameters, but on WaveSpeedAI, we’ve already set default values for you. Using them directly will give you good results!

If you want to refine details further, you can try adjusting them. Note, however, that higher values for parameters like num_inference_steps make video generation noticeably slower.

On the model page, you’ll find the download section for the LoRA. Make sure to choose the SafeTensor format so it works on WaveSpeedAI.

Download LoRA

Right-click Download, then copy the link address — this is the URL you’ll use to invoke the LoRA model. Likewise, in the Playground of wavespeed-ai/wan-2.1/t2v-720p-lora, find the loras section, click + Add Item, and paste the URL you just copied into the path.

Paste in path
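
Expressed as a request body, the Wan setup looks much like the Qwen one; only the prompt and the Civitai URL change. In the sketch below, `build_body` is a hypothetical helper, the version ID 123456, the seed, and the character description are made up, and leaving num_inference_steps out is assumed to keep WaveSpeedAI’s default.

```python
import json
from typing import Optional


def build_body(prompt: str, lora_url: str, scale: float = 1.0,
               seed: Optional[int] = None,
               num_inference_steps: Optional[int] = None) -> dict:
    """Assemble a request body for a LoRA-enabled model (field names from the Playground)."""
    body = {"prompt": prompt, "loras": [{"path": lora_url, "scale": scale}]}
    if seed is not None:
        body["seed"] = seed
    if num_inference_steps is not None:
        # Optional override; omitting it is assumed to keep WaveSpeedAI's default.
        body["num_inference_steps"] = num_inference_steps
    return body


body = build_body(
    prompt="3d render in bg artstyle, a dwarf cleric holding a glowing hammer",  # hypothetical
    lora_url="https://civitai.com/api/download/models/123456",  # hypothetical version ID
    seed=7,  # hypothetical
)
print(json.dumps(body, indent=2))
```

Only pass num_inference_steps when you deliberately want to trade generation time for detail.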

If you’re not sure how to get the most out of a LoRA, check the examples on Civitai. Model authors often post sample results that you can click to see the resources and prompt they used.

Example Page with Resources and Prompt

Here, we’ll copy the prompt from the author’s example to try creating our own game character.

The Result we made!

Isn’t it amazing? Your result may differ slightly from the author’s, but you can steer the prompt toward your goal (clarify style, materials, camera, and mood, and add or remove modifiers as needed) until you reach the effect you want. After all, the most meaningful works are not copies of others; they carry your own texture and style, and that is exactly where LoRA and your creativity meet.

Conclusion

By this point, you have learned how to use the LoRA models you prefer on WaveSpeedAI. But please remember, LoRA won’t make aesthetic choices for you. It only stabilizes details after you’ve set the direction. What truly makes a work unique is always your taste and imagination.

So be bold — try, learn, and keep improving. When you share your first results on Inspiration and grow with the community, you’ll see that efficiency is just the beginning. Having your style recognized is the real goal.

Wishing you smooth creating and results just as you envisioned!

© 2025 WaveSpeedAI. All rights reserved.