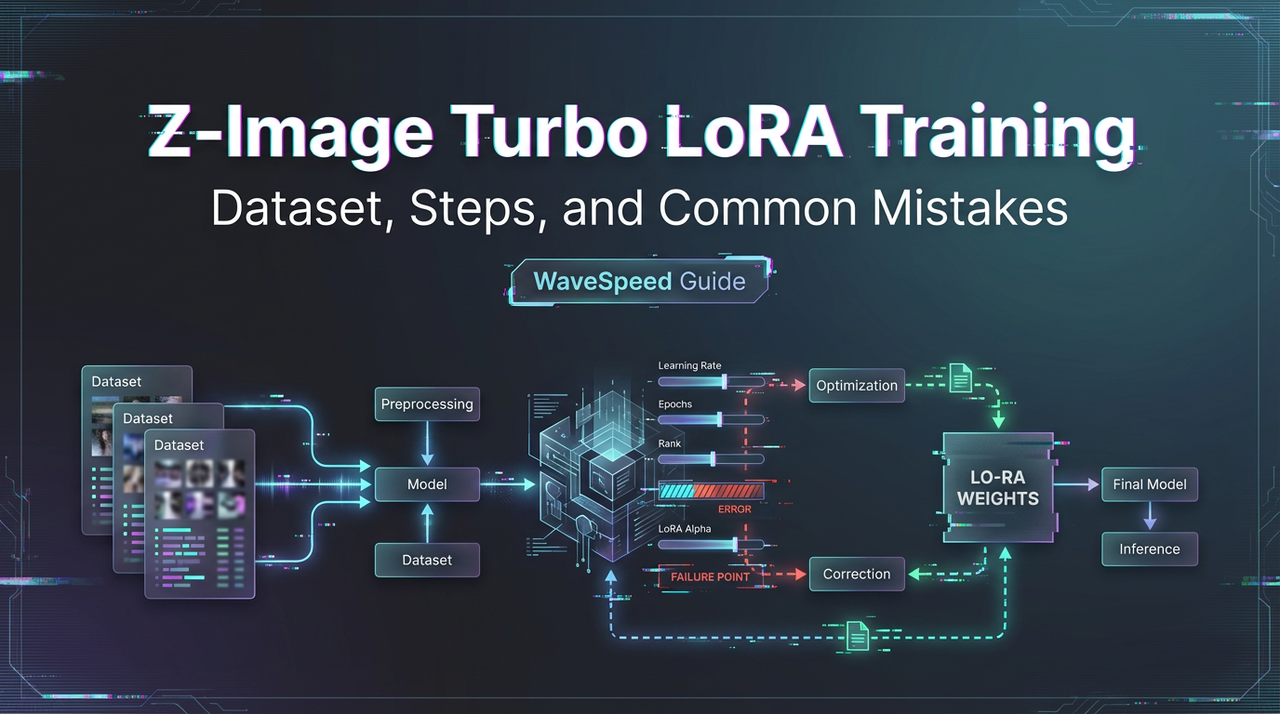

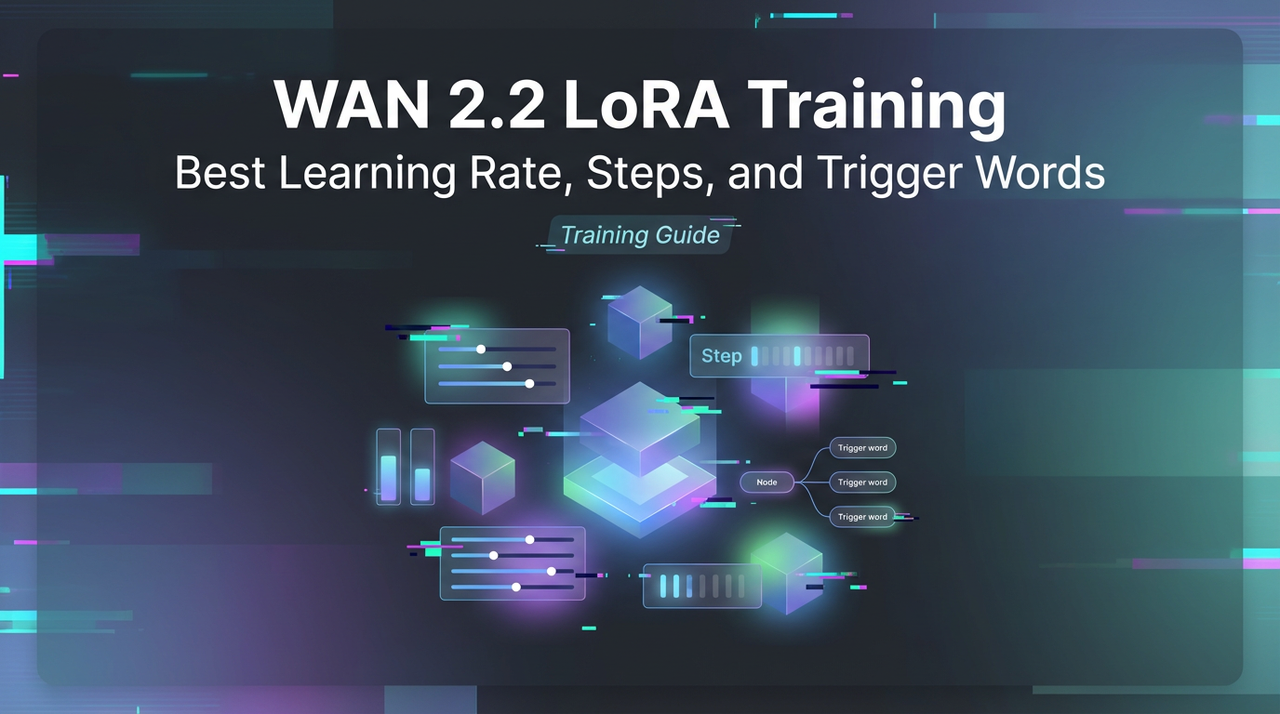

WAN 2.2 LoRA Training Settings: Best Learning Rate, Steps, and Trigger Words

Hey my friends. Do you know? I liked how WAN 2.2 handled skin and lighting, but my usual LoRA training habits didn’t translate cleanly. Faces came out too glossy, and the model kept pulling backgrounds into the same soft studio look. It wasn’t “wrong,” just not mine. So in early January 2026, I ran a handful of short experiments to find WAN 2.2–specific LoRA training settings that felt sane. Nothing flashy. Just enough to dial down the plastic shine, hold a subject steady, and still let the base model breathe.

If you’re looking for a quick template: this isn’t that. I’m sharing what held up over multiple runs, where I hesitated, and how I adjusted. The target keyword here is clear, WAN 2.2 LoRA training settings, but the goal is calmer work, not a new rabbit hole.

Why WAN LoRA Differs

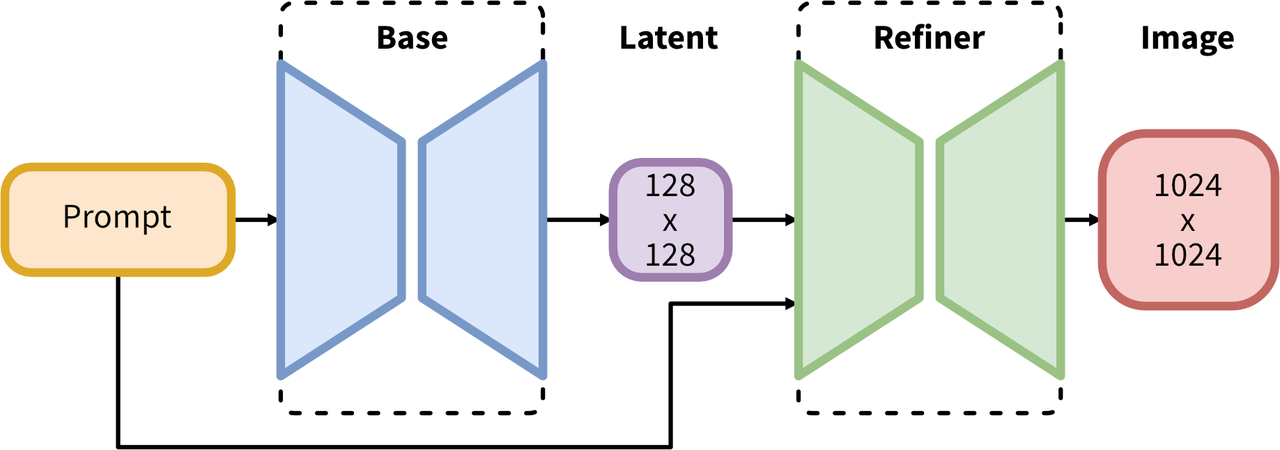

I noticed WAN 2.2 behaves like a very opinionated SDXL checkpoint: it’s tuned for crisp portraits, smooth gradients, and cinematic light. When I trained LoRAs the way I do on plainer SDXL bases, WAN kept pushing my results back toward that polished studio vibe.

Field notes:

- Prompt gravity is strong. Even light weights (0.4–0.6) pull toward clean skin and symmetrical framing.

- Color clustering shows up early. If your dataset leans warm, WAN amplifies it.

- Backgrounds homogenize. Without nudges, it defaults to shallow depth of field and soft bokeh, no matter what you fed it.

What changed in practice: I lowered learning rates, used more regularization images than usual, and kept captions boring on purpose. WAN 2.2 rewards restraint. When I tried to “teach” style and subject at the same time, overfit crept in fast.

If you’re coming from SD 1.5 LoRA habits, think: fewer clever tricks, more controlled baselines. If you’re used to SDXL, go a touch slower than normal and bake in regularization sooner.

Dataset Size Guide

I ran four passes with curated portrait sets (Jan 5–12, 2026), each with tidy captions and mixed lighting. Here’s what held up:

- 8–12 images: Enough to anchor a specific person or product silhouette. Use strong regularization. Keep compositions varied.

- 15–30 images: Sweet spot for single-subject identity with mild style. Add 20–40% non-portrait shots if you want backgrounds to generalize.

- 40–80 images: Useful when you’re encoding a consistent brand look or a multi-angle object line. You’ll need careful captions and more steps.

Things that mattered more than raw count:

- Pose diversity over location diversity. WAN generalizes locations fine: it struggles when every shot is the same angle.

- Exposure balance. If half your set is underexposed, WAN darkens everything later. I standardized histograms before training.

- Caption simplicity. Descriptive, not poetic. “subject_token, denim jacket, window light, medium close-up” beats “moody candid portrait near a rainy window.”

For identity LoRAs, I landed on 12–20 images as a dependable floor. For style LoRAs, 30–50 gave me room to breathe without collapsing to WAN’s default portrait sheen.

LR/Steps Baseline

The WAN 2.2 LoRA training settings that felt stable for me (Kohya-ss and SDXL base):

- Rank (dim): 16–32. I default to 16 for identity, 32 for style.

- Alpha: match dim (e.g., 16/16). Lower alpha made results brittle.

- Optimizer: AdamW with weight_decay 0.01.

- Learning rate: 5e-5 for identity, 7e-5 to 1e-4 for style. WAN punishes high LR with plasticky skin and loss spikes.

- Scheduler: cosine with warmup. Warmup 5% of total steps.

- Batch size: 2–4 (A100/4090). Gradient accumulation to simulate 8 if needed.

- Resolution: SDXL-native 1024 on the long side with bucketing (e.g., 1024×768, 1024×1024). Don’t upsize: it only memorizes noise.

- Epochs/steps: I stop by steps, not epochs.

- 12–20 images: 1,200–2,000 steps

- 30–50 images: 2,000–3,500 steps

- 60–80 images: 3,500–5,000 steps

Sanity checks I used:

- Save every 200–400 steps and preview with a fixed prompt + seed.

- If samples sharpen too fast before step 600, LR is high.

- If identity doesn’t lock by ~1,400 steps on a 20-image set, captions or regularization are off more than LR.

These numbers won’t win a leaderboard, but they resist WAN’s tendency to sand everything smooth.

Trigger Word Strategy

I kept triggers minimal. WAN already has a strong prior: stacking cute tokens just adds noise.

What I did:

- One instance token + one class token. Example: “sora_person” as the instance, “person” or “woman/man” as the class in captions.

- Put the instance token at the start of each caption. Keep it lowercase, one word if you can.

- Avoid style tokens in the same LoRA unless you truly want a style LoRA. Mixing identity and style in WAN 2.2 got muddy fast.

In prompts, I only call the LoRA and the instance token, then layer gentle steering:

- lora: name at 0.5–0.8

- instance token early in the prompt

- style words late and light (“natural light, clean color, minimal retouch”)

I tried invented “WAN-style” triggers out of curiosity. They didn’t help. The base already does that part, the LoRA should carve out what you need, not re-announce what WAN 2.2 is good at.

Regularization Images

This was the quiet hero. I used 1–3x regularization images per training image, class-matched to captions.

- For identity LoRAs: 20–60 reg images labeled as the same class (“person”). I generated them from WAN 2.2 itself with plain prompts: “photo of a person, neutral background, medium close-up, natural light.”

- For object LoRAs: reg images per product class (“shoe,” “bottle,” “chair”). Keep them accurate: don’t mix classes.

Why it mattered: WAN 2.2 likes to imprint its portrait aesthetic on everything. Reg images gave it permission to keep the base’s range while letting the LoRA hold identity. Without them, my LoRAs over-accented skin smoothing and bokeh, then refused to leave.

Settings that felt right:

Settings that felt right:

- Keep reg images visually bland and well-exposed.

- Don’t caption reg images with instance tokens: only the class.

- Mix 10–20% of training batches with reg images throughout (not just at the start).

If you’re short on time, add reg images before you tweak the optimizer. It’s the bigger lever here.

Overfit Detection

I didn’t rely on loss alone. WAN hides overfit behind pretty samples. These were my tells:

- Prompt inertia: changing the prompt barely changes the output. Everything drifts back to the same lens and background.

- Skin plasticity: pores vanish uniformly, especially around cheeks and foreheads, even with gritty lighting prompts.

- Pose echoing: repeated shoulders/neck angles across varied seeds.

- Color lock: a warm tint that clings across different white-balance cues.

Quick checks I ran every 200–400 steps:

- Adversarial prompt: switch to “harsh overhead office light, fluorescent, unflattering” and see if texture returns.

- Background flip: force “busy street, cluttered shelves” to test composition flexibility.

- Negative prompt pressure: add “over-smooth skin, plastic texture, heavy retouch” and see if it listens.

If two of those tests failed in a row, I rolled back to the previous checkpoint and either added more reg images or dropped LR by a notch.

Fix Collapses

I hit two kinds of collapse: identity melt and style lock.

When identity melted (faces drifted, eyes misaligned):

- Lower LR one step (e.g., 7e-5 → 5e-5).

- Increase rank from 16 to 32 only if the dataset has enough angles: otherwise it memorizes poses, not identity.

- Tighten captions: cut adjectives, keep focal length hints, keep instance token first.

- Add 10–20 more reg images of the same class.

When style locked (everything looked like WAN’s default studio portrait):

- Add non-portrait shots to the dataset (environmental, hands, partial body).

- Increase steps by 400–800 with cosine schedule: don’t spike LR.

- Reduce LoRA weight at inference (0.8 → 0.5) and nudge guidance lower (CFG 5–6 → 3.5–4.5). WAN responds well to lower CFG.

- If using noise offset or heavy color aug, dial them back. WAN already stabilizes color: extra aug made my outputs muddy.

Other knobs that helped:

- Gradient clipping at 1.0 to avoid sudden spikes.

- EMA off for small runs: with tiny datasets, EMA made identity lag behind previews.

- Seed discipline: preview with a fixed seed every time. Small changes are easier to judge when everything else stands still.

Export & Reuse

A few habits saved me time later:

- Save incremental checkpoints with clear names: model, rank, LR, steps, and date. Example: wan22_lora_id_r16_lr5e-5_s1800_2026-01-09.safetensors.

- Keep the training prompt, validation prompt, and seed in the LoRA metadata if your tool supports it. Future me always thanks past me.

- Version-sticky usage: LoRAs trained on WAN 2.2 worked best on WAN 2.2 and close siblings. They were usable on other SDXL bases, but color and skin handling shifted. I treat them as “WAN-first.”

- Inference defaults that felt good:

- LoRA weight 0.5–0.8 (identity), 0.3–0.6 (style overlay)

- CFG 3.5–5.5

- 30–40 steps with a stable sampler (DPM++ 2M Karras worked fine)

- Keep prompts short: WAN hears subtle nudges

If you want to merge LoRAs: I had better luck stacking small, single-purpose LoRAs (identity at 0.6 + mild color look at 0.3) than training one big “everything” LoRA. WAN respects modularity.

For more detailed WAN 2.2 workflows and examples, check out the official ComfyUI documentation.

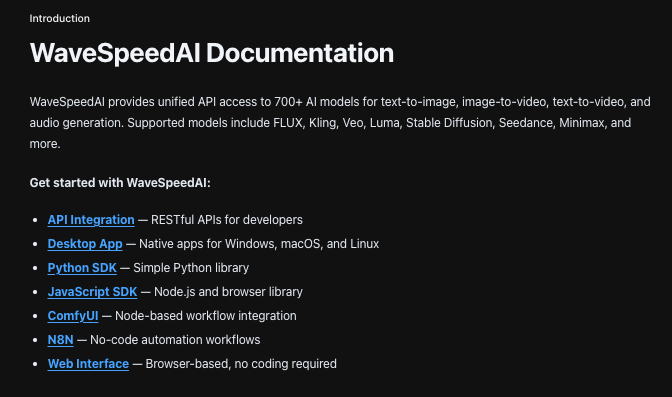

For training, I still prefer running things locally where I can see every knob. But when it comes to inference, model routing, or switching between base models without juggling APIs, you can try our WaveSpeed. It keeps different models behind one consistent endpoint so I can focus on prompts and outputs instead of infrastructure.

For training, I still prefer running things locally where I can see every knob. But when it comes to inference, model routing, or switching between base models without juggling APIs, you can try our WaveSpeed. It keeps different models behind one consistent endpoint so I can focus on prompts and outputs instead of infrastructure.

Related Articles

Run GLM-4.7-Flash Locally: Ollama, Mac & Windows Setup

Access GLM-4.7-Flash via WaveSpeed API

GLM-4.7-Flash API: Chat Completions & Streaming Quick Start

GLM-4.7-Flash vs GLM-4.7: Which One Fits Your Project?

GLM-4.7-Flash: Release Date, Free Tier & Key Features (2026)