Kling Reference-to-Video: Generate New Videos from Your Subjects — Now on WaveSpeedAI

Introducing Kling Reference-to-Video on WaveSpeedAI

WaveSpeedAI is thrilled to introduce Kling Reference-to-Video, a powerful capability within Kuaishou’s unified multimodal video model Kling Omni Video O1. This mode allows you to generate entirely new video content based on subject reference images or videos, while maintaining consistent appearance, identity, and scene logic across all frames.

Whether you’re producing character-driven content, product demos, or creative cinematic scenes, Kling Reference-to-Video lets you place your subjects into new environments and actions — all with stable, accurate identity reconstruction.

Key Features of Kling Reference-to-Video

1. Multi-Reference Subject Creation

Kling Reference-to-Video can build subjects using multiple reference images, allowing the model to:

- Extract features from characters, props, or scenes

- Maintain identity consistency across generated videos

- Create fresh scenarios using familiar subjects

This ensures that the generated video looks like the same person, object, or environment from your reference inputs.

2. Creative Freedom with Identity Preservation

With Kling Reference-to-Video, you can generate completely new content while keeping your subject intact:

- New poses and motions

- Different environments and scenarios

- Various camera angles and movements

- Cinematic reinterpretations of your characters

Your subject stays consistent — no distortion, no unwanted drift.

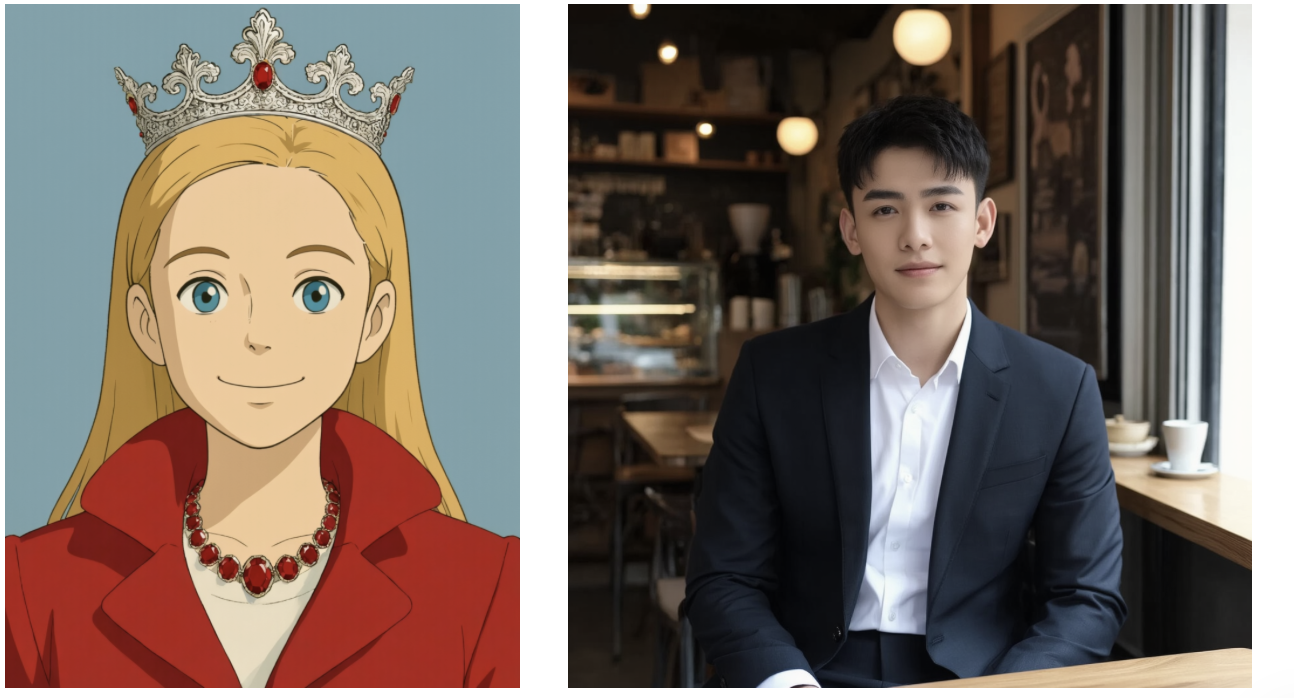

Prompt: Use image 1 as a blonde princess-like girl in a red coat and jeweled crown, and image 2 as a well-dressed young man in a dark suit. They walk side by side through a lively Tokyo street at night, similar to Shibuya: neon signs, shopfronts, billboards, and a soft flow of pedestrians around them. The camera moves in a smooth tracking shot in front of them at chest level as they walk toward the viewer, chatting and smiling naturally. Street lights and neon reflections shimmer on the pavement, background slightly blurred with shallow depth of field, overall mood warm, modern, and slightly dreamy, keeping the faces clearly recognizable from the reference images.
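The prompt above refers to its reference images by number ("image 1", "image 2"). As a minimal sketch of how a script might check that every numbered reference has a matching image before submitting a job, the Python below parses those mentions and assembles a payload. The helper names and the `prompt` and `reference_images` keys are hypothetical and chosen for illustration only; they are not taken from the WaveSpeedAI API, so consult the official documentation for the actual request format.

```python
import re


def referenced_image_indices(prompt: str) -> set[int]:
    """Return the 1-based image numbers mentioned as 'image N' in the prompt."""
    matches = re.findall(r"\bimage\s+(\d+)\b", prompt, flags=re.IGNORECASE)
    return {int(n) for n in matches}


def build_request(prompt: str, reference_images: list[str]) -> dict:
    """Assemble a hypothetical request payload after checking the references.

    The keys "prompt" and "reference_images" are illustrative assumptions,
    not a documented request schema.
    """
    if not reference_images:
        raise ValueError("at least one reference image is required")

    mentioned = referenced_image_indices(prompt)
    # Any mentioned number outside 1..len(reference_images) has no image behind it.
    missing = sorted(i for i in mentioned if i < 1 or i > len(reference_images))
    if missing:
        raise ValueError(
            f"prompt mentions image(s) {missing} but only "
            f"{len(reference_images)} reference image(s) were supplied"
        )
    return {"prompt": prompt, "reference_images": list(reference_images)}


payload = build_request(
    "Use image 1 as a girl in a red coat and image 2 as a young man in a dark suit. "
    "They walk side by side through a Tokyo street at night.",
    ["princess.png", "young_man.png"],  # placeholder file names
)
print(sorted(payload))
```

Catching a mismatch locally, for example a prompt that mentions image 3 when only two images are attached, avoids spending a generation on a prompt the model cannot ground.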

3. Subject Consistency Technology

Powered by advanced feature extraction, the model ensures:

- Stable appearance across every frame

- Consistent clothing, accessories, and props

- Preserved facial features, expressions, and body structure

- Coherent background and environmental elements

This makes Kling Reference-to-Video ideal for storytelling, product videos, and brand identity workflows.

Why Kling Reference-to-Video Matters

Reference-driven video creation unlocks powerful opportunities for:

- Character-based storytelling

- Product advertisement and motion showcases

- Digital avatars and virtual influencers

- Animation and pre-visualization

- Branded content with consistent identity

Traditionally, creating such videos has required complex 3D modeling, manual animation, or expensive production pipelines.

Kling Reference-to-Video reduces this workflow to a set of reference images and a single prompt.

Conclusion

Kling Reference-to-Video brings new creative possibilities to anyone working with characters, products, or visual concepts.

By combining multi-reference identity extraction with high-quality video generation, it allows creators to produce consistent, dynamic, and cinematic content — all from a few reference images.

WaveSpeedAI makes it effortless to use. No installation, no setup: just open your browser and generate your first video.

👉 Try Kling Reference-to-Video on WaveSpeedAI today and bring your subjects to life.

© 2025 WaveSpeedAI. All rights reserved.